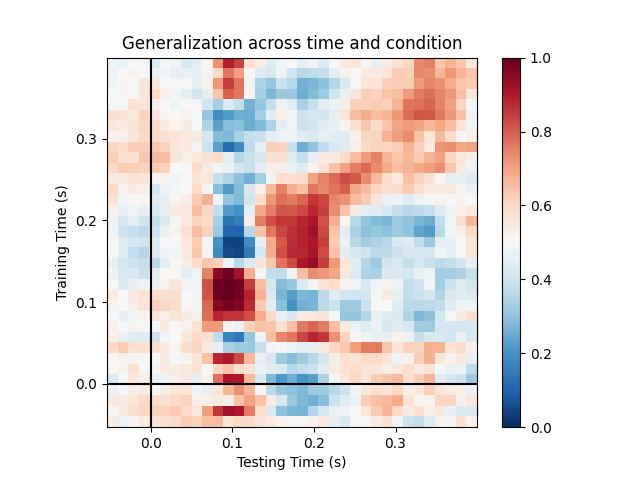

Decoding sensor space data with generalization across time and conditions

This example runs the analysis described in [1]. It shows how to fit a linear classifier that identifies a discriminative topography at a given time instant, and then tests whether that same linear model accurately predicts every time sample of a second set of conditions.
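The idea of training at one time instant and testing at every other one can be sketched without any dataset. The block below is a minimal illustration on synthetic data, not the implementation used by `GeneralizingEstimator`: the helper names (`simulate`, `roc_auc`, `temporal_generalization`) are hypothetical, and a simple mean-difference linear classifier is swapped in for the logistic regression used in this example.

```python
import numpy as np


def simulate(rng, n_epochs=80, n_channels=5, n_times=6, onset=2):
    """Noise epochs in which class True carries a fixed topography from `onset` on."""
    y = np.arange(n_epochs) % 2 == 1
    X = rng.normal(size=(n_epochs, n_channels, n_times))
    pattern = np.linspace(1.0, 2.0, n_channels)
    X[y, :, onset:] += pattern[:, None]
    return X, y


def roc_auc(labels, decision):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = decision[labels]
    neg = decision[~labels]
    greater = (pos[:, None] > neg[None, :]).mean()
    ties = (pos[:, None] == neg[None, :]).mean()
    return greater + 0.5 * ties


def temporal_generalization(X_train, y_train, X_test, y_test):
    """Fit one linear classifier per training time, score it at every testing time."""
    n_train_times = X_train.shape[2]
    n_test_times = X_test.shape[2]
    scores = np.zeros((n_train_times, n_test_times))
    for t_train in range(n_train_times):
        X_t = X_train[:, :, t_train]
        # Mean-difference weights: a stand-in for a fitted linear classifier.
        w = X_t[y_train].mean(axis=0) - X_t[~y_train].mean(axis=0)
        for t_test in range(n_test_times):
            decision = X_test[:, :, t_test] @ w
            scores[t_train, t_test] = roc_auc(y_test, decision)
    return scores


rng = np.random.default_rng(0)
X_a, y_a = simulate(rng)  # plays the role of the first set of conditions
X_b, y_b = simulate(rng)  # plays the role of the second set of conditions
scores = temporal_generalization(X_a, y_a, X_b, y_b)
print(np.round(scores, 2))
```

Rows of `scores` index the training time and columns the testing time, so a block of high values off the diagonal indicates that a topography learned at one latency also discriminates the classes at another.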

# Authors: Jean-Remi King <jeanremi.king@gmail.com>
#          Alexandre Gramfort <alexandre.gramfort@inria.fr>
#          Denis Engemann <denis.engemann@gmail.com>
#
# License: BSD (3-clause)

import matplotlib.pyplot as plt

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

import mne

from mne.datasets import sample

from mne.decoding import GeneralizingEstimator

print(__doc__)

# Preprocess data

data_path = str(sample.data_path())  # str() keeps the path concatenations below valid if a Path object is returned

# Load and filter data, set up epochs

raw_fname = data_path + '/MEG/sample/sample_audvis_filt-0-40_raw.fif'

events_fname = data_path + '/MEG/sample/sample_audvis_filt-0-40_raw-eve.fif'

raw = mne.io.read_raw_fif(raw_fname, preload=True)

picks = mne.pick_types(raw.info, meg=True, exclude='bads') # Pick MEG channels

raw.filter(1., 30., fir_design='firwin') # Band pass filtering signals

events = mne.read_events(events_fname)

event_id = {'Auditory/Left': 1, 'Auditory/Right': 2,
            'Visual/Left': 3, 'Visual/Right': 4}

tmin = -0.050

tmax = 0.400

# decimate to make the example faster to run, but then use verbose='error' in
# the Epochs constructor to suppress warning about decimation causing aliasing

decim = 2

epochs = mne.Epochs(raw, events, event_id=event_id, tmin=tmin, tmax=tmax,
                    proj=True, picks=picks, baseline=None, preload=True,
                    reject=dict(mag=5e-12), decim=decim, verbose='error')

Out:

Opening raw data file /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis_filt-0-40_raw.fif...

Read a total of 4 projection items:

PCA-v1 (1 x 102) idle

PCA-v2 (1 x 102) idle

PCA-v3 (1 x 102) idle

Average EEG reference (1 x 60) idle

Range : 6450 ... 48149 = 42.956 ... 320.665 secs

Ready.

Reading 0 ... 41699 = 0.000 ... 277.709 secs...

Filtering raw data in 1 contiguous segment

Setting up band-pass filter from 1 - 30 Hz

FIR filter parameters

---------------------

Designing a one-pass, zero-phase, non-causal bandpass filter:

- Windowed time-domain design (firwin) method

- Hamming window with 0.0194 passband ripple and 53 dB stopband attenuation

- Lower passband edge: 1.00

- Lower transition bandwidth: 1.00 Hz (-6 dB cutoff frequency: 0.50 Hz)

- Upper passband edge: 30.00 Hz

- Upper transition bandwidth: 7.50 Hz (-6 dB cutoff frequency: 33.75 Hz)

- Filter length: 497 samples (3.310 sec)

We will train the classifier to discriminate visual from auditory trials using only the trials with left-sided stimuli, and then test it on the trials with right-sided stimuli.

clf = make_pipeline(StandardScaler(), LogisticRegression(solver='lbfgs'))

time_gen = GeneralizingEstimator(clf, scoring='roc_auc', n_jobs=1,
                                 verbose=True)

# Fit classifiers on the epochs where the stimulus was presented to the left.

# Note that the label y encodes the modality: True for visual trials
# (event codes 3 and 4) and False for auditory trials (event codes 1 and 2).

time_gen.fit(X=epochs['Left'].get_data(),
             y=epochs['Left'].events[:, 2] > 2)

Out:

100%|##########| Fitting GeneralizingEstimator : 35/35 [00:01<00:00, 33.96it/s]
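The train/test split relies on two properties of the event codes: the side of the stimulus picks the trials, and the comparison `> 2` turns the code into a visual-versus-auditory label. A minimal sketch with NumPy only, using a made-up list of event codes (the `codes` array is hypothetical and stands in for the third column of an events array):

```python
import numpy as np

event_id = {'Auditory/Left': 1, 'Auditory/Right': 2,
            'Visual/Left': 3, 'Visual/Right': 4}

# Hypothetical event codes for eight trials (illustration only).
codes = np.array([1, 3, 2, 4, 3, 1, 4, 2])

# Codes whose condition name carries the 'Left' tag: 1 and 3.
left_codes = [code for name, code in event_id.items()
              if name.endswith('/Left')]
is_left = np.isin(codes, left_codes)

# Codes 3 and 4 are visual, codes 1 and 2 are auditory.
is_visual = codes > 2

y_train = is_visual[is_left]    # labels of the left-stimulus trials
y_test = is_visual[~is_left]    # labels of the right-stimulus trials
print(y_train, y_test)
```

Because the label depends only on the modality, both subsets contain the same two classes, which is what allows a classifier fitted on one side to be scored on the other.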

Score on the epochs where the stimulus was presented to the right.

scores = time_gen.score(X=epochs['Right'].get_data(),
                        y=epochs['Right'].events[:, 2] > 2)

Out:

100%|##########| Scoring GeneralizingEstimator : 1225/1225 [00:03<00:00, 314.39it/s]
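The progress output reports 35 fitted classifiers and 1225 scores, that is, one score for each of the 35 × 35 pairs of training and testing times. The sketch below shows a few ways to read such a matrix; the values are random placeholders rather than real results, and the variable names are chosen for illustration.

```python
import numpy as np

n_times = 35  # number of time points, as reported in the fitting output
rng = np.random.default_rng(0)
# Placeholder values standing in for the real scores; not actual results.
scores = rng.uniform(0.4, 1.0, size=(n_times, n_times))

# One score per (training time, testing time) pair: 35 * 35 = 1225.
n_pairs = scores.size

# Diagonal: trained and tested at the same latency.
diagonal = np.diag(scores)

# Row i: how the classifier trained at time i performs at every testing time.
first_row = scores[0]

# For each training time, the testing-time index with the highest score.
best_test_index = scores.argmax(axis=1)
print(n_pairs, diagonal.shape, best_test_index.shape)
```

Reading along a row rather than along the diagonal is what distinguishes temporal generalization from ordinary time-resolved decoding.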

Plot the scores as a matrix of training time by testing time.

fig, ax = plt.subplots(1)

im = ax.matshow(scores, vmin=0, vmax=1., cmap='RdBu_r', origin='lower',
                extent=epochs.times[[0, -1, 0, -1]])

ax.axhline(0., color='k')

ax.axvline(0., color='k')

ax.xaxis.set_ticks_position('bottom')

ax.set_xlabel('Testing Time (s)')

ax.set_ylabel('Training Time (s)')

ax.set_title('Generalization across time and condition')

plt.colorbar(im, ax=ax)

plt.show()
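The `extent` argument in the plotting code uses integer-array indexing to build the four axis limits in one step, in the order Matplotlib expects (left, right, bottom, top). A small sketch with a hypothetical time axis (the `times` array below is an assumption, not the actual `epochs.times`):

```python
import numpy as np

# Hypothetical time axis in seconds, standing in for epochs.times.
times = np.linspace(-0.05, 0.4, 35)

# Pick first and last sample twice: once for the x axis, once for the y axis.
extent = times[[0, -1, 0, -1]]
left, right, bottom, top = extent
print(extent)
```

Since training and testing share the same time axis, both image axes span the same range, and the lines drawn at 0 mark stimulus onset on each of them.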

References

[1] Jean-Rémi King and Stanislas Dehaene. Characterizing the dynamics of mental representations: the temporal generalization method. Trends in Cognitive Sciences, 18(4):203–210, 2014. doi:10.1016/j.tics.2014.01.002.
