Compute and visualize ERDS maps

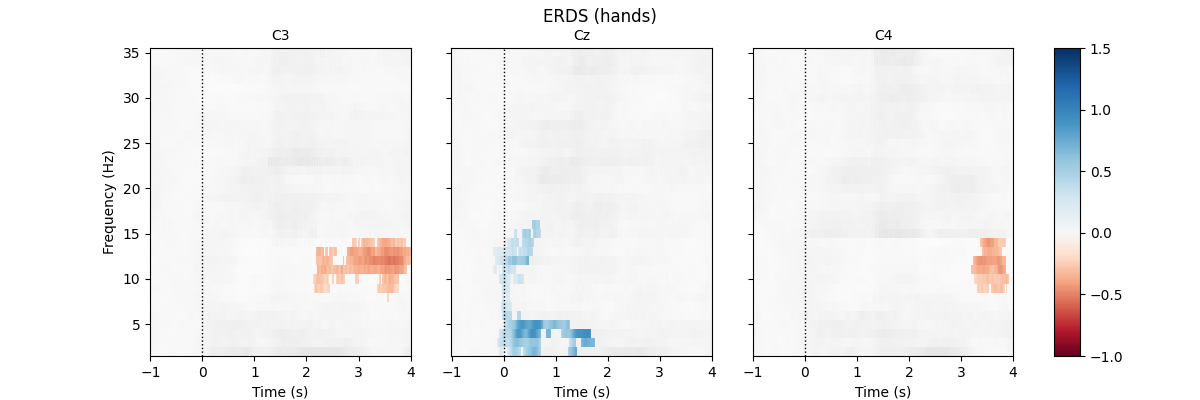

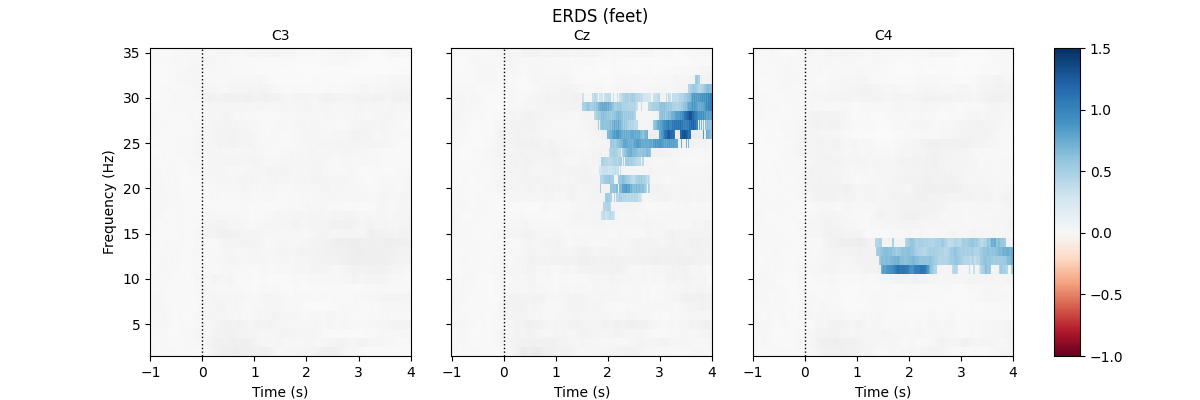

This example calculates and displays ERDS maps of event-related EEG data. ERDS (sometimes also written as ERD/ERS) is short for event-related desynchronization (ERD) and event-related synchronization (ERS) [1]. Conceptually, ERD corresponds to a decrease in power in a specific frequency band relative to a baseline, and ERS corresponds to an increase in power. An ERDS map is a time/frequency representation of ERD/ERS over a range of frequencies [2]. ERDS maps are also known as ERSP (event-related spectral perturbation) [3].
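As a minimal sketch of the definition above, ERD/ERS can be written as the relative change in band power with respect to the mean power in a baseline interval, (A - R) / R. The helper name `erds_percent` and the toy numbers are illustrative assumptions; this has the form of a "percent" baseline correction and is not claimed to be the exact computation of any particular library.

```python
import numpy as np


def erds_percent(power, times, baseline):
    """Relative power change with respect to the baseline mean.

    power    : array, shape (..., n_times), band power over time
    times    : array, shape (n_times,), time points in seconds
    baseline : (start, stop) of the reference interval in seconds
    Negative values indicate ERD, positive values indicate ERS.
    """
    power = np.asarray(power, dtype=float)
    times = np.asarray(times, dtype=float)
    in_baseline = (times >= baseline[0]) & (times <= baseline[1])
    ref = power[..., in_baseline].mean(axis=-1, keepdims=True)
    return (power - ref) / ref


# toy example: power halves after the event at t = 0
times = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])
power = np.array([4.0, 4.0, 4.0, 2.0, 2.0])
erds = erds_percent(power, times, baseline=(-1.0, 0.0))
print(erds)  # the last two samples show an ERD of -0.5 (a 50% decrease)
```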

We use a public EEG BCI data set, available at PhysioNet, that contains two different motor imagery tasks: imagined hand movement and imagined feet movement. Our goal is to generate ERDS maps for each of the two tasks.

First, we load the data and create epochs of 5 s length. The data sets contain multiple channels, but we will only consider the three channels C3, Cz, and C4. We compute maps containing frequencies ranging from 2 to 35 Hz. We map ERD to red and ERS to blue, which is the convention in many ERDS publications. Finally, we perform cluster-based permutation tests to estimate significant ERDS values (corrected for multiple comparisons within channels).
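The numbers in this paragraph can be checked with a little arithmetic. The sketch below assumes a sampling rate of 160 Hz (inferred from the run length printed in the output, 20000 samples covering roughly 125 s) and assumes that the multitaper window length equals `n_cycles / freqs`; the variable names follow the script but the snippet is only an illustration.

```python
import numpy as np

sfreq = 160.0  # assumption: inferred from 20000 samples spanning ~125 s
tmin, tmax = -1.0, 4.0  # analysis interval around each event (s)
pad = 0.5  # extra data kept on each side of the epoch (s)

# frequencies of interest: 2, 3, ..., 35 Hz
freqs = np.arange(2, 36, 1)

# with n_cycles equal to the frequency, every frequency gets the same
# window length (n_cycles / freqs = 1 s)
n_cycles = freqs
window_s = n_cycles / freqs

# number of samples per padded epoch, counting both end points
n_times = int(round((tmax + pad - (tmin - pad)) * sfreq)) + 1

print(len(freqs))           # number of frequency bins
print(np.unique(window_s))  # a single window length shared by all bins
print(n_times)              # samples per padded epoch
```

Under these assumptions the padded epoch holds 961 samples, which agrees with the "961 original time points" line in the output. The padding is removed again by `tfr.crop(tmin, tmax)` in the script, leaving the 5 s interval from -1 to 4 s.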

References

- [1] G. Pfurtscheller, F. H. Lopes da Silva. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clinical Neurophysiology 110(11), 1842-1857, 1999.

- [2] B. Graimann, J. E. Huggins, S. P. Levine, G. Pfurtscheller. Visualization of significant ERD/ERS patterns in multichannel EEG and ECoG data. Clinical Neurophysiology 113(1), 43-47, 2002.

- [3] S. Makeig. Auditory event-related dynamics of the EEG spectrum and effects of exposure to tones. Electroencephalography and Clinical Neurophysiology 86(4), 283-293, 1993.

Out:

Extracting EDF parameters from /home/circleci/mne_data/MNE-eegbci-data/files/eegmmidb/1.0.0/S001/S001R06.edf...

EDF file detected

Setting channel info structure...

Creating raw.info structure...

Reading 0 ... 19999 = 0.000 ... 124.994 secs...

Extracting EDF parameters from /home/circleci/mne_data/MNE-eegbci-data/files/eegmmidb/1.0.0/S001/S001R10.edf...

EDF file detected

Setting channel info structure...

Creating raw.info structure...

Reading 0 ... 19999 = 0.000 ... 124.994 secs...

Extracting EDF parameters from /home/circleci/mne_data/MNE-eegbci-data/files/eegmmidb/1.0.0/S001/S001R14.edf...

EDF file detected

Setting channel info structure...

Creating raw.info structure...

Reading 0 ... 19999 = 0.000 ... 124.994 secs...

Used Annotations descriptions: ['T1', 'T2']

Not setting metadata

Not setting metadata

45 matching events found

No baseline correction applied

0 projection items activated

Loading data for 45 events and 961 original time points ...

0 bad epochs dropped

Not setting metadata

Applying baseline correction (mode: percent)

Using a threshold of 1.724718

stat_fun(H1): min=-8.523637 max=3.197747

Running initial clustering

Found 78 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

13%|#3 | : 13/99 [00:00<00:00, 376.68it/s]

26%|##6 | : 26/99 [00:00<00:00, 380.79it/s]

39%|###9 | : 39/99 [00:00<00:00, 382.12it/s]

55%|#####4 | : 54/99 [00:00<00:00, 398.73it/s]

68%|######7 | : 67/99 [00:00<00:00, 395.89it/s]

82%|########1 | : 81/99 [00:00<00:00, 399.40it/s]

98%|#########7| : 97/99 [00:00<00:00, 411.79it/s]

100%|##########| : 99/99 [00:00<00:00, 409.36it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

Using a threshold of -1.724718

stat_fun(H1): min=-8.523637 max=3.197747

Running initial clustering

Found 65 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

14%|#4 | : 14/99 [00:00<00:00, 413.31it/s]

30%|### | : 30/99 [00:00<00:00, 444.74it/s]

40%|#### | : 40/99 [00:00<00:00, 392.88it/s]

56%|#####5 | : 55/99 [00:00<00:00, 406.80it/s]

70%|######9 | : 69/99 [00:00<00:00, 408.58it/s]

81%|######## | : 80/99 [00:00<00:00, 392.98it/s]

96%|#########5| : 95/99 [00:00<00:00, 401.45it/s]

100%|##########| : 99/99 [00:00<00:00, 402.52it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

11%|#1 | : 11/99 [00:00<00:00, 324.61it/s]

26%|##6 | : 26/99 [00:00<00:00, 385.72it/s]

41%|####1 | : 41/99 [00:00<00:00, 406.23it/s]

53%|#####2 | : 52/99 [00:00<00:00, 384.62it/s]

67%|######6 | : 66/99 [00:00<00:00, 391.18it/s]

82%|########1 | : 81/99 [00:00<00:00, 401.32it/s]

93%|#########2| : 92/99 [00:00<00:00, 388.90it/s]

100%|##########| : 99/99 [00:00<00:00, 392.15it/s]

100%|##########| : 99/99 [00:00<00:00, 391.39it/s]

Computing cluster p-values

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Done.

No baseline correction applied

Using a threshold of 1.724718

stat_fun(H1): min=-4.573067 max=3.687727

Running initial clustering

Found 85 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

10%|# | : 10/99 [00:00<00:00, 295.31it/s]

18%|#8 | : 18/99 [00:00<00:00, 265.15it/s]

29%|##9 | : 29/99 [00:00<00:00, 207.33it/s]

44%|####4 | : 44/99 [00:00<00:00, 257.19it/s]

60%|#####9 | : 59/99 [00:00<00:00, 291.20it/s]

71%|####### | : 70/99 [00:00<00:00, 296.62it/s]

86%|########5 | : 85/99 [00:00<00:00, 317.95it/s]

100%|##########| : 99/99 [00:00<00:00, 336.25it/s]

100%|##########| : 99/99 [00:00<00:00, 326.59it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

11%|#1 | : 11/99 [00:00<00:00, 324.52it/s]

26%|##6 | : 26/99 [00:00<00:00, 385.86it/s]

40%|#### | : 40/99 [00:00<00:00, 395.94it/s]

53%|#####2 | : 52/99 [00:00<00:00, 384.98it/s]

69%|######8 | : 68/99 [00:00<00:00, 404.68it/s]

82%|########1 | : 81/99 [00:00<00:00, 401.00it/s]

96%|#########5| : 95/99 [00:00<00:00, 403.34it/s]

100%|##########| : 99/99 [00:00<00:00, 402.65it/s]

Computing cluster p-values

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Done.

Using a threshold of -1.724718

stat_fun(H1): min=-4.573067 max=3.687727

Running initial clustering

Found 57 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

11%|#1 | : 11/99 [00:00<00:00, 321.49it/s]

25%|##5 | : 25/99 [00:00<00:00, 368.32it/s]

40%|#### | : 40/99 [00:00<00:00, 394.75it/s]

53%|#####2 | : 52/99 [00:00<00:00, 384.45it/s]

67%|######6 | : 66/99 [00:00<00:00, 390.67it/s]

81%|######## | : 80/99 [00:00<00:00, 395.15it/s]

92%|#########1| : 91/99 [00:00<00:00, 383.77it/s]

100%|##########| : 99/99 [00:00<00:00, 389.45it/s]

100%|##########| : 99/99 [00:00<00:00, 388.23it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

No baseline correction applied

Using a threshold of 1.724718

stat_fun(H1): min=-6.599131 max=3.329547

Running initial clustering

Found 64 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

10%|# | : 10/99 [00:00<00:00, 295.40it/s]

24%|##4 | : 24/99 [00:00<00:00, 356.32it/s]

38%|###8 | : 38/99 [00:00<00:00, 376.67it/s]

43%|####3 | : 43/99 [00:00<00:00, 315.15it/s]

59%|#####8 | : 58/99 [00:00<00:00, 343.61it/s]

74%|#######3 | : 73/99 [00:00<00:00, 362.58it/s]

85%|########4 | : 84/99 [00:00<00:00, 356.42it/s]

99%|#########8| : 98/99 [00:00<00:00, 365.08it/s]

100%|##########| : 99/99 [00:00<00:00, 365.59it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

Using a threshold of -1.724718

stat_fun(H1): min=-6.599131 max=3.329547

Running initial clustering

Found 64 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

10%|# | : 10/99 [00:00<00:00, 293.05it/s]

25%|##5 | : 25/99 [00:00<00:00, 369.30it/s]

39%|###9 | : 39/99 [00:00<00:00, 385.16it/s]

51%|##### | : 50/99 [00:00<00:00, 369.19it/s]

65%|######4 | : 64/99 [00:00<00:00, 378.80it/s]

80%|#######9 | : 79/99 [00:00<00:00, 391.14it/s]

90%|########9 | : 89/99 [00:00<00:00, 375.41it/s]

100%|##########| : 99/99 [00:00<00:00, 386.19it/s]

100%|##########| : 99/99 [00:00<00:00, 383.96it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

No baseline correction applied

Using a threshold of 1.713872

stat_fun(H1): min=-3.687815 max=3.369164

Running initial clustering

Found 66 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

11%|#1 | : 11/99 [00:00<00:00, 324.38it/s]

24%|##4 | : 24/99 [00:00<00:00, 355.26it/s]

36%|###6 | : 36/99 [00:00<00:00, 355.42it/s]

48%|####8 | : 48/99 [00:00<00:00, 355.44it/s]

63%|######2 | : 62/99 [00:00<00:00, 368.45it/s]

75%|#######4 | : 74/99 [00:00<00:00, 366.01it/s]

87%|########6 | : 86/99 [00:00<00:00, 364.22it/s]

100%|##########| : 99/99 [00:00<00:00, 371.64it/s]

100%|##########| : 99/99 [00:00<00:00, 369.41it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

Using a threshold of -1.713872

stat_fun(H1): min=-3.687815 max=3.369164

Running initial clustering

Found 75 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

10%|# | : 10/99 [00:00<00:00, 296.11it/s]

23%|##3 | : 23/99 [00:00<00:00, 340.98it/s]

38%|###8 | : 38/99 [00:00<00:00, 377.08it/s]

48%|####8 | : 48/99 [00:00<00:00, 355.36it/s]

63%|######2 | : 62/99 [00:00<00:00, 368.40it/s]

77%|#######6 | : 76/99 [00:00<00:00, 377.08it/s]

88%|########7 | : 87/99 [00:00<00:00, 368.52it/s]

100%|##########| : 99/99 [00:00<00:00, 374.09it/s]

100%|##########| : 99/99 [00:00<00:00, 372.09it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

No baseline correction applied

Using a threshold of 1.713872

stat_fun(H1): min=-5.046259 max=5.406477

Running initial clustering

Found 98 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

10%|# | : 10/99 [00:00<00:00, 294.99it/s]

24%|##4 | : 24/99 [00:00<00:00, 355.83it/s]

32%|###2 | : 32/99 [00:00<00:00, 313.98it/s]

35%|###5 | : 35/99 [00:00<00:00, 252.99it/s]

48%|####8 | : 48/99 [00:00<00:00, 281.87it/s]

64%|######3 | : 63/99 [00:00<00:00, 312.41it/s]

75%|#######4 | : 74/99 [00:00<00:00, 314.68it/s]

88%|########7 | : 87/99 [00:00<00:00, 324.90it/s]

100%|##########| : 99/99 [00:00<00:00, 337.82it/s]

100%|##########| : 99/99 [00:00<00:00, 331.79it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

10%|# | : 10/99 [00:00<00:00, 295.16it/s]

24%|##4 | : 24/99 [00:00<00:00, 356.16it/s]

38%|###8 | : 38/99 [00:00<00:00, 376.76it/s]

48%|####8 | : 48/99 [00:00<00:00, 355.00it/s]

63%|######2 | : 62/99 [00:00<00:00, 368.07it/s]

77%|#######6 | : 76/99 [00:00<00:00, 376.78it/s]

87%|########6 | : 86/99 [00:00<00:00, 363.58it/s]

100%|##########| : 99/99 [00:00<00:00, 373.28it/s]

100%|##########| : 99/99 [00:00<00:00, 371.39it/s]

Computing cluster p-values

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Done.

Using a threshold of -1.713872

stat_fun(H1): min=-5.046259 max=5.406477

Running initial clustering

Found 66 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

13%|#3 | : 13/99 [00:00<00:00, 382.85it/s]

23%|##3 | : 23/99 [00:00<00:00, 338.66it/s]

37%|###7 | : 37/99 [00:00<00:00, 365.07it/s]

52%|#####1 | : 51/99 [00:00<00:00, 378.58it/s]

62%|######1 | : 61/99 [00:00<00:00, 360.46it/s]

76%|#######5 | : 75/99 [00:00<00:00, 370.62it/s]

90%|########9 | : 89/99 [00:00<00:00, 377.95it/s]

100%|##########| : 99/99 [00:00<00:00, 368.75it/s]

100%|##########| : 99/99 [00:00<00:00, 368.37it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

No baseline correction applied

Using a threshold of 1.713872

stat_fun(H1): min=-5.964817 max=4.078953

Running initial clustering

Found 92 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

12%|#2 | : 12/99 [00:00<00:00, 354.35it/s]

23%|##3 | : 23/99 [00:00<00:00, 339.56it/s]

37%|###7 | : 37/99 [00:00<00:00, 365.72it/s]

51%|##### | : 50/99 [00:00<00:00, 371.10it/s]

62%|######1 | : 61/99 [00:00<00:00, 361.16it/s]

76%|#######5 | : 75/99 [00:00<00:00, 371.31it/s]

89%|########8 | : 88/99 [00:00<00:00, 373.68it/s]

100%|##########| : 99/99 [00:00<00:00, 369.62it/s]

100%|##########| : 99/99 [00:00<00:00, 368.65it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

14%|#4 | : 14/99 [00:00<00:00, 413.10it/s]

25%|##5 | : 25/99 [00:00<00:00, 368.78it/s]

37%|###7 | : 37/99 [00:00<00:00, 363.97it/s]

53%|#####2 | : 52/99 [00:00<00:00, 385.56it/s]

64%|######3 | : 63/99 [00:00<00:00, 372.38it/s]

77%|#######6 | : 76/99 [00:00<00:00, 374.71it/s]

92%|#########1| : 91/99 [00:00<00:00, 386.20it/s]

100%|##########| : 99/99 [00:00<00:00, 387.93it/s]

100%|##########| : 99/99 [00:00<00:00, 385.74it/s]

Computing cluster p-values

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Done.

Using a threshold of -1.713872

stat_fun(H1): min=-5.964817 max=4.078953

Running initial clustering

Found 52 clusters

Permuting 99 times...

0%| | : 0/99 [00:00<?, ?it/s]

13%|#3 | : 13/99 [00:00<00:00, 384.79it/s]

28%|##8 | : 28/99 [00:00<00:00, 415.27it/s]

38%|###8 | : 38/99 [00:00<00:00, 373.89it/s]

52%|#####1 | : 51/99 [00:00<00:00, 376.96it/s]

66%|######5 | : 65/99 [00:00<00:00, 385.22it/s]

76%|#######5 | : 75/99 [00:00<00:00, 368.61it/s]

90%|########9 | : 89/99 [00:00<00:00, 376.30it/s]

100%|##########| : 99/99 [00:00<00:00, 380.65it/s]

100%|##########| : 99/99 [00:00<00:00, 380.06it/s]

Computing cluster p-values

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Done.

No baseline correction applied
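The two thresholds reported in the output above (1.724718 and 1.713872) are consistent with the critical value of a one-tailed t distribution at p = 0.05 with n - 1 degrees of freedom. The sketch below assumes that this is how the default threshold is chosen and that the 45 epochs split into 21 and 24 per task; both points are inferences from the log rather than statements made by the example, and `default_threshold` is a hypothetical helper.

```python
from scipy import stats


def default_threshold(n_epochs, p=0.05):
    """One-tailed critical t value for a one-sample test (assumed rule)."""
    return stats.t.ppf(1 - p, df=n_epochs - 1)


# assumption: 21 epochs for one task and 24 for the other (21 + 24 = 45)
for n_epochs in (21, 24):
    print(n_epochs, round(default_threshold(n_epochs), 6))
```

For the negative-tail tests the log shows the same values with the sign flipped.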

# Authors: Clemens Brunner <clemens.brunner@gmail.com>
#
# License: BSD (3-clause)

import numpy as np
import matplotlib.pyplot as plt
import mne
from mne.datasets import eegbci
from mne.io import concatenate_raws, read_raw_edf
from mne.time_frequency import tfr_multitaper
from mne.stats import permutation_cluster_1samp_test as pcluster_test
from mne.viz.utils import center_cmap

# load and preprocess data ####################################################
subject = 1  # use data from subject 1
runs = [6, 10, 14]  # use only hand and feet motor imagery runs

fnames = eegbci.load_data(subject, runs)
raws = [read_raw_edf(f, preload=True) for f in fnames]
raw = concatenate_raws(raws)

raw.rename_channels(lambda x: x.strip('.'))  # remove dots from channel names

events, _ = mne.events_from_annotations(raw, event_id=dict(T1=2, T2=3))

picks = mne.pick_channels(raw.info["ch_names"], ["C3", "Cz", "C4"])

# epoch data ##################################################################
tmin, tmax = -1, 4  # define epochs around events (in s)
event_ids = dict(hands=2, feet=3)  # map event IDs to tasks

epochs = mne.Epochs(raw, events, event_ids, tmin - 0.5, tmax + 0.5,
                    picks=picks, baseline=None, preload=True)

# compute ERDS maps ###########################################################
freqs = np.arange(2, 36, 1)  # frequencies from 2-35 Hz
n_cycles = freqs  # use constant t/f resolution
vmin, vmax = -1, 1.5  # set min and max ERDS values in plot
baseline = [-1, 0]  # baseline interval (in s)
cmap = center_cmap(plt.cm.RdBu, vmin, vmax)  # zero maps to white
kwargs = dict(n_permutations=100, step_down_p=0.05, seed=1,
              buffer_size=None, out_type='mask')  # for cluster test

# Run TF decomposition over all epochs
tfr = tfr_multitaper(epochs, freqs=freqs, n_cycles=n_cycles,
                     use_fft=True, return_itc=False, average=False,
                     decim=2)
tfr.crop(tmin, tmax)
tfr.apply_baseline(baseline, mode="percent")
for event in event_ids:
    # select desired epochs for visualization
    tfr_ev = tfr[event]
    fig, axes = plt.subplots(1, 4, figsize=(12, 4),
                             gridspec_kw={"width_ratios": [10, 10, 10, 1]})
    for ch, ax in enumerate(axes[:-1]):  # for each channel
        # positive clusters
        _, c1, p1, _ = pcluster_test(tfr_ev.data[:, ch, ...], tail=1, **kwargs)
        # negative clusters
        _, c2, p2, _ = pcluster_test(tfr_ev.data[:, ch, ...], tail=-1,
                                     **kwargs)

        # note that we keep clusters with p <= 0.05 from the combined clusters
        # of two independent tests; in this example, we do not correct for
        # these two comparisons
        c = np.stack(c1 + c2, axis=2)  # combined clusters
        p = np.concatenate((p1, p2))  # combined p-values
        mask = c[..., p <= 0.05].any(axis=-1)

        # plot TFR (ERDS map with masking)
        tfr_ev.average().plot([ch], vmin=vmin, vmax=vmax, cmap=(cmap, False),
                              axes=ax, colorbar=False, show=False, mask=mask,
                              mask_style="mask")

        ax.set_title(epochs.ch_names[ch], fontsize=10)
        ax.axvline(0, linewidth=1, color="black", linestyle=":")  # event
        if ch != 0:
            ax.set_ylabel("")
            ax.set_yticklabels("")
    fig.colorbar(axes[0].images[-1], cax=axes[-1])
    fig.suptitle("ERDS ({})".format(event))
    fig.show()
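The mask construction inside the channel loop can be illustrated in isolation. The sketch below replaces the output of the two cluster tests with small hand-made boolean cluster masks and p-values (all values are made up for illustration) and then repeats the same `np.stack`, `np.concatenate` and `.any` steps.

```python
import numpy as np

# toy cluster masks on a 2 x 3 (frequency x time) grid; values are made up
c1 = [np.array([[True, False, False],
                [False, False, False]])]   # one "positive" cluster
c2 = [np.array([[False, False, False],
                [False, True, True]]),
      np.array([[False, False, True],
                [False, False, False]])]   # two "negative" clusters
p1 = np.array([0.01])        # p-value of the positive cluster
p2 = np.array([0.03, 0.40])  # p-values of the negative clusters

c = np.stack(c1 + c2, axis=2)  # shape (2, 3, 3): one slice per cluster
p = np.concatenate((p1, p2))   # shape (3,): one p-value per cluster

# keep a grid point if it belongs to any cluster with p <= 0.05
mask = c[..., p <= 0.05].any(axis=-1)
print(mask)
```

Only the first two clusters pass the 0.05 criterion, so the third cluster (p = 0.40) does not contribute to the mask. As the comment in the script points out, the positive and negative tests are run independently and their results are merged without a further correction for these two comparisons.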

Total running time of the script: ( 0 minutes 7.008 seconds)

Estimated memory usage: 8 MB