Compute RSA between RDMs

This example showcases the most basic version of RSA: computing the similarity between two RDMs. Then we continue with computing RSA between many RDMs efficiently.

# sphinx_gallery_thumbnail_number=2

# Import required packages
import mne
import pandas as pd
from matplotlib import pyplot as plt

import mne_rsa

MNE-Python contains a built-in data loader for the kiloword dataset, which is used here as an example dataset. The first part of this example only needs the words shown during the experiment, which are in the metadata. If that is all you need, you can pass preload=False to prevent MNE-Python from loading the EEG data, which is a nice speed gain. Here we load the data in full, because the EEG data is used at the end of this example.

data_path = mne.datasets.kiloword.data_path(verbose=True)

epochs = mne.read_epochs(data_path / "kword_metadata-epo.fif")

# Show the metadata of 10 random epochs

epochs.metadata.sample(10)

Reading /home/runner/mne_data/MNE-kiloword-data/kword_metadata-epo.fif ...

Isotrak not found

Found the data of interest:

t = -100.00 ... 920.00 ms

0 CTF compensation matrices available

Adding metadata with 8 columns

960 matching events found

No baseline correction applied

0 projection items activated

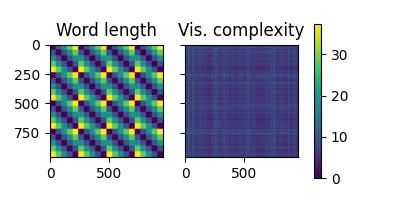

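Compute RDMs based on word length and visual complexity. In the code below, metadata refers to epochs.metadata, and rdm1 and rdm2 refer to the two RDMs computed in this step.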

<Figure size 400x200 with 3 Axes>
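To make the idea of an RDM over a single stimulus property concrete, here is a minimal NumPy sketch. It assumes a Euclidean metric on scalar values (so each entry is just an absolute difference) and stores one value per unordered pair of items. The helper `euclidean_rdm` and the toy word lengths are hypothetical and are not part of mne_rsa or the kiloword data.

```python
import numpy as np


def euclidean_rdm(values):
    """Return one distance per unordered pair of items (hypothetical helper)."""
    values = np.asarray(values, dtype=float)
    i, j = np.triu_indices(len(values), k=1)  # all index pairs with i < j
    # For scalar properties, the Euclidean distance is the absolute difference
    return np.abs(values[i] - values[j])


# Made-up word lengths for four items, for illustration only
word_lengths = [3, 5, 5, 8]
toy_rdm = euclidean_rdm(word_lengths)
print(toy_rdm)
```

With four items there are six pairs, so the sketch yields six dissimilarities. Items with identical property values get a dissimilarity of zero.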

Perform RSA between the two RDMs using Spearman correlation

rsa_result = mne_rsa.rsa(rdm1, rdm2, metric="spearman")

print("RSA score:", rsa_result)

RSA score: 0.026439883289118636
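As a rough picture of what a Spearman-based RSA score measures, the following NumPy sketch ranks the entries of two RDMs and then takes the Pearson correlation of the ranks. It is an illustration under the assumption that both RDMs are flat arrays with one value per item pair. The helpers `average_ranks` and `spearman_rsa` and the toy RDMs are hypothetical, and this is not how mne_rsa.rsa() is implemented internally.

```python
import numpy as np


def average_ranks(x):
    """Rank values from 1 to n, giving tied values their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    last = np.cumsum(counts)  # highest rank within each group of ties
    first = last - counts + 1  # lowest rank within each group of ties
    return ((first + last) / 2.0)[inverse]


def spearman_rsa(rdm_a, rdm_b):
    """Spearman correlation between two flat RDMs (hypothetical helper)."""
    ranks_a = average_ranks(np.asarray(rdm_a, dtype=float))
    ranks_b = average_ranks(np.asarray(rdm_b, dtype=float))
    return np.corrcoef(ranks_a, ranks_b)[0, 1]


# Two made-up RDMs over the same six item pairs
toy_rdm_a = np.array([2.0, 2.0, 5.0, 0.0, 3.0, 3.0])
toy_rdm_b = np.array([0.1, 0.4, 0.9, 0.2, 0.5, 0.7])
score = spearman_rsa(toy_rdm_a, toy_rdm_b)
print("toy RSA score:", score)
```

Because only the rank order matters, any monotonically increasing rescaling of one RDM leaves the score unchanged.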

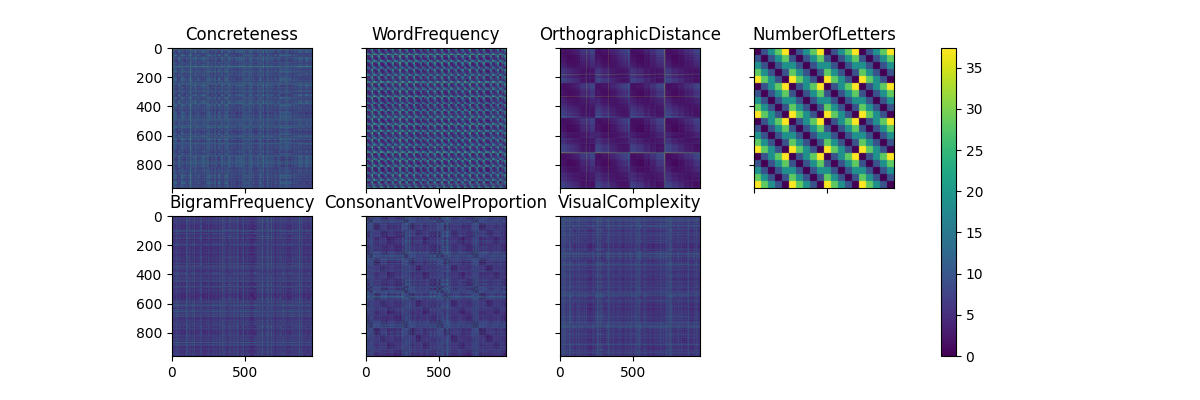

We can compute RSA between multiple RDMs by passing lists to the mne_rsa.rsa() function.

# Create RDMs for each stimulus property

columns = metadata.columns[1:] # Skip the first column: WORD

rdms = [mne_rsa.compute_rdm(metadata[col], metric="euclidean") for col in columns]

# Plot the RDMs

fig = mne_rsa.plot_rdms(rdms, names=columns, n_rows=2)

fig.set_size_inches(12, 4)

# Compute RSA between the first two RDMs (Concreteness and WordFrequency) and the

# others.

rsa_results = mne_rsa.rsa(rdms[:2], rdms[2:], metric="spearman")

# Pack the result into a Pandas DataFrame for easy viewing

print(pd.DataFrame(rsa_results, index=columns[:2], columns=columns[2:]))

               OrthographicDistance  ...  VisualComplexity
Concreteness               0.031064  ...          0.004263
WordFrequency              0.058385  ...         -0.009620

[2 rows x 5 columns]
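The shape of the result above (one row per RDM in the first list, one column per RDM in the second) can be illustrated with a small NumPy sketch. It assumes flat RDMs of equal length and uses random toy values. The helpers `average_ranks` and `rsa_matrix` are hypothetical and only mimic the layout of the output, not the optimized implementation in mne_rsa.

```python
import numpy as np


def average_ranks(x):
    """Rank values from 1 to n, giving tied values their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    last = np.cumsum(counts)
    first = last - counts + 1
    return ((first + last) / 2.0)[inverse]


def rsa_matrix(data_rdms, model_rdms):
    """Spearman score for every (data RDM, model RDM) pair (hypothetical helper)."""
    data_ranks = [average_ranks(np.asarray(r, dtype=float)) for r in data_rdms]
    model_ranks = [average_ranks(np.asarray(r, dtype=float)) for r in model_rdms]
    # Rows follow data_rdms, columns follow model_rdms
    return np.array(
        [[np.corrcoef(d, m)[0, 1] for m in model_ranks] for d in data_ranks]
    )


rng = np.random.default_rng(0)
data_rdms = [rng.random(6) for _ in range(2)]  # two made-up "data" RDMs
# The first "model" RDM is a rescaled copy of the first data RDM
model_rdms = [2 * data_rdms[0] + 1, rng.random(6), rng.random(6)]
scores = rsa_matrix(data_rdms, model_rdms)
print(scores.shape)
```

In this toy setup the top-left entry is a perfect match, since the first model RDM preserves the rank order of the first data RDM.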

What if we have many RDMs? The mne_rsa.rsa() function is optimized for the case where the first parameter (the “data” RDMs) is a large list of RDMs and the second parameter (the “model” RDMs) is a smaller list. To save memory, you can also pass generators instead of lists.

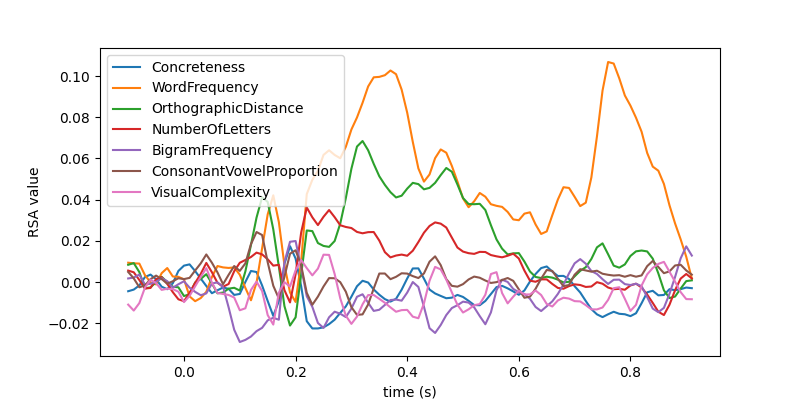

Let’s create a generator that creates an RDM for each time point in the EEG data and compute the RSA between those RDMs and all the “model” RDMs we computed above. This is a basic example of a “searchlight”. Other examples show how to use the mne_rsa.searchlight generator to build more advanced searchlights. However, since this is such a simple case, it is educational to construct the generator manually.

The RSA computation will take some time, so we pass a few extra parameters to mne_rsa.rsa() to make it more manageable. First, verbose=True enables a progress bar. However, since we are using a generator, the progress bar cannot automatically infer how many RDMs there will be, so we provide this information explicitly using the n_data_rdms parameter. Finally, depending on how many CPUs you have on your system, consider increasing the n_jobs parameter to parallelize the computation over multiple CPUs.

epochs.resample(100) # Downsample to speed things up for this example

eeg_data = epochs.get_data()

n_trials, n_sensors, n_times = eeg_data.shape

def generate_eeg_rdms():
    """Generate RDMs for each time sample."""
    for i in range(n_times):
        yield mne_rsa.compute_rdm(eeg_data[:, :, i], metric="correlation")


rsa_results = mne_rsa.rsa(
    generate_eeg_rdms(),
    rdms,
    metric="spearman",
    verbose=True,
    n_data_rdms=n_times,
    n_jobs=1,  # Use this to specify the number of CPU cores to use.
)

# Plot the RSA values over time using standard matplotlib commands

plt.figure(figsize=(8, 4))

plt.plot(epochs.times, rsa_results)

plt.xlabel("time (s)")

plt.ylabel("RSA value")

plt.legend(columns)

100%|██████████| 102/102 [00:08<00:00, 11.81RDM/s]

<matplotlib.legend.Legend object at 0x7f378d71e870>
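The generator pattern used above, and the reason the number of RDMs has to be stated explicitly, can be sketched with NumPy alone. The example assumes a toy array laid out as items x sensors x time samples and a correlation distance (1 minus the Pearson correlation between the sensor patterns of two items). The function `generate_toy_rdms` and the random data are hypothetical stand-ins for the EEG data and mne_rsa.compute_rdm().

```python
import numpy as np


def generate_toy_rdms(data):
    """Yield one flat correlation-distance RDM per time sample, on demand."""
    n_items, _, n_times = data.shape
    i, j = np.triu_indices(n_items, k=1)  # all item pairs with i < j
    for t in range(n_times):
        pattern = data[:, :, t]  # items x sensors at this time sample
        # np.corrcoef treats each row (item) as one variable
        yield (1.0 - np.corrcoef(pattern))[i, j]


rng = np.random.default_rng(0)
toy_data = rng.normal(size=(5, 6, 4))  # made-up: 5 items, 6 sensors, 4 samples
rdm_stream = generate_toy_rdms(toy_data)

# A generator has no length, so a consumer cannot know the total in advance
print(hasattr(rdm_stream, "__len__"))

n_consumed = 0
for rdm in rdm_stream:
    n_consumed += 1  # only one RDM is held in memory at a time
    last_rdm = rdm
print(n_consumed, last_rdm.shape)
```

Each RDM is computed only when the loop asks for it, which is what keeps memory use low when there are many time samples.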

Total running time of the script: (0 minutes 11.993 seconds)