Decoding (MVPA)¶

Design philosophy¶

Decoding (a.k.a. MVPA) in MNE largely follows the machine

learning API of the scikit-learn package.

Each estimator implements fit, transform, fit_transform, and

(optionally) inverse_transform methods. For more details on this design,

visit scikit-learn. For additional theoretical insights into the decoding

framework in MNE, see [1].

For ease of comprehension, we will denote instances of a class using the same name as the class, but in lowercase instead of CamelCase.

Let’s start by loading data for a simple two-class problem:

import numpy as np

import matplotlib.pyplot as plt

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

import mne

from mne.datasets import sample

from mne.decoding import (SlidingEstimator, GeneralizingEstimator, Scaler,

cross_val_multiscore, LinearModel, get_coef,

Vectorizer, CSP)

data_path = sample.data_path()

subjects_dir = data_path + '/subjects'

raw_fname = data_path + '/MEG/sample/sample_audvis_raw.fif'

tmin, tmax = -0.200, 0.500

event_id = {'Auditory/Left': 1, 'Visual/Left': 3} # just use two

raw = mne.io.read_raw_fif(raw_fname, preload=True)

# The subsequent decoding analyses only capture evoked responses, so we can

# low-pass the MEG data. Usually a value more like 40 Hz would be used,

# but here low-pass at 20 so we can more heavily decimate, and allow

# the example to run faster. The 2 Hz high-pass helps improve CSP.

raw.filter(2, 20)

events = mne.find_events(raw, 'STI 014')

# Set up pick list: EEG + MEG - bad channels (modify to your needs)

raw.info['bads'] += ['MEG 2443', 'EEG 053'] # bads + 2 more

# Read epochs

epochs = mne.Epochs(raw, events, event_id, tmin, tmax, proj=True,

picks=('grad', 'eog'), baseline=(None, 0.), preload=True,

reject=dict(grad=4000e-13, eog=150e-6), decim=10)

epochs.pick_types(meg=True, exclude='bads') # remove stim and EOG

del raw

X = epochs.get_data() # MEG signals: n_epochs, n_meg_channels, n_times

y = epochs.events[:, 2] # target: auditory left vs visual left

Out:

Opening raw data file /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis_raw.fif...

Read a total of 3 projection items:

PCA-v1 (1 x 102) idle

PCA-v2 (1 x 102) idle

PCA-v3 (1 x 102) idle

Range : 25800 ... 192599 = 42.956 ... 320.670 secs

Ready.

Reading 0 ... 166799 = 0.000 ... 277.714 secs...

Filtering raw data in 1 contiguous segment

Setting up band-pass filter from 2 - 20 Hz

FIR filter parameters

---------------------

Designing a one-pass, zero-phase, non-causal bandpass filter:

- Windowed time-domain design (firwin) method

- Hamming window with 0.0194 passband ripple and 53 dB stopband attenuation

- Lower passband edge: 2.00

- Lower transition bandwidth: 2.00 Hz (-6 dB cutoff frequency: 1.00 Hz)

- Upper passband edge: 20.00 Hz

- Upper transition bandwidth: 5.00 Hz (-6 dB cutoff frequency: 22.50 Hz)

- Filter length: 993 samples (1.653 sec)

320 events found

Event IDs: [ 1 2 3 4 5 32]

Not setting metadata

145 matching events found

Setting baseline interval to [-0.19979521315838786, 0.0] sec

Applying baseline correction (mode: mean)

3 projection items activated

Loading data for 145 events and 421 original time points ...

Rejecting epoch based on EOG : ['EOG 061']

22 bad epochs dropped

Removing projector <Projection | PCA-v1, active : True, n_channels : 102>

Removing projector <Projection | PCA-v2, active : True, n_channels : 102>

Removing projector <Projection | PCA-v3, active : True, n_channels : 102>

Transformation classes¶

Scaler¶

The mne.decoding.Scaler will standardize the data based on channel

scales. In the simplest modes scalings=None or scalings=dict(...),

each data channel type (e.g., mag, grad, eeg) is treated separately and

scaled by a constant. This is the approach used by e.g.,

mne.compute_covariance() to standardize channel scales.

If scalings='mean' or scalings='median', each channel is scaled using

empirical measures. Each channel is scaled independently by the mean and

standard deviation, or median and interquartile range, respectively, across

all epochs and time points during fit

(during training). The transform() method is

called to transform data (training or test set) by scaling all time points

and epochs on a channel-by-channel basis. To perform both the fit and

transform operations in a single call, the

fit_transform() method may be used. To invert the

transform, inverse_transform() can be used. For

scalings='median', scikit-learn version 0.17+ is required.

Note

Using this class is different from directly applying

sklearn.preprocessing.StandardScaler or

sklearn.preprocessing.RobustScaler offered by

scikit-learn. These scale each classification feature, e.g.

each time point for each channel, with mean and standard

deviation computed across epochs, whereas

mne.decoding.Scaler scales each channel using mean and

standard deviation computed across all of its time points

and epochs.
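The difference between the two scaling strategies can be sketched with plain NumPy. This is only an illustration of the idea on synthetic data, not the MNE or scikit-learn implementation; the array sizes, offsets, and variable names are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_epochs, n_channels, n_times = 20, 4, 50

# Synthetic epochs with a different offset and scale on every channel
offsets = np.array([0.0, 1.0, -2.0, 5.0])[None, :, None]
scales = np.array([1.0, 10.0, 0.1, 3.0])[None, :, None]
X = offsets + scales * rng.standard_normal((n_epochs, n_channels, n_times))

# Channel-wise scaling: one mean and one standard deviation per channel,
# pooled over all epochs and all time points of that channel
ch_mean = X.mean(axis=(0, 2), keepdims=True)  # shape (1, n_channels, 1)
ch_std = X.std(axis=(0, 2), keepdims=True)
X_channelwise = (X - ch_mean) / ch_std

# Feature-wise scaling: one mean and one standard deviation per
# (channel, time point) pair, computed across epochs only
ft_mean = X.mean(axis=0, keepdims=True)  # shape (1, n_channels, n_times)
ft_std = X.std(axis=0, keepdims=True)
X_featurewise = (X - ft_mean) / ft_std

print(ch_mean.shape, ft_mean.shape)
```

Channel-wise scaling keeps the time course within each channel intact up to a constant shift and gain, whereas feature-wise scaling rescales every time point separately.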

Vectorizer¶

The scikit-learn API provides functionality to chain transformers and estimators

by using sklearn.pipeline.Pipeline. We can construct decoding

pipelines and perform cross-validation and grid-search. However, scikit-learn

transformers and estimators generally expect 2D data

(n_samples * n_features), whereas MNE transformers typically output data

with a higher dimensionality

(e.g. n_samples * n_channels * n_frequencies * n_times). A Vectorizer

therefore needs to be applied between the MNE and the scikit-learn steps

like:

# Uses all MEG sensors and time points as separate classification

# features, so the resulting filters used are spatio-temporal

clf = make_pipeline(Scaler(epochs.info),

Vectorizer(),

LogisticRegression(solver='lbfgs'))

scores = cross_val_multiscore(clf, X, y, cv=5, n_jobs=1)

# Mean scores across cross-validation splits

score = np.mean(scores, axis=0)

print('Spatio-temporal: %0.1f%%' % (100 * score,))

Out:

Spatio-temporal: 99.2%
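The reshaping step that sits between the MNE and scikit-learn stages can be pictured with a small NumPy sketch. It only mimics the flattening idea on a toy array and is not the Vectorizer class itself; the array sizes are arbitrary assumptions.

```python
import numpy as np

n_epochs, n_channels, n_times = 6, 3, 5
X = np.arange(n_epochs * n_channels * n_times, dtype=float)
X = X.reshape(n_epochs, n_channels, n_times)

# Flatten every epoch into one feature vector (channels x time points)
X_2d = X.reshape(n_epochs, -1)

# The original shape can be restored afterwards, e.g. to map classifier
# weights back onto channels and time points
X_back = X_2d.reshape(n_epochs, n_channels, n_times)

print(X_2d.shape)  # (6, 15)
```

Each row of the 2D array holds all channels and time points of one epoch, which is why the resulting classifier weights are spatio-temporal.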

PSDEstimator¶

The mne.decoding.PSDEstimator

computes the power spectral density (PSD) using the multitaper

method. It takes a 3D array as input, converts it into 2D and computes the

PSD.

FilterEstimator¶

The mne.decoding.FilterEstimator filters the 3D epochs data.

Spatial filters¶

Just like temporal filters, spatial filters provide weights to modify the data along the sensor dimension. They are popular in the BCI community because of their simplicity and ability to distinguish spatially-separated neural activity.

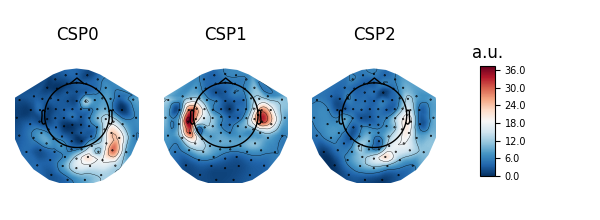

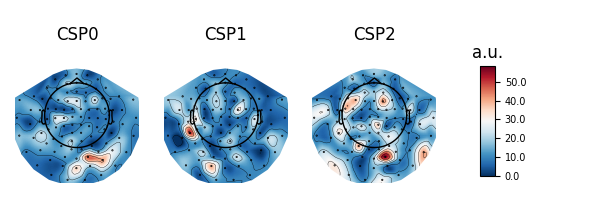

Common spatial pattern¶

mne.decoding.CSP is a technique to analyze multichannel data based

on recordings from two classes [2] (see also

https://en.wikipedia.org/wiki/Common_spatial_pattern).

Let \(X \in R^{C\times T}\) be a segment of data with \(C\) channels and \(T\) time points. The data at a single time point is denoted by \(x(t)\) such that \(X=[x(t), x(t+1), ..., x(t+T-1)]\). Common spatial pattern (CSP) finds a decomposition that projects the signal in the original sensor space to CSP space using the following transformation:

\[x_{CSP}(t) = W^{T}x(t) \tag{1}\]

where each column of \(W \in R^{C\times C}\) is a spatial filter and each row of \(x_{CSP}\) is a CSP component. The matrix \(W\) is also called the de-mixing matrix in other contexts. Let \(\Sigma^{+} \in R^{C\times C}\) and \(\Sigma^{-} \in R^{C\times C}\) be the estimates of the covariance matrices of the two conditions. CSP analysis is given by the simultaneous diagonalization of the two covariance matrices

\[W^{T}\Sigma^{+}W = \lambda^{+} \tag{2}\]

\[W^{T}\Sigma^{-}W = \lambda^{-} \tag{3}\]

where \(\lambda^{C}\) is a diagonal matrix whose entries are the eigenvalues of the following generalized eigenvalue problem

\[\Sigma^{+}w = \lambda \Sigma^{-}w \tag{4}\]

Large entries in the diagonal matrix correspond to a spatial filter which gives high variance in one class but low variance in the other. Thus, the filter facilitates discrimination between the two classes.
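The simultaneous diagonalization described above can be checked numerically. The following is a minimal NumPy sketch on synthetic data, not the code used by mne.decoding.CSP; the mixing matrix, source variances, and the Cholesky-based solver are all choices made for this illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
n_channels, n_times = 4, 2000

# Hypothetical mixing matrix shared by both classes
A = rng.standard_normal((n_channels, n_channels))
# The classes differ only in the variance of the underlying sources
std_plus = np.array([3.0, 1.0, 1.0, 0.3])[:, None]
std_minus = np.array([0.3, 1.0, 1.0, 3.0])[:, None]
X_plus = A @ (std_plus * rng.standard_normal((n_channels, n_times)))
X_minus = A @ (std_minus * rng.standard_normal((n_channels, n_times)))

# Covariance estimates of the two conditions
cov_plus = np.cov(X_plus)
cov_minus = np.cov(X_minus)

# Solve cov_plus @ w = lambda * cov_minus @ w by whitening with the
# Cholesky factor of cov_minus and solving a symmetric eigenproblem
L = np.linalg.cholesky(cov_minus)
L_inv = np.linalg.inv(L)
eigvals, V = np.linalg.eigh(L_inv @ cov_plus @ L_inv.T)
W = L_inv.T @ V  # columns are spatial filters

# Both covariance matrices become diagonal in CSP space
lambda_plus = W.T @ cov_plus @ W
lambda_minus = W.T @ cov_minus @ W

# Project one segment to CSP space: each row is a CSP component
X_csp = W.T @ X_plus
print(np.round(eigvals, 2))
```

With this normalization the second covariance matrix maps to the identity, so each eigenvalue is directly the ratio of variances between the two classes for the corresponding filter. The filters with the largest and smallest eigenvalues are the most discriminative ones.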

Note

The winning entry of the Grasp-and-lift EEG competition in Kaggle used

the CSP implementation in MNE and was featured as

a script of the week.

We can use CSP with these data with:

csp = CSP(n_components=3, norm_trace=False)

clf_csp = make_pipeline(csp, LinearModel(LogisticRegression(solver='lbfgs')))

scores = cross_val_multiscore(clf_csp, X, y, cv=5, n_jobs=1)

print('CSP: %0.1f%%' % (100 * scores.mean(),))

Out:

Computing rank from data with rank=None

Using tolerance 4.4e-11 (2.2e-16 eps * 203 dim * 9.7e+02 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 5.2e-11 (2.2e-16 eps * 203 dim * 1.1e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 4.2e-11 (2.2e-16 eps * 203 dim * 9.3e+02 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 5.2e-11 (2.2e-16 eps * 203 dim * 1.2e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 4.2e-11 (2.2e-16 eps * 203 dim * 9.4e+02 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 5.2e-11 (2.2e-16 eps * 203 dim * 1.1e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 4.2e-11 (2.2e-16 eps * 203 dim * 9.4e+02 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 5e-11 (2.2e-16 eps * 203 dim * 1.1e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 4.2e-11 (2.2e-16 eps * 203 dim * 9.3e+02 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 5.2e-11 (2.2e-16 eps * 203 dim * 1.1e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

CSP: 87.0%

Source power comodulation (SPoC)¶

Source Power Comodulation (mne.decoding.SPoC)

[3] identifies the composition of

orthogonal spatial filters that maximally correlate with a continuous target.

SPoC can be seen as an extension of the CSP where the target is driven by a continuous variable rather than a discrete variable. Typical applications include extraction of motor patterns using EMG power or audio patterns using sound envelope.

xDAWN¶

mne.preprocessing.Xdawn is a spatial filtering method designed to

improve the signal to signal + noise ratio (SSNR) of the ERP responses

[4]. Xdawn was originally

designed for P300 evoked potential by enhancing the target response with

respect to the non-target response. The implementation in MNE-Python is a

generalization to any type of ERP.

Effect-matched spatial filtering¶

The result of mne.decoding.EMS is a spatial filter at each time

point and a corresponding time course [5].

Intuitively, the result gives the similarity between the filter at

each time point and the data vector (sensors) at that time point.

Patterns vs. filters¶

When interpreting the components of the CSP (or spatial filters in general), it is often more intuitive to think about how \(x(t)\) is composed of the different CSP components \(x_{CSP}(t)\). In other words, we can rewrite Equation (1) as follows:

\[x(t) = (W^{-1})^{T}x_{CSP}(t) \tag{5}\]

The columns of the matrix \((W^{-1})^T\) are called spatial patterns. This is also called the mixing matrix. The example "Linear classifier on sensor data with plot patterns and filters" discusses the difference between patterns and filters.

These can be plotted with:

# Fit CSP on full data and plot

csp.fit(X, y)

csp.plot_patterns(epochs.info)

csp.plot_filters(epochs.info, scalings=1e-9)

Out:

Computing rank from data with rank=None

Using tolerance 4.8e-11 (2.2e-16 eps * 203 dim * 1.1e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Computing rank from data with rank=None

Using tolerance 5.7e-11 (2.2e-16 eps * 203 dim * 1.3e+03 max singular value)

Estimated rank (mag): 203

MAG: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

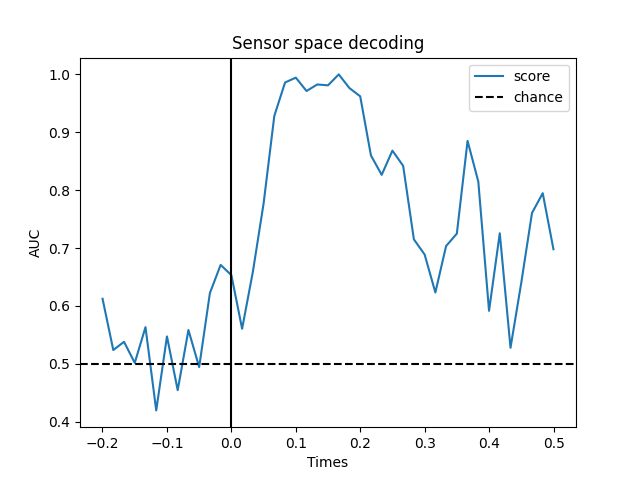

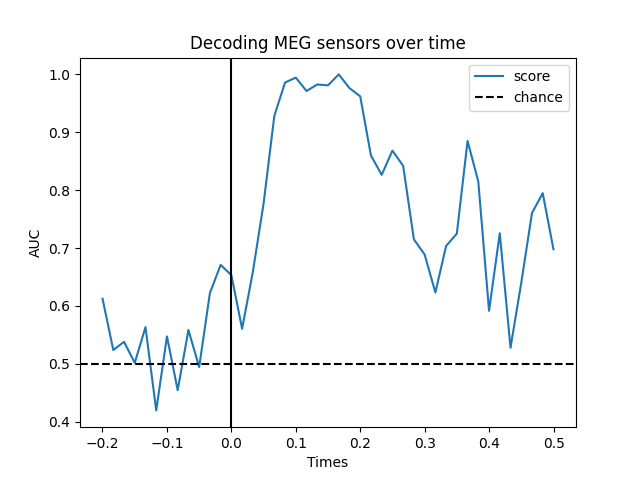
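The relation between filters and patterns discussed in this section can also be verified with a few lines of NumPy. This is a sketch under the assumption of a square, invertible filter matrix; a random matrix stands in for fitted CSP filters, and all names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
n_channels, n_times = 5, 100

# Any invertible matrix of spatial filters (one filter per column);
# a random matrix stands in for the fitted CSP filters here
W = rng.standard_normal((n_channels, n_channels))
X = rng.standard_normal((n_channels, n_times))

# Forward step: sensor space -> component space
X_csp = W.T @ X

# Spatial patterns are the columns of (W^-1)^T
patterns = np.linalg.inv(W).T

# Backward step: the patterns mix the components back into sensor space
X_rec = patterns @ X_csp
print(np.allclose(X_rec, X))
```

Filters and patterns coincide only when the filter matrix is orthogonal, which is generally not the case for CSP. This is why the two topographies plotted above can look quite different.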

Decoding over time¶

This strategy consists in fitting a multivariate predictive model on each

time instant and evaluating its performance at the same instant on new

epochs. The mne.decoding.SlidingEstimator will take as input a

pair of features \(X\) and targets \(y\), where \(X\) has

more than 2 dimensions. For decoding over time the data \(X\)

is the epochs data of shape n_epochs x n_channels x n_times. As the

last dimension of \(X\) is the time, an estimator will be fit

on every time instant.

This approach is analogous to searchlight-based approaches in fMRI, except that here we are interested in when one can discriminate experimental conditions, and therefore in when the effect of interest happens.

When working with linear models as estimators, this approach boils down to estimating a discriminative spatial filter for each time instant.
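The principle of fitting one model per time instant can be sketched without MNE. The example below uses synthetic data and a nearest-class-mean classifier written in NumPy as a stand-in for a scikit-learn estimator; the data, the single train/test split, and the helper functions are assumptions made for this illustration, not what SlidingEstimator does internally.

```python
import numpy as np

rng = np.random.default_rng(0)
n_epochs, n_channels, n_times = 80, 6, 10
y = np.repeat([0, 1], n_epochs // 2)

# Synthetic epochs: the two classes differ only at time points 4 to 6
X = rng.standard_normal((n_epochs, n_channels, n_times))
X[y == 1, :, 4:7] += 1.5

# Interleaved split so that both classes appear in train and test sets
train = np.arange(n_epochs) % 2 == 0
test = ~train


def fit_class_means(X_2d, labels):
    """Return the mean feature vector of class 0 and of class 1."""
    return X_2d[labels == 0].mean(axis=0), X_2d[labels == 1].mean(axis=0)


def predict(X_2d, means):
    """Assign each sample to the class with the closest mean."""
    d0 = np.linalg.norm(X_2d - means[0], axis=1)
    d1 = np.linalg.norm(X_2d - means[1], axis=1)
    return (d1 < d0).astype(int)


# One independent model per time instant, scored at that same instant
scores = np.empty(n_times)
for t in range(n_times):
    means = fit_class_means(X[train, :, t], y[train])
    scores[t] = np.mean(predict(X[test, :, t], means) == y[test])

print(np.round(scores, 2))
```

Accuracy should stay near chance at time points without a class difference and rise where the difference was injected, which is the kind of time course that decoding over time is meant to reveal.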

Temporal decoding¶

We’ll use logistic regression for binary classification as our machine learning model.

# We will train the classifier on all left visual vs auditory trials on MEG

clf = make_pipeline(StandardScaler(), LogisticRegression(solver='lbfgs'))

time_decod = SlidingEstimator(clf, n_jobs=1, scoring='roc_auc', verbose=True)

scores = cross_val_multiscore(time_decod, X, y, cv=5, n_jobs=1)

# Mean scores across cross-validation splits

scores = np.mean(scores, axis=0)

# Plot

fig, ax = plt.subplots()

ax.plot(epochs.times, scores, label='score')

ax.axhline(.5, color='k', linestyle='--', label='chance')

ax.set_xlabel('Times')

ax.set_ylabel('AUC') # Area Under the Curve

ax.legend()

ax.axvline(.0, color='k', linestyle='-')

ax.set_title('Sensor space decoding')

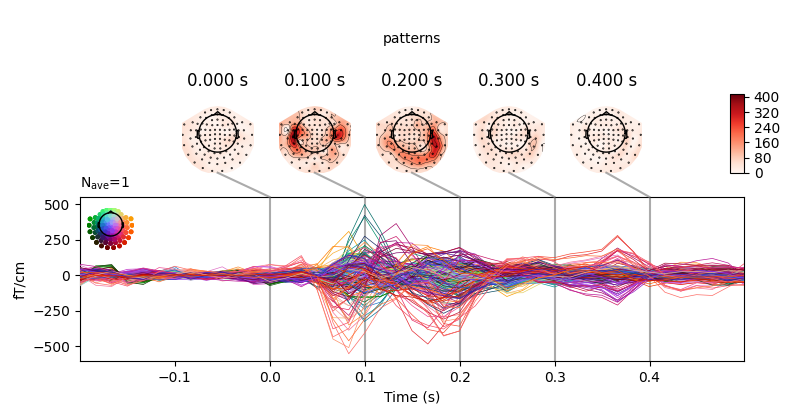

You can retrieve the spatial filters and spatial patterns if you explicitly use a LinearModel:

clf = make_pipeline(StandardScaler(),

LinearModel(LogisticRegression(solver='lbfgs')))

time_decod = SlidingEstimator(clf, n_jobs=1, scoring='roc_auc', verbose=True)

time_decod.fit(X, y)

coef = get_coef(time_decod, 'patterns_', inverse_transform=True)

evoked_time_gen = mne.EvokedArray(coef, epochs.info, tmin=epochs.times[0])

joint_kwargs = dict(ts_args=dict(time_unit='s'),

topomap_args=dict(time_unit='s'))

evoked_time_gen.plot_joint(times=np.arange(0., .500, .100), title='patterns',

**joint_kwargs)

Out:

No projector specified for this dataset. Please consider the method self.add_proj.

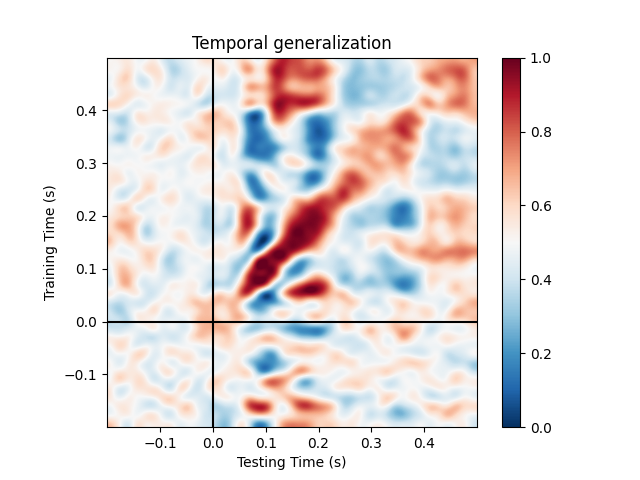

Temporal generalization¶

Temporal generalization is an extension of the decoding over time approach. It consists in evaluating whether the model estimated at a particular time instant accurately predicts any other time instant. It is analogous to transferring a trained model to a distinct learning problem, where the problems correspond to decoding the patterns of brain activity recorded at distinct time instants.

The object to use for temporal generalization is

mne.decoding.GeneralizingEstimator. It expects as input \(X\)

and \(y\) (similarly to SlidingEstimator) but

generates predictions from each model for all time instants. The class

GeneralizingEstimator is generic and will treat the

last dimension as the one to be used for generalization testing. For

convenience, here, we refer to it as different tasks. If \(X\)

corresponds to epochs data then the last dimension is time.
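The train-at-one-time, test-at-all-times logic can be written out as a double loop. This is a NumPy sketch on synthetic data with a nearest-class-mean classifier standing in for the scikit-learn pipeline; it uses a single train/test split instead of cross-validation, and none of it reflects the internals of GeneralizingEstimator.

```python
import numpy as np

rng = np.random.default_rng(0)
n_epochs, n_channels, n_times = 80, 6, 8
y = np.repeat([0, 1], n_epochs // 2)

# Synthetic epochs: a class difference with a fixed spatial pattern is
# present from time point 3 to 5, so models should generalize across them
X = rng.standard_normal((n_epochs, n_channels, n_times))
X[y == 1, :, 3:6] += 1.5

# Interleaved split so that both classes appear in train and test sets
train = np.arange(n_epochs) % 2 == 0
test = ~train

scores = np.empty((n_times, n_times))  # (training time, testing time)
for t_train in range(n_times):
    # Fit a nearest-class-mean model on the data at one time instant
    X_tr, y_tr = X[train, :, t_train], y[train]
    mean0 = X_tr[y_tr == 0].mean(axis=0)
    mean1 = X_tr[y_tr == 1].mean(axis=0)
    for t_test in range(n_times):
        # Evaluate that same model at every time instant
        X_te = X[test, :, t_test]
        d0 = np.linalg.norm(X_te - mean0, axis=1)
        d1 = np.linalg.norm(X_te - mean1, axis=1)
        y_pred = (d1 < d0).astype(int)
        scores[t_train, t_test] = np.mean(y_pred == y[test])

# The diagonal (train and test at the same instant) is decoding over time
diagonal = np.diag(scores)
print(np.round(scores, 2))
```

A square block of high scores indicates that the pattern learned at one instant remains valid at the others, while scores confined to the diagonal would suggest a pattern that changes over time.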

This runs the analysis used in [6] and further detailed in [7]:

# define the Temporal generalization object

time_gen = GeneralizingEstimator(clf, n_jobs=1, scoring='roc_auc',

verbose=True)

scores = cross_val_multiscore(time_gen, X, y, cv=5, n_jobs=1)

# Mean scores across cross-validation splits

scores = np.mean(scores, axis=0)

# Plot the diagonal (it's exactly the same as the time-by-time decoding above)

fig, ax = plt.subplots()

ax.plot(epochs.times, np.diag(scores), label='score')

ax.axhline(.5, color='k', linestyle='--', label='chance')

ax.set_xlabel('Times')

ax.set_ylabel('AUC')

ax.legend()

ax.axvline(.0, color='k', linestyle='-')

ax.set_title('Decoding MEG sensors over time')

Out:


79%|#######9 | Fitting GeneralizingEstimator : 34/43 [00:00<00:00, 45.78it/s]

81%|########1 | Fitting GeneralizingEstimator : 35/43 [00:00<00:00, 42.27it/s]

86%|########6 | Fitting GeneralizingEstimator : 37/43 [00:00<00:00, 42.94it/s]

88%|########8 | Fitting GeneralizingEstimator : 38/43 [00:00<00:00, 40.28it/s]

95%|#########5| Fitting GeneralizingEstimator : 41/43 [00:00<00:00, 42.90it/s]

98%|#########7| Fitting GeneralizingEstimator : 42/43 [00:01<00:00, 40.11it/s]

100%|##########| Fitting GeneralizingEstimator : 43/43 [00:01<00:00, 41.34it/s]

0%| | Scoring GeneralizingEstimator : 0/1849 [00:00<?, ?it/s]

1%| | Scoring GeneralizingEstimator : 18/1849 [00:00<00:06, 278.65it/s]

3%|2 | Scoring GeneralizingEstimator : 55/1849 [00:00<00:03, 566.40it/s]

5%|5 | Scoring GeneralizingEstimator : 93/1849 [00:00<00:02, 716.49it/s]

7%|7 | Scoring GeneralizingEstimator : 131/1849 [00:00<00:02, 807.12it/s]

9%|9 | Scoring GeneralizingEstimator : 168/1849 [00:00<00:01, 861.27it/s]

11%|# | Scoring GeneralizingEstimator : 201/1849 [00:00<00:01, 880.36it/s]

13%|#2 | Scoring GeneralizingEstimator : 238/1849 [00:00<00:01, 912.16it/s]

15%|#4 | Scoring GeneralizingEstimator : 277/1849 [00:00<00:01, 944.36it/s]

17%|#7 | Scoring GeneralizingEstimator : 315/1849 [00:00<00:01, 966.34it/s]

19%|#9 | Scoring GeneralizingEstimator : 353/1849 [00:00<00:01, 983.33it/s]

21%|##1 | Scoring GeneralizingEstimator : 392/1849 [00:00<00:01, 1001.89it/s]

23%|##3 | Scoring GeneralizingEstimator : 430/1849 [00:00<00:01, 1013.21it/s]

25%|##5 | Scoring GeneralizingEstimator : 468/1849 [00:00<00:01, 1023.89it/s]

27%|##7 | Scoring GeneralizingEstimator : 507/1849 [00:00<00:01, 1035.01it/s]

29%|##9 | Scoring GeneralizingEstimator : 545/1849 [00:00<00:01, 1041.94it/s]

32%|###1 | Scoring GeneralizingEstimator : 583/1849 [00:00<00:01, 1048.89it/s]

34%|###3 | Scoring GeneralizingEstimator : 622/1849 [00:00<00:01, 1057.09it/s]

36%|###5 | Scoring GeneralizingEstimator : 660/1849 [00:00<00:01, 1062.26it/s]

38%|###7 | Scoring GeneralizingEstimator : 698/1849 [00:00<00:01, 1065.66it/s]

40%|###9 | Scoring GeneralizingEstimator : 737/1849 [00:00<00:01, 1071.99it/s]

42%|####1 | Scoring GeneralizingEstimator : 775/1849 [00:00<00:00, 1075.72it/s]

44%|####3 | Scoring GeneralizingEstimator : 813/1849 [00:00<00:00, 1078.73it/s]

46%|####6 | Scoring GeneralizingEstimator : 852/1849 [00:00<00:00, 1082.39it/s]

48%|####8 | Scoring GeneralizingEstimator : 891/1849 [00:00<00:00, 1085.86it/s]

50%|##### | Scoring GeneralizingEstimator : 930/1849 [00:00<00:00, 1090.45it/s]

52%|#####2 | Scoring GeneralizingEstimator : 967/1849 [00:00<00:00, 1090.80it/s]

54%|#####3 | Scoring GeneralizingEstimator : 996/1849 [00:00<00:00, 1075.34it/s]

56%|#####5 | Scoring GeneralizingEstimator : 1035/1849 [00:00<00:00, 1080.26it/s]

58%|#####8 | Scoring GeneralizingEstimator : 1073/1849 [00:01<00:00, 1083.03it/s]

60%|###### | Scoring GeneralizingEstimator : 1111/1849 [00:01<00:00, 1085.66it/s]

62%|######2 | Scoring GeneralizingEstimator : 1147/1849 [00:01<00:00, 1084.05it/s]

64%|######4 | Scoring GeneralizingEstimator : 1184/1849 [00:01<00:00, 1084.37it/s]

66%|######6 | Scoring GeneralizingEstimator : 1222/1849 [00:01<00:00, 1086.67it/s]

68%|######8 | Scoring GeneralizingEstimator : 1260/1849 [00:01<00:00, 1088.98it/s]

70%|####### | Scoring GeneralizingEstimator : 1297/1849 [00:01<00:00, 1089.32it/s]

72%|#######2 | Scoring GeneralizingEstimator : 1333/1849 [00:01<00:00, 1087.48it/s]

74%|#######4 | Scoring GeneralizingEstimator : 1372/1849 [00:01<00:00, 1090.00it/s]

76%|#######6 | Scoring GeneralizingEstimator : 1406/1849 [00:01<00:00, 1082.93it/s]

78%|#######7 | Scoring GeneralizingEstimator : 1439/1849 [00:01<00:00, 1076.73it/s]

80%|#######9 | Scoring GeneralizingEstimator : 1474/1849 [00:01<00:00, 1074.47it/s]

82%|########1 | Scoring GeneralizingEstimator : 1509/1849 [00:01<00:00, 1072.18it/s]

84%|########3 | Scoring GeneralizingEstimator : 1545/1849 [00:01<00:00, 1071.78it/s]

86%|########5 | Scoring GeneralizingEstimator : 1581/1849 [00:01<00:00, 1070.83it/s]

88%|########7 | Scoring GeneralizingEstimator : 1618/1849 [00:01<00:00, 1071.60it/s]

90%|########9 | Scoring GeneralizingEstimator : 1655/1849 [00:01<00:00, 1072.67it/s]

91%|#########1| Scoring GeneralizingEstimator : 1687/1849 [00:01<00:00, 1065.12it/s]

93%|#########3| Scoring GeneralizingEstimator : 1726/1849 [00:01<00:00, 1069.17it/s]

95%|#########5| Scoring GeneralizingEstimator : 1763/1849 [00:01<00:00, 1069.88it/s]

97%|#########7| Scoring GeneralizingEstimator : 1797/1849 [00:01<00:00, 1065.54it/s]

99%|#########9| Scoring GeneralizingEstimator : 1835/1849 [00:01<00:00, 1068.59it/s]

100%|##########| Scoring GeneralizingEstimator : 1849/1849 [00:01<00:00, 1060.39it/s]

0%| | Fitting GeneralizingEstimator : 0/43 [00:00<?, ?it/s]

5%|4 | Fitting GeneralizingEstimator : 2/43 [00:00<00:00, 55.18it/s]

9%|9 | Fitting GeneralizingEstimator : 4/43 [00:00<00:00, 41.64it/s]

14%|#3 | Fitting GeneralizingEstimator : 6/43 [00:00<00:00, 44.76it/s]

16%|#6 | Fitting GeneralizingEstimator : 7/43 [00:00<00:01, 35.16it/s]

23%|##3 | Fitting GeneralizingEstimator : 10/43 [00:00<00:00, 43.85it/s]

26%|##5 | Fitting GeneralizingEstimator : 11/43 [00:00<00:00, 36.65it/s]

33%|###2 | Fitting GeneralizingEstimator : 14/43 [00:00<00:00, 42.77it/s]

35%|###4 | Fitting GeneralizingEstimator : 15/43 [00:00<00:00, 37.39it/s]

42%|####1 | Fitting GeneralizingEstimator : 18/43 [00:00<00:00, 42.29it/s]

44%|####4 | Fitting GeneralizingEstimator : 19/43 [00:00<00:00, 37.83it/s]

53%|#####3 | Fitting GeneralizingEstimator : 23/43 [00:00<00:00, 44.15it/s]

58%|#####8 | Fitting GeneralizingEstimator : 25/43 [00:00<00:00, 42.36it/s]

65%|######5 | Fitting GeneralizingEstimator : 28/43 [00:00<00:00, 45.10it/s]

67%|######7 | Fitting GeneralizingEstimator : 29/43 [00:00<00:00, 41.77it/s]

74%|#######4 | Fitting GeneralizingEstimator : 32/43 [00:00<00:00, 44.21it/s]

77%|#######6 | Fitting GeneralizingEstimator : 33/43 [00:00<00:00, 41.34it/s]

84%|########3 | Fitting GeneralizingEstimator : 36/43 [00:00<00:00, 44.16it/s]

86%|########6 | Fitting GeneralizingEstimator : 37/43 [00:00<00:00, 40.99it/s]

91%|######### | Fitting GeneralizingEstimator : 39/43 [00:00<00:00, 41.95it/s]

93%|#########3| Fitting GeneralizingEstimator : 40/43 [00:00<00:00, 41.27it/s]

95%|#########5| Fitting GeneralizingEstimator : 41/43 [00:00<00:00, 40.63it/s]

100%|##########| Fitting GeneralizingEstimator : 43/43 [00:01<00:00, 41.97it/s]

100%|##########| Fitting GeneralizingEstimator : 43/43 [00:01<00:00, 41.87it/s]

0%| | Scoring GeneralizingEstimator : 0/1849 [00:00<?, ?it/s]

1%| | Scoring GeneralizingEstimator : 13/1849 [00:00<00:08, 206.94it/s]

2%|2 | Scoring GeneralizingEstimator : 44/1849 [00:00<00:03, 461.39it/s]

4%|4 | Scoring GeneralizingEstimator : 76/1849 [00:00<00:02, 594.14it/s]

6%|5 | Scoring GeneralizingEstimator : 106/1849 [00:00<00:02, 659.19it/s]

7%|7 | Scoring GeneralizingEstimator : 136/1849 [00:00<00:02, 701.71it/s]

9%|9 | Scoring GeneralizingEstimator : 175/1849 [00:00<00:02, 776.45it/s]

12%|#1 | Scoring GeneralizingEstimator : 213/1849 [00:00<00:01, 827.43it/s]

14%|#3 | Scoring GeneralizingEstimator : 250/1849 [00:00<00:01, 863.52it/s]

16%|#5 | Scoring GeneralizingEstimator : 287/1849 [00:00<00:01, 892.27it/s]

18%|#7 | Scoring GeneralizingEstimator : 326/1849 [00:00<00:01, 920.78it/s]

20%|#9 | Scoring GeneralizingEstimator : 362/1849 [00:00<00:01, 934.63it/s]

22%|##1 | Scoring GeneralizingEstimator : 400/1849 [00:00<00:01, 953.31it/s]

24%|##3 | Scoring GeneralizingEstimator : 439/1849 [00:00<00:01, 972.83it/s]

26%|##5 | Scoring GeneralizingEstimator : 477/1849 [00:00<00:01, 985.02it/s]

28%|##7 | Scoring GeneralizingEstimator : 516/1849 [00:00<00:01, 1000.12it/s]

30%|##9 | Scoring GeneralizingEstimator : 554/1849 [00:00<00:01, 1009.88it/s]

32%|###2 | Scoring GeneralizingEstimator : 593/1849 [00:00<00:01, 1021.62it/s]

34%|###4 | Scoring GeneralizingEstimator : 631/1849 [00:00<00:01, 1029.85it/s]

36%|###6 | Scoring GeneralizingEstimator : 667/1849 [00:00<00:01, 1031.72it/s]

38%|###8 | Scoring GeneralizingEstimator : 703/1849 [00:00<00:01, 1034.16it/s]

40%|###9 | Scoring GeneralizingEstimator : 732/1849 [00:00<00:01, 1021.01it/s]

41%|####1 | Scoring GeneralizingEstimator : 763/1849 [00:00<00:01, 1012.25it/s]

43%|####2 | Scoring GeneralizingEstimator : 795/1849 [00:00<00:01, 1007.62it/s]

45%|####4 | Scoring GeneralizingEstimator : 826/1849 [00:00<00:01, 1001.13it/s]

46%|####6 | Scoring GeneralizingEstimator : 857/1849 [00:00<00:00, 995.42it/s]

48%|####8 | Scoring GeneralizingEstimator : 888/1849 [00:00<00:00, 990.22it/s]

50%|####9 | Scoring GeneralizingEstimator : 917/1849 [00:00<00:00, 980.90it/s]

51%|#####1 | Scoring GeneralizingEstimator : 945/1849 [00:00<00:00, 971.02it/s]

53%|#####3 | Scoring GeneralizingEstimator : 983/1849 [00:01<00:00, 980.39it/s]

55%|#####5 | Scoring GeneralizingEstimator : 1022/1849 [00:01<00:00, 991.04it/s]

57%|#####6 | Scoring GeneralizingEstimator : 1050/1849 [00:01<00:00, 981.05it/s]

59%|#####8 | Scoring GeneralizingEstimator : 1089/1849 [00:01<00:00, 991.34it/s]

61%|###### | Scoring GeneralizingEstimator : 1127/1849 [00:01<00:00, 998.93it/s]

63%|######2 | Scoring GeneralizingEstimator : 1156/1849 [00:01<00:00, 989.64it/s]

65%|######4 | Scoring GeneralizingEstimator : 1195/1849 [00:01<00:00, 999.42it/s]

67%|######6 | Scoring GeneralizingEstimator : 1233/1849 [00:01<00:00, 1006.58it/s]

68%|######8 | Scoring GeneralizingEstimator : 1262/1849 [00:01<00:00, 997.62it/s]

70%|####### | Scoring GeneralizingEstimator : 1301/1849 [00:01<00:00, 1006.30it/s]

72%|#######2 | Scoring GeneralizingEstimator : 1339/1849 [00:01<00:00, 1012.99it/s]

74%|#######3 | Scoring GeneralizingEstimator : 1368/1849 [00:01<00:00, 1003.21it/s]

76%|#######6 | Scoring GeneralizingEstimator : 1407/1849 [00:01<00:00, 1010.79it/s]

78%|#######8 | Scoring GeneralizingEstimator : 1446/1849 [00:01<00:00, 1018.81it/s]

80%|#######9 | Scoring GeneralizingEstimator : 1474/1849 [00:01<00:00, 1007.44it/s]

82%|########1 | Scoring GeneralizingEstimator : 1513/1849 [00:01<00:00, 1015.27it/s]

84%|########3 | Scoring GeneralizingEstimator : 1551/1849 [00:01<00:00, 1021.10it/s]

85%|########5 | Scoring GeneralizingEstimator : 1580/1849 [00:01<00:00, 1011.16it/s]

88%|########7 | Scoring GeneralizingEstimator : 1619/1849 [00:01<00:00, 1017.67it/s]

90%|########9 | Scoring GeneralizingEstimator : 1658/1849 [00:01<00:00, 1025.09it/s]

91%|#########1| Scoring GeneralizingEstimator : 1687/1849 [00:01<00:00, 1015.93it/s]

93%|#########3| Scoring GeneralizingEstimator : 1725/1849 [00:01<00:00, 1021.76it/s]

95%|#########5| Scoring GeneralizingEstimator : 1763/1849 [00:01<00:00, 1027.23it/s]

97%|#########6| Scoring GeneralizingEstimator : 1793/1849 [00:01<00:00, 1019.67it/s]

99%|#########9| Scoring GeneralizingEstimator : 1831/1849 [00:01<00:00, 1024.87it/s]

100%|##########| Scoring GeneralizingEstimator : 1849/1849 [00:01<00:00, 1001.23it/s]

0%| | Fitting GeneralizingEstimator : 0/43 [00:00<?, ?it/s]

5%|4 | Fitting GeneralizingEstimator : 2/43 [00:00<00:00, 46.82it/s]

7%|6 | Fitting GeneralizingEstimator : 3/43 [00:00<00:01, 32.05it/s]

12%|#1 | Fitting GeneralizingEstimator : 5/43 [00:00<00:00, 38.24it/s]

14%|#3 | Fitting GeneralizingEstimator : 6/43 [00:00<00:01, 30.70it/s]

19%|#8 | Fitting GeneralizingEstimator : 8/43 [00:00<00:01, 34.89it/s]

23%|##3 | Fitting GeneralizingEstimator : 10/43 [00:00<00:00, 34.10it/s]

28%|##7 | Fitting GeneralizingEstimator : 12/43 [00:00<00:00, 36.45it/s]

30%|### | Fitting GeneralizingEstimator : 13/43 [00:00<00:00, 32.78it/s]

35%|###4 | Fitting GeneralizingEstimator : 15/43 [00:00<00:00, 35.27it/s]

37%|###7 | Fitting GeneralizingEstimator : 16/43 [00:00<00:00, 31.97it/s]

44%|####4 | Fitting GeneralizingEstimator : 19/43 [00:00<00:00, 36.56it/s]

49%|####8 | Fitting GeneralizingEstimator : 21/43 [00:00<00:00, 35.70it/s]

56%|#####5 | Fitting GeneralizingEstimator : 24/43 [00:00<00:00, 39.52it/s]

60%|###### | Fitting GeneralizingEstimator : 26/43 [00:00<00:00, 38.32it/s]

65%|######5 | Fitting GeneralizingEstimator : 28/43 [00:00<00:00, 39.58it/s]

70%|######9 | Fitting GeneralizingEstimator : 30/43 [00:00<00:00, 38.56it/s]

74%|#######4 | Fitting GeneralizingEstimator : 32/43 [00:00<00:00, 39.51it/s]

77%|#######6 | Fitting GeneralizingEstimator : 33/43 [00:00<00:00, 38.92it/s]

84%|########3 | Fitting GeneralizingEstimator : 36/43 [00:00<00:00, 39.46it/s]

86%|########6 | Fitting GeneralizingEstimator : 37/43 [00:00<00:00, 37.36it/s]

91%|######### | Fitting GeneralizingEstimator : 39/43 [00:01<00:00, 38.28it/s]

93%|#########3| Fitting GeneralizingEstimator : 40/43 [00:01<00:00, 37.83it/s]

95%|#########5| Fitting GeneralizingEstimator : 41/43 [00:01<00:00, 36.71it/s]

100%|##########| Fitting GeneralizingEstimator : 43/43 [00:01<00:00, 38.30it/s]

0%| | Scoring GeneralizingEstimator : 0/1849 [00:00<?, ?it/s]

0%| | Scoring GeneralizingEstimator : 2/1849 [00:00<00:43, 42.13it/s]

2%|1 | Scoring GeneralizingEstimator : 31/1849 [00:00<00:04, 391.47it/s]

4%|3 | Scoring GeneralizingEstimator : 69/1849 [00:00<00:02, 619.12it/s]

6%|5 | Scoring GeneralizingEstimator : 107/1849 [00:00<00:02, 742.88it/s]

7%|7 | Scoring GeneralizingEstimator : 136/1849 [00:00<00:02, 766.70it/s]

9%|9 | Scoring GeneralizingEstimator : 175/1849 [00:00<00:02, 835.83it/s]

11%|#1 | Scoring GeneralizingEstimator : 212/1849 [00:00<00:01, 875.77it/s]

13%|#3 | Scoring GeneralizingEstimator : 242/1849 [00:00<00:01, 876.28it/s]

15%|#5 | Scoring GeneralizingEstimator : 281/1849 [00:00<00:01, 910.70it/s]

17%|#7 | Scoring GeneralizingEstimator : 317/1849 [00:00<00:01, 927.54it/s]

19%|#8 | Scoring GeneralizingEstimator : 349/1849 [00:00<00:01, 928.16it/s]

21%|##1 | Scoring GeneralizingEstimator : 389/1849 [00:00<00:01, 953.25it/s]

23%|##2 | Scoring GeneralizingEstimator : 423/1849 [00:00<00:01, 958.16it/s]

25%|##4 | Scoring GeneralizingEstimator : 456/1849 [00:00<00:01, 958.14it/s]

27%|##6 | Scoring GeneralizingEstimator : 495/1849 [00:00<00:01, 975.76it/s]

29%|##8 | Scoring GeneralizingEstimator : 528/1849 [00:00<00:01, 975.26it/s]

30%|### | Scoring GeneralizingEstimator : 563/1849 [00:00<00:01, 979.77it/s]

33%|###2 | Scoring GeneralizingEstimator : 601/1849 [00:00<00:01, 990.81it/s]

34%|###4 | Scoring GeneralizingEstimator : 634/1849 [00:00<00:01, 989.68it/s]

36%|###6 | Scoring GeneralizingEstimator : 669/1849 [00:00<00:01, 992.87it/s]

38%|###8 | Scoring GeneralizingEstimator : 707/1849 [00:00<00:01, 1001.86it/s]

40%|###9 | Scoring GeneralizingEstimator : 739/1849 [00:00<00:01, 997.85it/s]

42%|####1 | Scoring GeneralizingEstimator : 775/1849 [00:00<00:01, 1002.30it/s]

44%|####3 | Scoring GeneralizingEstimator : 813/1849 [00:00<00:01, 1010.14it/s]

46%|####5 | Scoring GeneralizingEstimator : 844/1849 [00:00<00:01, 1002.83it/s]

48%|####7 | Scoring GeneralizingEstimator : 881/1849 [00:00<00:00, 1008.74it/s]

50%|####9 | Scoring GeneralizingEstimator : 920/1849 [00:00<00:00, 1018.33it/s]

51%|#####1 | Scoring GeneralizingEstimator : 949/1849 [00:00<00:00, 1007.90it/s]

53%|#####3 | Scoring GeneralizingEstimator : 987/1849 [00:01<00:00, 1014.34it/s]

56%|#####5 | Scoring GeneralizingEstimator : 1027/1849 [00:01<00:00, 1023.61it/s]

57%|#####7 | Scoring GeneralizingEstimator : 1055/1849 [00:01<00:00, 1011.01it/s]

59%|#####9 | Scoring GeneralizingEstimator : 1094/1849 [00:01<00:00, 1018.61it/s]

61%|######1 | Scoring GeneralizingEstimator : 1133/1849 [00:01<00:00, 1025.82it/s]

63%|######2 | Scoring GeneralizingEstimator : 1162/1849 [00:01<00:00, 1014.98it/s]

65%|######4 | Scoring GeneralizingEstimator : 1201/1849 [00:01<00:00, 1022.50it/s]

67%|######7 | Scoring GeneralizingEstimator : 1240/1849 [00:01<00:00, 1030.26it/s]

69%|######8 | Scoring GeneralizingEstimator : 1268/1849 [00:01<00:00, 1017.99it/s]

71%|####### | Scoring GeneralizingEstimator : 1307/1849 [00:01<00:00, 1025.86it/s]

73%|#######2 | Scoring GeneralizingEstimator : 1345/1849 [00:01<00:00, 1030.78it/s]

74%|#######4 | Scoring GeneralizingEstimator : 1374/1849 [00:01<00:00, 1020.00it/s]

76%|#######6 | Scoring GeneralizingEstimator : 1413/1849 [00:01<00:00, 1027.58it/s]

78%|#######8 | Scoring GeneralizingEstimator : 1451/1849 [00:01<00:00, 1031.94it/s]

80%|######## | Scoring GeneralizingEstimator : 1480/1849 [00:01<00:00, 1022.15it/s]

82%|########2 | Scoring GeneralizingEstimator : 1518/1849 [00:01<00:00, 1027.12it/s]

84%|########4 | Scoring GeneralizingEstimator : 1557/1849 [00:01<00:00, 1032.94it/s]

86%|########5 | Scoring GeneralizingEstimator : 1586/1849 [00:01<00:00, 1022.89it/s]

88%|########7 | Scoring GeneralizingEstimator : 1625/1849 [00:01<00:00, 1028.65it/s]

90%|########9 | Scoring GeneralizingEstimator : 1663/1849 [00:01<00:00, 1033.86it/s]

92%|#########1| Scoring GeneralizingEstimator : 1692/1849 [00:01<00:00, 1024.45it/s]

94%|#########3| Scoring GeneralizingEstimator : 1731/1849 [00:01<00:00, 1031.13it/s]

96%|#########5| Scoring GeneralizingEstimator : 1768/1849 [00:01<00:00, 1033.81it/s]

97%|#########7| Scoring GeneralizingEstimator : 1798/1849 [00:01<00:00, 1026.10it/s]

99%|#########9| Scoring GeneralizingEstimator : 1837/1849 [00:01<00:00, 1032.89it/s]

100%|##########| Scoring GeneralizingEstimator : 1849/1849 [00:01<00:00, 1010.30it/s]

0%| | Fitting GeneralizingEstimator : 0/43 [00:00<?, ?it/s]

5%|4 | Fitting GeneralizingEstimator : 2/43 [00:00<00:00, 53.53it/s]

9%|9 | Fitting GeneralizingEstimator : 4/43 [00:00<00:00, 44.46it/s]

14%|#3 | Fitting GeneralizingEstimator : 6/43 [00:00<00:00, 48.41it/s]

16%|#6 | Fitting GeneralizingEstimator : 7/43 [00:00<00:00, 36.16it/s]

21%|## | Fitting GeneralizingEstimator : 9/43 [00:00<00:00, 38.40it/s]

23%|##3 | Fitting GeneralizingEstimator : 10/43 [00:00<00:00, 33.68it/s]

28%|##7 | Fitting GeneralizingEstimator : 12/43 [00:00<00:00, 36.60it/s]

30%|### | Fitting GeneralizingEstimator : 13/43 [00:00<00:00, 32.45it/s]

35%|###4 | Fitting GeneralizingEstimator : 15/43 [00:00<00:00, 34.18it/s]

37%|###7 | Fitting GeneralizingEstimator : 16/43 [00:00<00:00, 31.76it/s]

44%|####4 | Fitting GeneralizingEstimator : 19/43 [00:00<00:00, 35.91it/s]

47%|####6 | Fitting GeneralizingEstimator : 20/43 [00:00<00:00, 33.38it/s]

51%|#####1 | Fitting GeneralizingEstimator : 22/43 [00:00<00:00, 35.24it/s]

53%|#####3 | Fitting GeneralizingEstimator : 23/43 [00:00<00:00, 32.64it/s]

58%|#####8 | Fitting GeneralizingEstimator : 25/43 [00:00<00:00, 34.27it/s]

60%|###### | Fitting GeneralizingEstimator : 26/43 [00:00<00:00, 32.09it/s]

67%|######7 | Fitting GeneralizingEstimator : 29/43 [00:00<00:00, 35.30it/s]

72%|#######2 | Fitting GeneralizingEstimator : 31/43 [00:00<00:00, 34.91it/s]

79%|#######9 | Fitting GeneralizingEstimator : 34/43 [00:00<00:00, 37.84it/s]

81%|########1 | Fitting GeneralizingEstimator : 35/43 [00:00<00:00, 35.57it/s]

88%|########8 | Fitting GeneralizingEstimator : 38/43 [00:01<00:00, 37.86it/s]

91%|######### | Fitting GeneralizingEstimator : 39/43 [00:01<00:00, 36.11it/s]

98%|#########7| Fitting GeneralizingEstimator : 42/43 [00:01<00:00, 38.42it/s]

100%|##########| Fitting GeneralizingEstimator : 43/43 [00:01<00:00, 38.05it/s]

0%| | Scoring GeneralizingEstimator : 0/1849 [00:00<?, ?it/s]

2%|2 | Scoring GeneralizingEstimator : 37/1849 [00:00<00:02, 627.62it/s]

4%|4 | Scoring GeneralizingEstimator : 75/1849 [00:00<00:02, 811.65it/s]

6%|6 | Scoring GeneralizingEstimator : 112/1849 [00:00<00:01, 891.57it/s]

8%|8 | Scoring GeneralizingEstimator : 150/1849 [00:00<00:01, 940.80it/s]

10%|# | Scoring GeneralizingEstimator : 188/1849 [00:00<00:01, 975.86it/s]

12%|#2 | Scoring GeneralizingEstimator : 226/1849 [00:00<00:01, 1000.81it/s]

14%|#4 | Scoring GeneralizingEstimator : 264/1849 [00:00<00:01, 1018.17it/s]

16%|#6 | Scoring GeneralizingEstimator : 302/1849 [00:00<00:01, 1032.05it/s]

18%|#8 | Scoring GeneralizingEstimator : 340/1849 [00:00<00:01, 1043.61it/s]

20%|## | Scoring GeneralizingEstimator : 377/1849 [00:00<00:01, 1049.27it/s]

22%|##2 | Scoring GeneralizingEstimator : 415/1849 [00:00<00:01, 1057.22it/s]

24%|##4 | Scoring GeneralizingEstimator : 453/1849 [00:00<00:01, 1061.76it/s]

27%|##6 | Scoring GeneralizingEstimator : 491/1849 [00:00<00:01, 1066.70it/s]

29%|##8 | Scoring GeneralizingEstimator : 530/1849 [00:00<00:01, 1074.44it/s]

31%|### | Scoring GeneralizingEstimator : 567/1849 [00:00<00:01, 1075.58it/s]

33%|###2 | Scoring GeneralizingEstimator : 605/1849 [00:00<00:01, 1079.39it/s]

35%|###4 | Scoring GeneralizingEstimator : 644/1849 [00:00<00:01, 1085.39it/s]

37%|###6 | Scoring GeneralizingEstimator : 681/1849 [00:00<00:01, 1086.09it/s]

39%|###8 | Scoring GeneralizingEstimator : 718/1849 [00:00<00:01, 1084.91it/s]

41%|#### | Scoring GeneralizingEstimator : 756/1849 [00:00<00:01, 1087.90it/s]

43%|####2 | Scoring GeneralizingEstimator : 792/1849 [00:00<00:00, 1086.25it/s]

45%|####4 | Scoring GeneralizingEstimator : 827/1849 [00:00<00:00, 1081.00it/s]

47%|####6 | Scoring GeneralizingEstimator : 864/1849 [00:00<00:00, 1081.57it/s]

49%|####8 | Scoring GeneralizingEstimator : 902/1849 [00:00<00:00, 1084.41it/s]

51%|##### | Scoring GeneralizingEstimator : 940/1849 [00:00<00:00, 1086.62it/s]

53%|#####2 | Scoring GeneralizingEstimator : 978/1849 [00:00<00:00, 1088.88it/s]

55%|#####4 | Scoring GeneralizingEstimator : 1015/1849 [00:00<00:00, 1089.09it/s]

57%|#####6 | Scoring GeneralizingEstimator : 1053/1849 [00:00<00:00, 1090.56it/s]

59%|#####9 | Scoring GeneralizingEstimator : 1092/1849 [00:01<00:00, 1094.18it/s]

61%|######1 | Scoring GeneralizingEstimator : 1129/1849 [00:01<00:00, 1093.95it/s]

63%|######3 | Scoring GeneralizingEstimator : 1167/1849 [00:01<00:00, 1095.68it/s]

65%|######5 | Scoring GeneralizingEstimator : 1206/1849 [00:01<00:00, 1099.05it/s]

67%|######7 | Scoring GeneralizingEstimator : 1243/1849 [00:01<00:00, 1098.80it/s]

69%|######9 | Scoring GeneralizingEstimator : 1281/1849 [00:01<00:00, 1100.21it/s]

71%|#######1 | Scoring GeneralizingEstimator : 1318/1849 [00:01<00:00, 1099.47it/s]

73%|#######3 | Scoring GeneralizingEstimator : 1356/1849 [00:01<00:00, 1099.97it/s]

75%|#######5 | Scoring GeneralizingEstimator : 1394/1849 [00:01<00:00, 1101.03it/s]

77%|#######7 | Scoring GeneralizingEstimator : 1432/1849 [00:01<00:00, 1101.76it/s]

80%|#######9 | Scoring GeneralizingEstimator : 1470/1849 [00:01<00:00, 1102.31it/s]

82%|########1 | Scoring GeneralizingEstimator : 1508/1849 [00:01<00:00, 1103.27it/s]

84%|########3 | Scoring GeneralizingEstimator : 1547/1849 [00:01<00:00, 1106.10it/s]

86%|########5 | Scoring GeneralizingEstimator : 1585/1849 [00:01<00:00, 1106.39it/s]

88%|########7 | Scoring GeneralizingEstimator : 1623/1849 [00:01<00:00, 1107.14it/s]

90%|########9 | Scoring GeneralizingEstimator : 1661/1849 [00:01<00:00, 1107.47it/s]

92%|#########1| Scoring GeneralizingEstimator : 1699/1849 [00:01<00:00, 1108.16it/s]

94%|#########3| Scoring GeneralizingEstimator : 1737/1849 [00:01<00:00, 1107.89it/s]

96%|#########5| Scoring GeneralizingEstimator : 1775/1849 [00:01<00:00, 1108.30it/s]

98%|#########8| Scoring GeneralizingEstimator : 1813/1849 [00:01<00:00, 1108.49it/s]

100%|##########| Scoring GeneralizingEstimator : 1849/1849 [00:01<00:00, 1108.25it/s]

100%|##########| Scoring GeneralizingEstimator : 1849/1849 [00:01<00:00, 1093.34it/s]

Plot the full (generalization) matrix:

fig, ax = plt.subplots(1, 1)

im = ax.imshow(scores, interpolation='lanczos', origin='lower', cmap='RdBu_r',

extent=epochs.times[[0, -1, 0, -1]], vmin=0., vmax=1.)

ax.set_xlabel('Testing Time (s)')

ax.set_ylabel('Training Time (s)')

ax.set_title('Temporal generalization')

ax.axvline(0, color='k')

ax.axhline(0, color='k')

plt.colorbar(im, ax=ax)

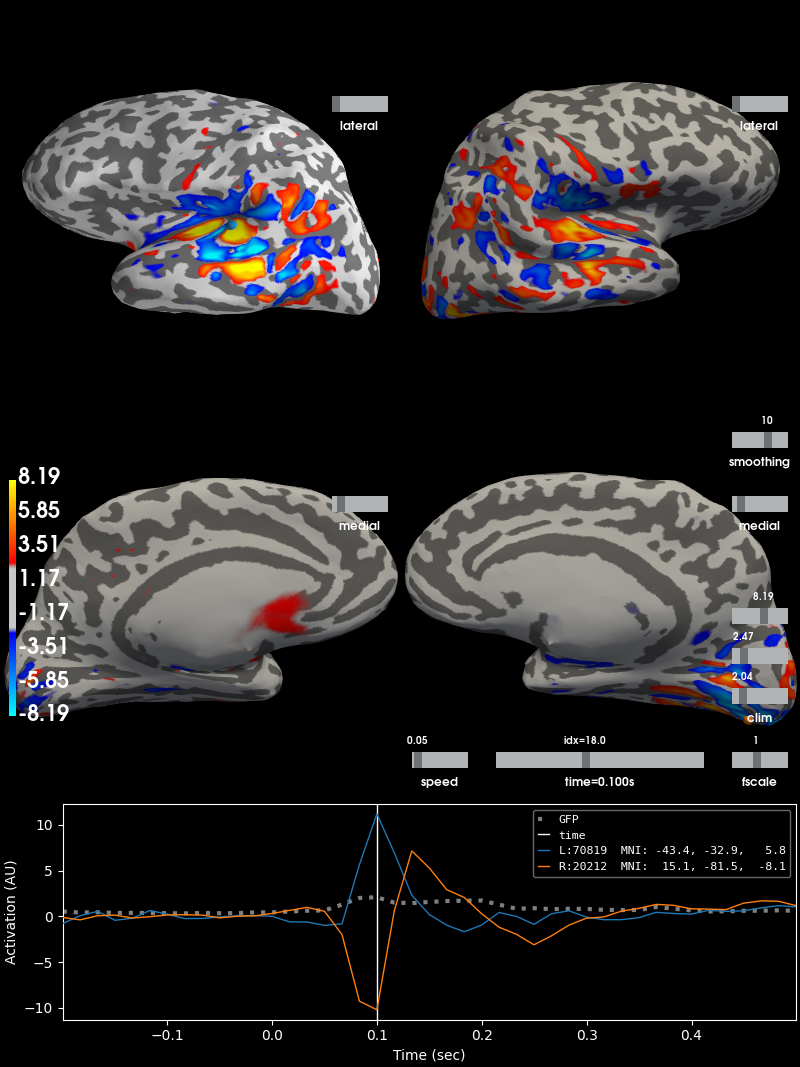
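To make the layout of the matrix concrete, here is a minimal NumPy sketch. The array `scores_demo` is synthetic (it does not hold results from the sample dataset), and the time axis is only assumed to span `tmin` to `tmax` in 43 steps, matching the 43 fits reported above. Rows index training time and columns index testing time, so the diagonal corresponds to training and testing at the same time point, while a single row shows how one decoder generalizes across time.

```python
import numpy as np

n_times = 43  # number of time points fitted above
times = np.linspace(-0.2, 0.5, n_times)  # assumption: evenly spans tmin..tmax

# Synthetic score matrix: chance level (0.5) plus a bump along the diagonal
# after stimulus onset. Rows are training times, columns are testing times.
train_t, test_t = np.meshgrid(times, times, indexing='ij')
scores_demo = 0.5 + 0.4 * (train_t > 0) * (test_t > 0) * np.exp(
    -((train_t - test_t) / 0.05) ** 2)

# Diagonal: decoders trained and tested at the same time point.
diag_scores = np.diag(scores_demo)

# One row: the decoder trained closest to 100 ms, tested at every time point.
train_idx = np.argmin(np.abs(times - 0.1))
row_scores = scores_demo[train_idx]

print(diag_scores.shape, row_scores.shape)
```

With the real `scores` array, the same indexing applies as long as it has shape `(n_times, n_times)`.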

Projecting sensor-space patterns to source space

If you use a linear classifier (or regressor) for your data, you can also

project its sensor-space patterns to source space. For example, using our evoked_time_gen

from before:

cov = mne.compute_covariance(epochs, tmax=0.)

del epochs

fwd = mne.read_forward_solution(

data_path + '/MEG/sample/sample_audvis-meg-eeg-oct-6-fwd.fif')

inv = mne.minimum_norm.make_inverse_operator(

evoked_time_gen.info, fwd, cov, loose=0.)

stc = mne.minimum_norm.apply_inverse(evoked_time_gen, inv, 1. / 9., 'dSPM')

del fwd, inv

Out:

Computing rank from data with rank=None

Using tolerance 3.4e-10 (2.2e-16 eps * 203 dim * 7.6e+03 max singular value)

Estimated rank (grad): 203

GRAD: rank 203 computed from 203 data channels with 0 projectors

Reducing data rank from 203 -> 203

Estimating covariance using EMPIRICAL

Done.

Number of samples used : 1599

[done]

Reading forward solution from /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis-meg-eeg-oct-6-fwd.fif...

Reading a source space...

Computing patch statistics...

Patch information added...

Distance information added...

[done]

Reading a source space...

Computing patch statistics...

Patch information added...

Distance information added...

[done]

2 source spaces read

Desired named matrix (kind = 3523) not available

Read MEG forward solution (7498 sources, 306 channels, free orientations)

Desired named matrix (kind = 3523) not available

Read EEG forward solution (7498 sources, 60 channels, free orientations)

MEG and EEG forward solutions combined

Source spaces transformed to the forward solution coordinate frame

Computing inverse operator with 203 channels.

203 out of 366 channels remain after picking

Selected 203 channels

Creating the depth weighting matrix...

203 planar channels

limit = 7262/7498 = 10.020866

scale = 2.58122e-08 exp = 0.8

Picked elements from a free-orientation depth-weighting prior into the fixed-orientation one

Average patch normals will be employed in the rotation to the local surface coordinates....

Converting to surface-based source orientations...

[done]

Whitening the forward solution.

Computing rank from covariance with rank=None

Using tolerance 1.6e-13 (2.2e-16 eps * 203 dim * 3.6 max singular value)

Estimated rank (grad): 203

GRAD: rank 203 computed from 203 data channels with 0 projectors

Setting small GRAD eigenvalues to zero (without PCA)

Creating the source covariance matrix

Adjusting source covariance matrix.

Computing SVD of whitened and weighted lead field matrix.

largest singular value = 3.91789

scaling factor to adjust the trace = 6.28301e+18

Preparing the inverse operator for use...

Scaled noise and source covariance from nave = 1 to nave = 1

Created the regularized inverter

The projection vectors do not apply to these channels.

Created the whitener using a noise covariance matrix with rank 203 (0 small eigenvalues omitted)

Computing noise-normalization factors (dSPM)...

[done]

Applying inverse operator to ""...

Picked 203 channels from the data

Computing inverse...

Eigenleads need to be weighted ...

Computing residual...

Explained 76.6% variance

dSPM...

[done]
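The value `1. / 9.` passed to `apply_inverse` above is the regularization parameter `lambda2`. A small sketch of where that number plausibly comes from, assuming the common convention `lambda2 = 1 / SNR ** 2` with an amplitude SNR of 3 (the variable `snr` is introduced here for illustration and does not appear in the tutorial code):

```python
# Assumed amplitude signal-to-noise ratio; 3 is the value conventionally
# used for evoked (averaged) data.
snr = 3.0

# Regularization parameter under the lambda2 = 1 / SNR**2 convention.
lambda2 = 1.0 / snr ** 2
print(lambda2)
```

A lower assumed SNR gives a larger `lambda2` and therefore a more strongly regularized (smoother) source estimate.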

And this can be visualized using stc.plot:

brain = stc.plot(hemi='split', views=('lat', 'med'), initial_time=0.1,

subjects_dir=subjects_dir)

Out:

Using control points [2.03727704 2.4722366 8.19426193]

Source-space decoding

Source-space decoding is also possible. Because the number of features can be much larger than in sensor space, univariate feature selection with an ANOVA F-test (or some other metric) can be used to reduce the feature dimension. Decoding results may also be easier to interpret in source space than in sensor space.
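As a rough illustration of the feature-selection idea, the sketch below uses scikit-learn only, on synthetic data standing in for source estimates at a single time point. The array shapes, the number of informative "vertices", and `k=100` are arbitrary assumptions rather than values from the sample dataset. Placing `SelectKBest` inside the pipeline means the F-test is re-fit on the training folds only, which avoids leaking test data into the selection step.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for source-space data: 80 trials x 2000 "vertices",
# i.e. far more features than trials.
rng = np.random.default_rng(42)
n_trials, n_vertices = 80, 2000
X = rng.standard_normal((n_trials, n_vertices))
y = np.repeat([0, 1], n_trials // 2)
X[y == 1, :20] += 1.0  # make the first 20 vertices class-informative

# Univariate ANOVA F-test selection, then scaling, then a linear classifier.
clf = make_pipeline(
    SelectKBest(f_classif, k=100),
    StandardScaler(),
    LogisticRegression(solver='liblinear'),
)

# 5-fold cross-validated ROC AUC.
cv_scores = cross_val_score(clf, X, y, cv=5, scoring='roc_auc')
print(cv_scores.mean())
```

For time-resolved data, the same pipeline could in principle be wrapped in `SlidingEstimator`, as was done for the sensor-space analyses earlier.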

Examples

Exercise

Explore other datasets from MNE (e.g., the SPM Faces dataset, to predict Face vs. Scrambled).

References

- 1

Jean-Rémi King, Laura Gwilliams, Chris Holdgraf, Jona Sassenhagen, Alexandre Barachant, Denis Engemann, Eric Larson, and Alexandre Gramfort. Encoding and decoding neuronal dynamics: methodological framework to uncover the algorithms of cognition. hal-01848442, 2018. URL: https://hal.archives-ouvertes.fr/hal-01848442.

- 2

Zoltan J. Koles. The quantitative extraction and topographic mapping of the abnormal components in the clinical EEG. Electroencephalography and Clinical Neurophysiology, 79(6):440–447, 1991. doi:10.1016/0013-4694(91)90163-X.

- 3

Sven Dähne, Frank C. Meinecke, Stefan Haufe, Johannes Höhne, Michael Tangermann, Klaus-Robert Müller, and Vadim V. Nikulin. SPoC: a novel framework for relating the amplitude of neuronal oscillations to behaviorally relevant parameters. NeuroImage, 86:111–122, 2014. doi:10.1016/j.neuroimage.2013.07.079.

- 4

Bertrand Rivet, Antoine Souloumiac, Virginie Attina, and Guillaume Gibert. xDAWN algorithm to enhance evoked potentials: application to brain–computer interface. IEEE Transactions on Biomedical Engineering, 56(8):2035–2043, 2009. doi:10.1109/TBME.2009.2012869.

- 5

Aaron Schurger, Sebastien Marti, and Stanislas Dehaene. Reducing multi-sensor data to a single time course that reveals experimental effects. BMC Neuroscience, 2013. doi:10.1186/1471-2202-14-122.

- 6

Jean-Rémi King, Alexandre Gramfort, Aaron Schurger, Lionel Naccache, and Stanislas Dehaene. Two distinct dynamic modes subtend the detection of unexpected sounds. PLoS ONE, 9(1):e85791, 2014. doi:10.1371/journal.pone.0085791.

- 7

Jean-Rémi King and Stanislas Dehaene. Characterizing the dynamics of mental representations: the temporal generalization method. Trends in Cognitive Sciences, 18(4):203–210, 2014. doi:10.1016/j.tics.2014.01.002.

Total running time of the script: ( 0 minutes 48.658 seconds)

Estimated memory usage: 487 MB