Note

Go to the end to download the full example code.

Convert iEEG data to BIDS format#

In this example, we use MNE-BIDS to create a BIDS-compatible directory of iEEG data. Specifically, we will follow these steps:

Download some iEEG data.

Load the data, extract information, and save in a new BIDS directory.

Check the result and compare it with the standard.

Cite MNE-BIDS.

Repeat the process for the

fsaveragetemplate coordinate space.

The iEEG data will be written by write_raw_bids() with

the addition of extra metadata elements in the following files:

the sidecar file

ieeg.jsonelectrodes.tsvcoordsystem.jsonevents.tsvchannels.tsv

Compared to EEG data, the main differences are within the

coordsystem.json and electrodes.tsv files.

For more information on these files,

refer to the iEEG part of the BIDS specification.

# Authors: The MNE-BIDS developers

# SPDX-License-Identifier: BSD-3-Clause

import shutil

from pathlib import Path

import mne

import nibabel as nib

import numpy as np

from nilearn.plotting import plot_anat

from mne_bids import (

BIDSPath,

convert_montage_to_mri,

convert_montage_to_ras,

get_anat_landmarks,

print_dir_tree,

read_raw_bids,

search_folder_for_text,

template_to_head,

write_anat,

write_raw_bids,

)

Step 1: Download the data#

First, we need some data to work with. We will use the

data downloaded via MNE-Python’s datasets API:

mne.datasets.misc.data_path()

misc_path = mne.datasets.misc.data_path()

# The electrode coords data are in the tsv file format

# which is easily read in using numpy

raw = mne.io.read_raw_fif(misc_path / "seeg" / "sample_seeg_ieeg.fif")

raw.info["line_freq"] = 60 # specify power line frequency as required by BIDS

subjects_dir = misc_path / "seeg" # Freesurfer recon-all directory

Using default location ~/mne_data for misc...

Dataset misc version 0.0 out of date, latest version is 0.27

0.00B [00:00, ?B/s]

787kB [00:00, 6.87MB/s]

1.57MB [00:00, 7.33MB/s]

4.72MB [00:00, 17.9MB/s]

7.60MB [00:00, 22.0MB/s]

9.82MB [00:00, 21.6MB/s]

12.0MB [00:00, 12.6MB/s]

13.7MB [00:01, 9.07MB/s]

15.6MB [00:01, 10.8MB/s]

17.1MB [00:01, 11.0MB/s]

18.5MB [00:01, 9.53MB/s]

19.7MB [00:01, 9.27MB/s]

20.7MB [00:01, 7.40MB/s]

22.0MB [00:02, 8.34MB/s]

23.3MB [00:02, 8.15MB/s]

24.3MB [00:02, 7.53MB/s]

25.7MB [00:02, 8.18MB/s]

26.6MB [00:02, 6.90MB/s]

27.8MB [00:02, 7.70MB/s]

28.6MB [00:03, 6.37MB/s]

29.9MB [00:03, 7.61MB/s]

30.7MB [00:03, 6.37MB/s]

32.0MB [00:03, 7.49MB/s]

32.8MB [00:03, 6.30MB/s]

34.9MB [00:03, 8.04MB/s]

35.7MB [00:04, 5.30MB/s]

38.8MB [00:04, 9.33MB/s]

41.9MB [00:04, 13.4MB/s]

45.1MB [00:04, 17.1MB/s]

48.2MB [00:04, 20.3MB/s]

51.4MB [00:04, 22.7MB/s]

54.7MB [00:04, 25.4MB/s]

57.7MB [00:04, 26.4MB/s]

60.8MB [00:05, 27.6MB/s]

64.0MB [00:05, 28.3MB/s]

67.1MB [00:05, 29.0MB/s]

70.1MB [00:05, 29.2MB/s]

73.4MB [00:05, 29.9MB/s]

76.5MB [00:05, 27.2MB/s]

79.3MB [00:06, 9.98MB/s]

81.4MB [00:06, 9.65MB/s]

83.9MB [00:06, 11.5MB/s]

86.2MB [00:06, 13.4MB/s]

88.7MB [00:06, 15.5MB/s]

90.9MB [00:07, 9.67MB/s]

93.3MB [00:07, 11.7MB/s]

95.7MB [00:07, 13.6MB/s]

98.3MB [00:07, 16.0MB/s]

101MB [00:07, 17.7MB/s]

104MB [00:07, 21.6MB/s]

107MB [00:08, 17.0MB/s]

109MB [00:08, 8.44MB/s]

110MB [00:09, 6.68MB/s]

112MB [00:09, 6.37MB/s]

115MB [00:09, 9.60MB/s]

118MB [00:09, 11.9MB/s]

120MB [00:09, 14.1MB/s]

122MB [00:09, 12.4MB/s]

124MB [00:10, 8.47MB/s]

126MB [00:10, 9.45MB/s]

127MB [00:10, 8.19MB/s]

128MB [00:11, 6.53MB/s]

130MB [00:11, 8.19MB/s]

132MB [00:11, 9.03MB/s]

133MB [00:11, 9.34MB/s]

134MB [00:11, 9.90MB/s]

136MB [00:11, 8.08MB/s]

137MB [00:11, 7.16MB/s]

137MB [00:12, 6.82MB/s]

139MB [00:12, 7.80MB/s]

140MB [00:12, 6.92MB/s]

141MB [00:12, 6.62MB/s]

141MB [00:12, 5.84MB/s]

142MB [00:12, 7.00MB/s]

144MB [00:12, 9.39MB/s]

145MB [00:13, 8.17MB/s]

146MB [00:13, 6.43MB/s]

147MB [00:13, 6.26MB/s]

148MB [00:13, 8.14MB/s]

149MB [00:13, 8.31MB/s]

150MB [00:13, 7.32MB/s]

151MB [00:13, 6.40MB/s]

152MB [00:14, 5.57MB/s]

154MB [00:14, 8.02MB/s]

155MB [00:14, 7.19MB/s]

155MB [00:14, 6.41MB/s]

156MB [00:14, 5.74MB/s]

158MB [00:14, 7.57MB/s]

158MB [00:14, 7.48MB/s]

159MB [00:15, 6.47MB/s]

160MB [00:15, 5.70MB/s]

161MB [00:15, 5.38MB/s]

163MB [00:15, 7.69MB/s]

163MB [00:15, 6.72MB/s]

164MB [00:15, 6.50MB/s]

165MB [00:16, 5.63MB/s]

166MB [00:16, 7.12MB/s]

168MB [00:16, 8.94MB/s]

169MB [00:16, 7.80MB/s]

170MB [00:16, 6.82MB/s]

170MB [00:17, 3.94MB/s]

174MB [00:17, 7.90MB/s]

177MB [00:17, 12.0MB/s]

180MB [00:17, 15.8MB/s]

183MB [00:17, 19.1MB/s]

186MB [00:17, 21.8MB/s]

189MB [00:17, 24.2MB/s]

192MB [00:17, 25.9MB/s]

196MB [00:17, 27.3MB/s]

199MB [00:18, 28.4MB/s]

202MB [00:18, 29.1MB/s]

205MB [00:18, 27.2MB/s]

208MB [00:18, 28.0MB/s]

211MB [00:18, 28.9MB/s]

214MB [00:18, 29.5MB/s]

217MB [00:18, 29.7MB/s]

220MB [00:18, 29.9MB/s]

224MB [00:18, 30.2MB/s]

227MB [00:18, 30.5MB/s]

230MB [00:19, 30.7MB/s]

233MB [00:19, 30.6MB/s]

236MB [00:19, 17.9MB/s]

239MB [00:19, 18.5MB/s]

241MB [00:19, 18.8MB/s]

243MB [00:19, 18.8MB/s]

245MB [00:19, 19.1MB/s]

247MB [00:20, 19.4MB/s]

249MB [00:20, 19.6MB/s]

251MB [00:20, 19.8MB/s]

253MB [00:20, 19.9MB/s]

256MB [00:20, 9.60MB/s]

257MB [00:21, 9.54MB/s]

258MB [00:21, 9.49MB/s]

260MB [00:21, 9.35MB/s]

261MB [00:21, 9.58MB/s]

262MB [00:21, 9.10MB/s]

263MB [00:21, 9.15MB/s]

264MB [00:21, 9.26MB/s]

265MB [00:21, 9.23MB/s]

266MB [00:22, 9.20MB/s]

267MB [00:22, 9.16MB/s]

268MB [00:22, 9.00MB/s]

269MB [00:22, 8.84MB/s]

270MB [00:22, 8.92MB/s]

271MB [00:22, 9.12MB/s]

272MB [00:22, 9.07MB/s]

273MB [00:22, 9.27MB/s]

274MB [00:22, 9.21MB/s]

276MB [00:23, 9.31MB/s]

277MB [00:23, 9.33MB/s]

278MB [00:23, 9.28MB/s]

279MB [00:23, 9.24MB/s]

280MB [00:23, 9.52MB/s]

281MB [00:23, 9.57MB/s]

282MB [00:23, 9.34MB/s]

283MB [00:23, 8.82MB/s]

284MB [00:23, 9.08MB/s]

285MB [00:24, 8.79MB/s]

286MB [00:24, 9.24MB/s]

287MB [00:24, 9.24MB/s]

288MB [00:24, 9.22MB/s]

289MB [00:24, 9.28MB/s]

290MB [00:24, 9.31MB/s]

291MB [00:24, 9.33MB/s]

292MB [00:24, 9.29MB/s]

0.00B [00:00, ?B/s]

0.00B [00:00, ?B/s]

Download complete in 29s (278.8 MB)

Opening raw data file /home/runner/mne_data/MNE-misc-data/seeg/sample_seeg_ieeg.fif...

Range : 1310640 ... 1370605 = 1311.411 ... 1371.411 secs

Ready.

When the locations of the channels in this dataset were found in

Locating Intracranial Electrode Contacts,

the T1 was aligned to ACPC. So, this montage is in an

ACPC-aligned coordinate system.

We can either save the channel positions in the subject’s anatomical

space (from their T1 image) or we can transform to a template space

such as fsaverage. To save them in the individual space, it is

required that the T1 have been aligned to ACPC and then the channel positions

be in terms of that coordinate system. Automated alignment to ACPC has not

been implemented in MNE yet, so if the channel positions are not in

an ACPC-aligned coordinate system, using a template (like fsaverage)

is the best option.

# estimate the transformation from "head" to "mri" space

trans = mne.coreg.estimate_head_mri_t("sample_seeg", subjects_dir)

Now let’s convert the montage to “ras”

montage = raw.get_montage()

montage.apply_trans(trans) # head->mri

convert_montage_to_ras(montage, "sample_seeg", subjects_dir) # mri->ras

BIDS vs MNE-Python Coordinate Systems#

BIDS has many acceptable coordinate systems for iEEG, which can be viewed in appendix VIII of the BIDS specification. However, MNE-BIDS depends on MNE-Python and MNE-Python does not support all these coordinate systems (yet).

MNE-Python has a few tutorials on this topic:

MNE-Python supports using mni_tal and mri coordinate frames,

corresponding to the fsaverage and ACPC (for an ACPC-aligned T1) BIDS

coordinate systems respectively. All other coordinate coordinate frames in

MNE-Python, if written with mne_bids.write_raw_bids(), must have

an mne_bids.BIDSPath.space specified, and will be read in with

the montage channel locations set to the coordinate frame ‘unknown’.

Step 2: Formatting as BIDS#

Now, let us format the Raw object into BIDS.

With this step, we have everything to start a new BIDS directory using

our data. To do that, we can use write_raw_bids()

Generally, write_raw_bids() tries to extract as much

meta data as possible from the raw data and then formats it in a BIDS

compatible way. write_raw_bids() takes a bunch of inputs, most of

which are however optional. The required inputs are:

rawbids_basenamebids_root

… as you can see in the docstring:

print(write_raw_bids.__doc__)

Save raw data to a BIDS-compliant folder structure.

.. warning:: * The original file is simply copied over if the original

file format is BIDS-supported for that datatype. Otherwise,

this function will convert to a BIDS-supported file format

while warning the user. For EEG and iEEG data, conversion

will be to BrainVision format; for MEG, conversion will be

to FIFF.

* ``mne-bids`` will infer the manufacturer information

from the file extension. If your file format is non-standard

for the manufacturer, please update the manufacturer field

in the sidecars manually.

Parameters

----------

raw : mne.io.Raw

The raw data. It must be an instance of `mne.io.Raw` that is not

already loaded from disk unless ``allow_preload`` is explicitly set

to ``True``. See warning for the ``allow_preload`` parameter.

bids_path : BIDSPath

The file to write. The :class:`mne_bids.BIDSPath` instance passed here

**must** have the ``subject``, ``task``, and ``root`` attributes set.

If the ``datatype`` attribute is not set, it will be inferred from the

recording data type found in ``raw``. In case of multiple data types,

the ``.datatype`` attribute must be set.

Example::

bids_path = BIDSPath(subject='01', session='01', task='testing',

acquisition='01', run='01', datatype='meg',

root='/data/BIDS')

This will write the following files in the correct subfolder ``root``::

sub-01_ses-01_task-testing_acq-01_run-01_meg.fif

sub-01_ses-01_task-testing_acq-01_run-01_meg.json

sub-01_ses-01_task-testing_acq-01_run-01_channels.tsv

sub-01_ses-01_acq-01_coordsystem.json

and the following one if ``events`` is not ``None``::

sub-01_ses-01_task-testing_acq-01_run-01_events.tsv

and add a line to the following files::

participants.tsv

scans.tsv

Note that the extension is automatically inferred from the raw

object.

events : path-like | np.ndarray | None

Use this parameter to specify events to write to the ``*_events.tsv``

sidecar file, additionally to the object's :class:`~mne.Annotations`

(which are always written).

If ``path-like``, specifies the location of an MNE events file.

If an array, the MNE events array (shape: ``(n_events, 3)``).

If a path or an array and ``raw.annotations`` exist, the union of

``events`` and ``raw.annotations`` will be written.

Mappings from event names to event codes (listed in the third

column of the MNE events array) must be specified via the ``event_id``

parameter; otherwise, an exception is raised. If

:class:`~mne.Annotations` are present, their descriptions must be

included in ``event_id`` as well.

If ``None``, events will only be inferred from the raw object's

:class:`~mne.Annotations`.

.. note::

If specified, writes the union of ``events`` and

``raw.annotations``. If you wish to **only** write

``raw.annotations``, pass ``events=None``. If you want to

**exclude** the events in ``raw.annotations`` from being written,

call ``raw.set_annotations(None)`` before invoking this function.

.. note::

Either, descriptions of all event codes must be specified via the

``event_id`` parameter or each event must be accompanied by a

row in ``event_metadata``.

event_id : dict | None

Descriptions or names describing the event codes, if you passed

``events``. The descriptions will be written to the ``trial_type``

column in ``*_events.tsv``. The dictionary keys correspond to the event

description,s and the values to the event codes. You must specify a

description for all event codes appearing in ``events``. If your data

contains :class:`~mne.Annotations`, you can use this parameter to

assign event codes to each unique annotation description (mapping from

description to event code).

event_metadata : pandas.DataFrame | None

Metadata for each event in ``events``. Each row corresponds to an event.

extra_columns_descriptions : dict | None

A dictionary that maps column names of the ``event_metadata`` to descriptions.

Each column of ``event_metadata`` must have a corresponding entry in this.

anonymize : dict | None

If `None` (default), no anonymization is performed.

If a dictionary, data will be anonymized depending on the dictionary

keys: ``daysback`` is a required key, ``keep_his`` is optional.

``daysback`` : int

Number of days by which to move back the recording date in time.

In studies with multiple subjects the relative recording date

differences between subjects can be kept by using the same number

of ``daysback`` for all subject anonymizations. ``daysback`` should

be great enough to shift the date prior to 1925 to conform with

BIDS anonymization rules.

``keep_his`` : bool

If ``False`` (default), all subject information next to the

recording date will be overwritten as well. If ``True``, keep

subject information apart from the recording date.

``keep_source`` : bool

Whether to store the name of the ``raw`` input file in the

``source`` column of ``scans.tsv``. By default, this information

is not stored.

format : 'auto' | 'BrainVision' | 'BDF' | 'EDF' | 'FIF' | 'EEGLAB'

Controls the file format of the data after BIDS conversion. If

``'auto'``, MNE-BIDS will attempt to convert the input data to BIDS

without a change of the original file format. A conversion to a

different file format will then only take place if the original file

format lacks some necessary features.

Conversion may be forced to BrainVision, EDF, or EEGLAB for (i)EEG,

to BDF or EDF for EMG, and to FIF for MEG data.

symlink : bool

Instead of copying the source files, only create symbolic links to

preserve storage space. This is only allowed when not anonymizing the

data (i.e., ``anonymize`` must be ``None``).

.. note::

Symlinks currently only work with FIFF files. In case of split

files, only a link to the first file will be created, and

:func:`mne_bids.read_raw_bids` will correctly handle reading the

data again.

.. note::

Symlinks are currently only supported on macOS and Linux. We will

add support for Windows 10 at a later time.

empty_room : mne.io.Raw | BIDSPath | None

The empty-room recording to be associated with this file. This is

only supported for MEG data.

If :class:`~mne.io.Raw`, you may pass raw data that was not preloaded

(otherwise, pass ``allow_preload=True``); i.e., it behaves similar to

the ``raw`` parameter. The session name will be automatically generated

from the raw object's ``info['meas_date']``.

If a :class:`~mne_bids.BIDSPath`, the ``root`` attribute must be the

same as in ``bids_path``. Pass ``None`` (default) if you do not wish to

specify an associated empty-room recording.

.. versionchanged:: 0.11

Accepts :class:`~mne.io.Raw` data.

allow_preload : bool

If ``True``, allow writing of preloaded raw objects (i.e.,

``raw.preload`` is ``True``). Because the original file is ignored, you

must specify what ``format`` to write (not ``auto``).

.. warning::

BIDS was originally designed for unprocessed or minimally processed

data. For this reason, by default, we prevent writing of preloaded

data that may have been modified. Only use this option when

absolutely necessary: for example, manually converting from file

formats not supported by MNE or writing preprocessed derivatives.

Be aware that these use cases are not fully supported.

montage : mne.channels.DigMontage | None

The montage with channel positions if channel position data are

to be stored in a format other than "head" (the internal MNE

coordinate frame that the data in ``raw`` is stored in).

acpc_aligned : bool

It is difficult to check whether the T1 scan is ACPC aligned which

means that "mri" coordinate space is "ACPC" BIDS coordinate space.

So, this flag is required to be True when the digitization data

is in "mri" for intracranial data to confirm that the T1 is

ACPC-aligned.

electrodes_tsv_task : bool

Add the ``task-`` entity to the ``electrodes.tsv`` filename.

Defaults to ``False``.

emg_placement : "Measured" | "ChannelSpecific" | "Other" | None

How the EMG sensor locations were determined. Must be one of the literal strings

if datatype is "emg" and should be ``None`` for all other datatypes.

overwrite : bool

Whether to overwrite existing files or data in files.

Defaults to ``False``.

If ``True``, any existing files with the same BIDS parameters

will be overwritten with the exception of the ``*_participants.tsv``

and ``*_scans.tsv`` files. For these files, parts of pre-existing data

that match the current data will be replaced. For

``*_participants.tsv``, specifically, age, sex and hand fields will be

overwritten, while any manually added fields in ``participants.json``

and ``participants.tsv`` by a user will be retained.

If ``False``, no existing data will be overwritten or

replaced.

verbose : bool | str | int | None

Control verbosity of the logging output. If ``None``, use the default

verbosity level. See the :ref:`logging documentation <tut-logging>` and

:func:`mne.verbose` for details. Should only be passed as a keyword

argument.

Returns

-------

bids_path : BIDSPath

The path of the created data file.

.. note::

If you passed empty-room raw data via ``empty_room``, the

:class:`~mne_bids.BIDSPath` of the empty-room recording can be

retrieved via ``bids_path.find_empty_room(use_sidecar_only=True)``.

Notes

-----

You should ensure that ``raw.info['subject_info']`` and

``raw.info['meas_date']`` are set to proper (not-``None``) values to allow

for the correct computation of each participant's age when creating

``*_participants.tsv``.

This function will convert existing `mne.Annotations` from

``raw.annotations`` to events. Additionally, any events supplied via

``events`` will be written too. To avoid writing of annotations,

remove them from the raw file via ``raw.set_annotations(None)`` before

invoking ``write_raw_bids``.

To write events encoded in a ``STIM`` channel, you first need to create the

events array manually and pass it to this function:

..

events = mne.find_events(raw, min_duration=0.002)

write_raw_bids(..., events=events)

See the documentation of :func:`mne.find_events` for more information on

event extraction from ``STIM`` channels.

When anonymizing ``.edf`` files, then the file format for EDF limits

how far back we can set the recording date. Therefore, all anonymized

EDF datasets will have an internal recording date of ``01-01-1985``,

and the actual recording date will be stored in the ``scans.tsv``

file's ``acq_time`` column.

``write_raw_bids`` will generate a ``dataset_description.json`` file

if it does not already exist. Minimal metadata will be written there.

If one sets ``overwrite`` to ``True`` here, it will not overwrite an

existing ``dataset_description.json`` file.

If you need to add more data there, or overwrite it, then you should

call :func:`mne_bids.make_dataset_description` directly.

When writing EDF or BDF files, all file extensions are forced to be

lower-case, in compliance with the BIDS specification.

See Also

--------

mne.io.Raw.anonymize

mne.find_events

mne.Annotations

mne.events_from_annotations

Let us initialize some of the necessary data for the subject.

# There is a subject, and specific task for the dataset.

subject_id = "1"

task = "motor"

# get MNE-Python directory w/ example data

mne_data_dir = Path(mne.get_config("MNE_DATASETS_MISC_PATH"))

# There is the root directory for where we will write our data.

bids_root = mne_data_dir / "ieeg_bids"

To ensure the output path doesn’t contain any leftover files from previous tests and example runs, we simply delete it.

Warning

Do not delete directories that may contain important data!

Now we just need make a mne_bids.BIDSPath to save the data.

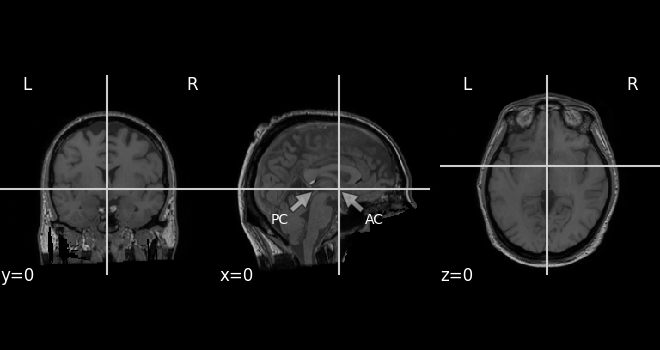

Warning

By passing acpc_aligned=True, we are affirming that

the T1 in this dataset is aligned to ACPC. This is very

difficult to check with a computer which is why this

step is required.

# Now convert our data to be in a new BIDS dataset.

bids_path = BIDSPath(subject=subject_id, task=task, root=bids_root)

# plot T1 to show that it is ACPC-aligned

# note that the origin is centered on the anterior commissure (AC)

# with the y-axis passing through the posterior commissure (PC)

T1_fname = subjects_dir / "sample_seeg" / "mri" / "T1.mgz"

fig = plot_anat(T1_fname, cut_coords=(0, 0, 0))

fig.axes["x"].ax.annotate(

"AC",

(2.0, -2.0),

(30.0, -40.0),

color="w",

arrowprops=dict(facecolor="w", alpha=0.5),

)

fig.axes["x"].ax.annotate(

"PC",

(-31.0, -2.0),

(-80.0, -40.0),

color="w",

arrowprops=dict(facecolor="w", alpha=0.5),

)

# write ACPC-aligned T1

landmarks = get_anat_landmarks(T1_fname, raw.info, trans, "sample_seeg", subjects_dir)

T1_bids_path = write_anat(T1_fname, bids_path, deface=True, landmarks=landmarks)

# write `raw` to BIDS and anonymize it (converts to BrainVision format)

#

# we need to pass the `montage` argument for coordinate frames other than

# "head" which is what MNE uses internally in the `raw` object

#

# `acpc_aligned=True` affirms that our MRI is aligned to ACPC

# if this is not true, convert to `fsaverage` (see below)!

write_raw_bids(

raw,

bids_path,

anonymize=dict(daysback=40000),

montage=montage,

acpc_aligned=True,

overwrite=True,

)

# check our output

print_dir_tree(bids_root)

Writing '/home/runner/mne_data/ieeg_bids/sub-1/anat/sub-1_T1w.json'...

Opening raw data file /home/runner/mne_data/MNE-misc-data/seeg/sample_seeg_ieeg.fif...

Range : 1310640 ... 1370605 = 1311.411 ... 1371.411 secs

Ready.

/home/runner/work/mne-bids/mne-bids/examples/convert_ieeg_to_bids.py:213: RuntimeWarning: Converting data files to BrainVision format for anonymization

write_raw_bids(

Writing '/home/runner/mne_data/ieeg_bids/README'...

Writing '/home/runner/mne_data/ieeg_bids/participants.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/participants.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-ACPC_electrodes.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-ACPC_coordsystem.json'...

The provided raw data contains annotations, but you did not pass an "event_id" mapping from annotation descriptions to event codes. We will generate arbitrary event codes. To specify custom event codes, please pass "event_id".

Used Annotations descriptions: [np.str_('Fixation'), np.str_('Go Cue'), np.str_('ISI Onset'), np.str_('Response')]

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_events.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_events.json'...

Writing '/home/runner/mne_data/ieeg_bids/dataset_description.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_ieeg.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_channels.tsv'...

/home/runner/work/mne-bids/mne-bids/examples/convert_ieeg_to_bids.py:213: RuntimeWarning: Converting data files to BrainVision format

write_raw_bids(

Writing '/home/runner/mne_data/ieeg_bids/sub-1/sub-1_scans.tsv'...

Wrote /home/runner/mne_data/ieeg_bids/sub-1/sub-1_scans.tsv entry with ieeg/sub-1_task-motor_ieeg.vhdr.

|ieeg_bids/

|--- README

|--- dataset_description.json

|--- participants.json

|--- participants.tsv

|--- sub-1/

|------ sub-1_scans.tsv

|------ anat/

|--------- sub-1_T1w.json

|--------- sub-1_T1w.nii.gz

|------ ieeg/

|--------- sub-1_space-ACPC_coordsystem.json

|--------- sub-1_space-ACPC_electrodes.tsv

|--------- sub-1_task-motor_channels.tsv

|--------- sub-1_task-motor_events.json

|--------- sub-1_task-motor_events.tsv

|--------- sub-1_task-motor_ieeg.eeg

|--------- sub-1_task-motor_ieeg.json

|--------- sub-1_task-motor_ieeg.vhdr

|--------- sub-1_task-motor_ieeg.vmrk

MNE-BIDS has created a suitable directory structure for us, and among other

meta data files, it started an events.tsv and channels.tsv file,

and created an initial dataset_description.json file on top!

Now it’s time to manually check the BIDS directory and the meta files to add

all the information that MNE-BIDS could not infer. For instance, you must

describe iEEGReference and iEEGGround yourself.

It’s easy to find these by searching for "n/a" in the sidecar files.

search_folder_for_text("n/a", bids_root)

sub-1/ieeg/sub-1_task-motor_events.json

8 "Description": "Duration of the event in seconds from onset. Must be zero, positive, or 'n/a' if unavailable. A zero value indicates an impulse event. ",

sub-1/ieeg/sub-1_task-motor_ieeg.json

7 "SoftwareFilters": "n/a",

10 "iEEGReference": "n/a",

participants.tsv

1 participa age sex hand weight height

2 sub-1 n/a n/a n/a n/a n/a

sub-1/ieeg/sub-1_task-motor_channels.tsv

1 name type units low_cutof high_cuto descripti sampling_ status status_de

2 LENT 1 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

3 LENT 2 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

4 LENT 3 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

5 LENT 4 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

6 LENT 5 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

7 LENT 6 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

8 LENT 7 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

9 LAMY 1 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

10 LAMY 2 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

11 LAMY 3 SEEG V 0.0 499.70605 StereoEEG 999.41210 good n/a

...

sub-1/ieeg/sub-1_space-ACPC_electrodes.tsv

1 name x y z size

2 LENT 1 -0.021200 0.0158514 -0.039700 n/a

3 LENT 2 -0.023233 0.0168625 -0.038201 n/a

4 LENT 3 -0.026400 0.0185514 -0.035700 n/a

5 LENT 4 -0.029233 0.0198625 -0.033922 n/a

6 LENT 5 -0.031700 0.0206514 -0.032200 n/a

7 LENT 6 -0.034122 0.0217514 -0.030478 n/a

8 LENT 7 -0.036855 0.0225292 -0.028108 n/a

9 LAMY 1 -0.026011 -0.000693 -0.015478 n/a

10 LAMY 2 -0.030600 -0.000148 -0.015200 n/a

11 LAMY 3 -0.035536 0.0011150 -0.014882 n/a

...

Remember that there is a convenient JavaScript tool to validate all your BIDS directories called the “BIDS-validator”, available as a web version and a command line tool:

Web version: https://bids-standard.github.io/bids-validator/

Command line tool: https://www.npmjs.com/package/bids-validator

Step 3: Load channels from BIDS-formatted dataset and compare#

Now we have written our BIDS directory. We can use

read_raw_bids() to read in the data.

# read in the BIDS dataset to plot the coordinates

raw2 = read_raw_bids(bids_path=bids_path)

Extracting parameters from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_ieeg.vhdr...

Setting channel info structure...

Reading events from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_events.tsv.

Reading channel info from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_channels.tsv.

Reading electrode coords from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-ACPC_electrodes.tsv.

Now we have to go back to “head” coordinates.

Note

If you were downloading this from OpenNeuro, you would

have to run the Freesurfer recon-all to get the transforms.

montage2 = raw2.get_montage()

# we need to go from scanner RAS back to surface RAS (requires recon-all)

convert_montage_to_mri(montage2, "sample_seeg", subjects_dir=subjects_dir)

# this uses Freesurfer recon-all subject directory

montage2.add_estimated_fiducials("sample_seeg", subjects_dir=subjects_dir)

# get head->mri trans, invert from mri->head

trans2 = mne.transforms.invert_transform(mne.channels.compute_native_head_t(montage2))

# now the montage is properly in "head" and ready for analysis in MNE

raw2.set_montage(montage2)

# get the monage, apply the trans and make sure it's the same

# note: the head coordinates may differ because they are defined by

# the fiducials which are estimated; as long as the head->mri trans

# is computed with the same fiducials, the coordinates will be the same

# in ACPC space which is what matters

montage = raw.get_montage() # the original montage in 'head' coordinates

montage.apply_trans(trans)

montage2 = raw2.get_montage() # the recovered montage in 'head' coordinates

montage2.apply_trans(trans2)

# compare with standard

print(

f"Recovered coordinate: {montage2.get_positions()['ch_pos']['LENT 1']}\n"

f"Saved coordinate: {montage.get_positions()['ch_pos']['LENT 1']}"

)

Recovered coordinate: [-0.02430001 0.02610001 -0.05600001]

Saved coordinate: [-0.0243 0.0261 -0.056 ]

Step 4: Cite mne-bids#

We can see that the appropriate citations are already written in the README. If you are preparing a manuscript, please make sure to also cite MNE-BIDS there.

References

----------

Appelhoff, S., Sanderson, M., Brooks, T., Vliet, M., Quentin, R., Holdgraf, C., Chaumon, M., Mikulan, E., Tavabi, K., Höchenberger, R., Welke, D., Brunner, C., Rockhill, A., Larson, E., Gramfort, A. and Jas, M. (2019). MNE-BIDS: Organizing electrophysiological data into the BIDS format and facilitating their analysis. Journal of Open Source Software 4: (1896).https://doi.org/10.21105/joss.01896

Holdgraf, C., Appelhoff, S., Bickel, S., Bouchard, K., D'Ambrosio, S., David, O., … Hermes, D. (2019). iEEG-BIDS, extending the Brain Imaging Data Structure specification to human intracranial electrophysiology. Scientific Data, 6, 102. https://doi.org/10.1038/s41597-019-0105-7

Step 5: Store coordinates in a template space#

Alternatively, if your T1 is not aligned to ACPC-space or you prefer to

store the coordinates in a template space (e.g. fsaverage) for another

reason, you can also do that.

Here we’ll use the MNI Talairach transform to get to fsaverage space

from “mri” aka surface RAS space.

fsaverage is very useful for group analysis as shown in

Working with sEEG data. Note, this is only a linear transform and so

one loses quite a bit of accuracy relative to the needs of intracranial

researchers so it is quite suboptimal. A better option is to use a

symmetric diffeomorphic transform to create a one-to-one mapping of brain

voxels from the individual’s brain to the template as shown in

Locating intracranial electrode contacts. Even so, it’s better to provide the coordinates

in the individual’s brain space, as was done above, so that the researcher

who uses the coordinates has the ability to tranform them to a template

of their choice.

Note

For fsaverage, the template coordinate system was defined

so that scanner RAS is equivalent to surface RAS.

BIDS requires that template data be in scanner RAS so for

coordinate frames where this is not the case, the coordinates

must be converted (see below).

# ensure the output path doesn't contain any leftover files from previous

# tests and example runs

if bids_root.exists():

shutil.rmtree(bids_root)

# load our raw data again

raw = mne.io.read_raw_fif(misc_path / "seeg" / "sample_seeg_ieeg.fif")

raw.info["line_freq"] = 60 # specify power line frequency as required by BIDS

# get Talairach transform

mri_mni_t = mne.read_talxfm("sample_seeg", subjects_dir)

Opening raw data file /home/runner/mne_data/MNE-misc-data/seeg/sample_seeg_ieeg.fif...

Range : 1310640 ... 1370605 = 1311.411 ... 1371.411 secs

Ready.

Now let’s convert the montage to MNI Talairach (“mni_tal”).

montage = raw.get_montage()

montage.apply_trans(trans) # head->mri

montage.apply_trans(mri_mni_t)

# write to BIDS, this time with a template coordinate system

write_raw_bids(

raw, bids_path, anonymize=dict(daysback=40000), montage=montage, overwrite=True

)

# read in the BIDS dataset

raw2 = read_raw_bids(bids_path=bids_path)

Opening raw data file /home/runner/mne_data/MNE-misc-data/seeg/sample_seeg_ieeg.fif...

Range : 1310640 ... 1370605 = 1311.411 ... 1371.411 secs

Ready.

/home/runner/work/mne-bids/mne-bids/examples/convert_ieeg_to_bids.py:350: RuntimeWarning: Converting data files to BrainVision format for anonymization

write_raw_bids(

Writing '/home/runner/mne_data/ieeg_bids/README'...

Writing '/home/runner/mne_data/ieeg_bids/participants.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/participants.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-fsaverage_electrodes.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-fsaverage_coordsystem.json'...

The provided raw data contains annotations, but you did not pass an "event_id" mapping from annotation descriptions to event codes. We will generate arbitrary event codes. To specify custom event codes, please pass "event_id".

Used Annotations descriptions: [np.str_('Fixation'), np.str_('Go Cue'), np.str_('ISI Onset'), np.str_('Response')]

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_events.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_events.json'...

Writing '/home/runner/mne_data/ieeg_bids/dataset_description.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_ieeg.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_channels.tsv'...

/home/runner/work/mne-bids/mne-bids/examples/convert_ieeg_to_bids.py:350: RuntimeWarning: Converting data files to BrainVision format

write_raw_bids(

Writing '/home/runner/mne_data/ieeg_bids/sub-1/sub-1_scans.tsv'...

Wrote /home/runner/mne_data/ieeg_bids/sub-1/sub-1_scans.tsv entry with ieeg/sub-1_task-motor_ieeg.vhdr.

Extracting parameters from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_ieeg.vhdr...

Setting channel info structure...

Reading events from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_events.tsv.

Reading channel info from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_channels.tsv.

Reading electrode coords from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-fsaverage_electrodes.tsv.

MNE-Python uses head coordinates with a head -> mri trans so we

need to make sure to get our data in this form. As shown below, the montage

is in the mni_tal coordinate frame but doesn’t have fiducials. The

head coordinate frame is defined based on the fiducial points so we need

to add these. Fortunately, there is a convenient function

(mne_bids.template_to_head()) that loads stored fiducials and takes

care of the transformations. Once this function is applied, you can use

the raw object and the trans as in any MNE example

(e.g. Working with sEEG data).

# use `coord_frame='mri'` to indicate that the montage is in surface RAS

# and `unit='m'` to indicate that the units are in meters

trans2 = template_to_head(raw2.info, space="fsaverage", coord_frame="mri", unit="m")[1]

# this a bit confusing since we transformed from mri->mni and now we're

# saying we're back in 'mri' but that is because we were in the surface RAS

# coordinate frame of `sample_seeg` and transformed to 'mni_tal', which is the

# surface RAS coordinate frame for `fsaverage`: since MNE denotes surface RAS

# as 'mri', both coordinate frames are 'mri', it's just that 'mni_tal' is 'mri'

# when the subject is 'fsaverage'

Let’s check that we can recover the original coordinates from the BIDS

dataset now that we are working in the head coordinate frame with a

head -> mri trans which is the setup MNE-Python is designed around.

# check that we can recover the coordinates

print(

"Recovered coordinate head: {recovered}\n"

"Original coordinate head: {original}".format(

recovered=raw2.info["chs"][0]["loc"][:3], original=raw.info["chs"][0]["loc"][:3]

)

)

# check difference in trans

print(

f"Recovered trans:\n{trans2['trans'].round(3)}\n"

# combine head->mri with mri->mni to get head->mni

# and then invert to get mni->head

"Original trans:\n"

f"{np.linalg.inv(np.dot(trans['trans'], mri_mni_t['trans'])).round(3)}"

)

# ensure that the data in MNI coordinates is exactly the same

# (within computer precision)

montage2 = raw2.get_montage() # get montage after transformed back to head

montage2.apply_trans(trans2)

print(

f"Recovered coordinate: {montage2.get_positions()['ch_pos']['LENT 1']}\n"

f"Original coordinate: {montage.get_positions()['ch_pos']['LENT 1']}"

)

Recovered coordinate head: [-0.02488703 0.03772073 -0.0126908 ]

Original coordinate head: [-0.02599896 0.03496219 -0.01177379]

Recovered trans:

[[ 1. -0.004 -0. 0.002]

[ 0.004 0.998 -0.057 -0.029]

[ 0. 0.057 0.998 -0.041]

[ 0. 0. 0. 1. ]]

Original trans:

[[ 0.966 0.029 0.006 -0.003]

[-0.003 0.926 0.126 0.028]

[-0.001 -0.053 0.946 0.04 ]

[ 0. 0. 0. 1. ]]

Recovered coordinate: [-0.0231477 0.00949445 -0.05183359]

Original coordinate: [-0.0231477 0.00949445 -0.05183359]

As you can see the coordinates stored in the raw object are slightly off.

This is because the head coordinate frame is defined by the fiducials

(nasion, left and right pre-auricular points), and, in the first case,

the fiducials were found on the individual anatomy and then transformed

to MNI space, whereas, in the second case, they were found directly on

the template brain (this was done once for the template so that we could

just load it from a file). This difference means that there are slightly

different head->mri transforms. Once these transforms are applied, however,

the positions are the same in MNI coordinates which is what is important.

As a final step, let’s go over how to assign coordinate systems that are

not recognized by MNE-Python. Many template coordinate systems are allowed by

BIDS but are not used in MNE-Python. For these templates, the fiducials have

been found and the transformations have been pre-computed so that we can

get our coordinates in the head coordinate frame that MNE-Python uses.

Note

As of this writing, BIDS accepts channel coordinates in reference to the

the following template spaces: ICBM452AirSpace,

ICBM452Warp5Space, IXI549Space, fsaverage, fsaverageSym,

fsLR, MNIColin27, MNI152Lin,

MNI152NLin2009[a-c][Sym|Asym], MNI152NLin6Sym,

MNI152NLin6ASym, MNI305, NIHPD, OASIS30AntsOASISAnts,

OASIS30Atropos, Talairach and UNCInfant. As discussed above,

it is recommended to share the coordinates in the individual subject’s

anatomical reference frame so that researchers who use the data can

transform the coordinates to any of these templates that they choose.

BIDS requires that the template be stored in scanner RAS coordinates

so first we’ll convert our original data to scanner RAS and then

convert it back. Just in case the template electrode coordinates are

provided in voxels or the unit is not specified, these options are able

to be overridden in mne_bids.template_to_head() for ease of use.

Warning

If no coordinate frame is passed to mne_bids.template_to_head()

it will infer voxels if the coordinates are only positive and

scanner RAS otherwise. Be sure not to use the wrong coordinate

frame! surface RAS and scanner RAS are quite similar which

is especially confusing, but, fortunately, in most of the Freesurfer

template coordinate systems surface RAS is identical to

scanner RAS. surface RAS is a Freesurfer coordinate frame so

it is most likely to be used with Freesurfer template coordinate

systems). This is the case for fsaverage, MNI305 and

fsaverageSym but not fsLR.

The template should be in scanner RAS:

# ensure the output path doesn't contain any leftover files from previous

# tests and example runs

if bids_root.exists():

shutil.rmtree(bids_root)

# get a template mgz image to transform the montage to voxel coordinates

subjects_dir = mne.datasets.sample.data_path() / "subjects"

template_T1 = nib.load(subjects_dir / "fsaverage" / "mri" / "T1.mgz")

# get voxels to surface RAS and scanner RAS transforms

vox_mri_t = template_T1.header.get_vox2ras_tkr() # surface RAS

vox_ras_t = template_T1.header.get_vox2ras() # scanner RAS

raw = mne.io.read_raw_fif(

misc_path / "seeg" / "sample_seeg_ieeg.fif" # load our raw data again

)

montage = raw.get_montage() # get the original montage

montage.apply_trans(trans) # head->mri

montage.apply_trans(mri_mni_t) # mri->mni

pos = montage.get_positions()

ch_pos = np.array(list(pos["ch_pos"].values())) # get an array of positions

# mri -> vox and m -> mm

ch_pos = mne.transforms.apply_trans(np.linalg.inv(vox_mri_t), ch_pos * 1000)

ch_pos = mne.transforms.apply_trans(vox_ras_t, ch_pos)

montage_ras = mne.channels.make_dig_montage(

ch_pos=dict(zip(pos["ch_pos"].keys(), ch_pos)), coord_frame="ras"

)

# specify our standard template coordinate system space

bids_path.update(datatype="ieeg", space="fsaverage")

# write to BIDS, this time with a template coordinate system in voxels

write_raw_bids(

raw, bids_path, anonymize=dict(daysback=40000), montage=montage_ras, overwrite=True

)

Opening raw data file /home/runner/mne_data/MNE-misc-data/seeg/sample_seeg_ieeg.fif...

Range : 1310640 ... 1370605 = 1311.411 ... 1371.411 secs

Ready.

Opening raw data file /home/runner/mne_data/MNE-misc-data/seeg/sample_seeg_ieeg.fif...

Range : 1310640 ... 1370605 = 1311.411 ... 1371.411 secs

Ready.

/home/runner/work/mne-bids/mne-bids/examples/convert_ieeg_to_bids.py:493: RuntimeWarning: Converting data files to BrainVision format for anonymization

write_raw_bids(

Writing '/home/runner/mne_data/ieeg_bids/README'...

Writing '/home/runner/mne_data/ieeg_bids/participants.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/participants.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-fsaverage_electrodes.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-fsaverage_coordsystem.json'...

The provided raw data contains annotations, but you did not pass an "event_id" mapping from annotation descriptions to event codes. We will generate arbitrary event codes. To specify custom event codes, please pass "event_id".

Used Annotations descriptions: [np.str_('Fixation'), np.str_('Go Cue'), np.str_('ISI Onset'), np.str_('Response')]

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_events.tsv'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_events.json'...

Writing '/home/runner/mne_data/ieeg_bids/dataset_description.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_ieeg.json'...

Writing '/home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_channels.tsv'...

/home/runner/work/mne-bids/mne-bids/examples/convert_ieeg_to_bids.py:493: RuntimeWarning: Converting data files to BrainVision format

write_raw_bids(

Writing '/home/runner/mne_data/ieeg_bids/sub-1/sub-1_scans.tsv'...

Wrote /home/runner/mne_data/ieeg_bids/sub-1/sub-1_scans.tsv entry with ieeg/sub-1_task-motor_space-fsaverage_ieeg.vhdr.

BIDSPath(

root: /home/runner/mne_data/ieeg_bids

datatype: ieeg

basename: sub-1_task-motor_space-fsaverage_ieeg.vhdr)

Now, let’s load our data and convert our montage to head.

raw2 = read_raw_bids(bids_path=bids_path)

trans2 = template_to_head( # unit='auto' automatically determines it's in mm

raw2.info, space="fsaverage", coord_frame="ras", unit="auto"

)[1]

Extracting parameters from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_ieeg.vhdr...

Setting channel info structure...

Reading events from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_events.tsv.

Reading channel info from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_task-motor_space-fsaverage_channels.tsv.

Reading electrode coords from /home/runner/mne_data/ieeg_bids/sub-1/ieeg/sub-1_space-fsaverage_electrodes.tsv.

Let’s check to make sure again that the original coordinates from the BIDS dataset were recovered.

montage2 = raw2.get_montage() # get montage after transformed back to head

montage2.apply_trans(trans2) # apply trans to go back to 'mri'

print(

f"Recovered coordinate: {montage2.get_positions()['ch_pos']['LENT 1']}\n"

f"Original coordinate: {montage.get_positions()['ch_pos']['LENT 1']}"

)

Recovered coordinate: [-0.0231477 0.00949445 -0.05183359]

Original coordinate: [-0.0231477 0.00949445 -0.05183359]

In summary, as we saw, these standard template spaces that are allowable by BIDS are quite complicated. We therefore only cover these cases because datasets are allowed to be in these coordinate systems, and we want to be able to analyze them with MNE-Python. BIDS data in a template coordinate space doesn’t allow you to convert to a template of your choosing so it is better to save the raw data in the individual’s ACPC space. Thus, we recommend, if at all possible, saving BIDS iEEG data in ACPC coordinate space corresponding to the individual subject’s brain, not in a template coordinate frame.

Total running time of the script: (0 minutes 33.557 seconds)