Spatiotemporal permutation F-test on full sensor data

Tests for differential evoked responses in at least one condition using a permutation clustering test. The FieldTrip neighbor templates will be used to determine the adjacency between sensors. This serves as a spatial prior to the clustering. Spatiotemporal clusters will then be visualized using custom matplotlib code.

Here, the unit of observation is epochs from a specific study subject. However, the same logic applies when the unit of observation is a number of study subjects, each of whom contributes their own averaged data (i.e., an average of their epochs). This would then be considered an analysis at the “2nd level”.
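To make the “2nd level” layout concrete, here is a minimal sketch with simulated data. The array sizes and the names `subject_epochs`, `subject_averages`, and `X_group` are illustrative assumptions and are not part of this example's dataset.

```python
import numpy as np

rng = np.random.default_rng(0)
n_subjects, n_epochs, n_channels, n_times = 5, 20, 4, 30  # hypothetical sizes

# One (n_epochs, n_channels, n_times) array per subject, the layout that
# Epochs.get_data() returns in MNE-Python
subject_epochs = [
    rng.standard_normal((n_epochs, n_channels, n_times)) for _ in range(n_subjects)
]

# 1st level: average each subject's epochs -> (n_channels, n_times)
subject_averages = [ep.mean(axis=0) for ep in subject_epochs]

# 2nd level: one observation per subject, transposed to
# (n_subjects, n_times, n_channels) for a spatio-temporal cluster test
X_group = np.stack(subject_averages).transpose(0, 2, 1)
print(X_group.shape)
```

With one such array per condition, the list of arrays plays the same role as the per-epoch data in a single-subject analysis.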

See the FieldTrip tutorial for a caveat regarding the possible interpretation of “significant” clusters.

For more information on cluster-based permutation testing in MNE-Python, see also: Non-parametric 1 sample cluster statistic on single trial power.

# Authors: Denis Engemann <denis.engemann@gmail.com>

# Jona Sassenhagen <jona.sassenhagen@gmail.com>

# Alex Rockhill <aprockhill@mailbox.org>

# Stefan Appelhoff <stefan.appelhoff@mailbox.org>

#

# License: BSD-3-Clause

# Copyright the MNE-Python contributors.

import matplotlib.pyplot as plt

import numpy as np

import scipy.stats

from mpl_toolkits.axes_grid1 import make_axes_locatable

import mne

from mne.channels import find_ch_adjacency

from mne.datasets import sample

from mne.stats import combine_adjacency, spatio_temporal_cluster_test

from mne.viz import plot_compare_evokeds

Set parameters

data_path = sample.data_path()

meg_path = data_path / "MEG" / "sample"

raw_fname = meg_path / "sample_audvis_filt-0-40_raw.fif"

event_fname = meg_path / "sample_audvis_filt-0-40_raw-eve.fif"

event_id = {"Aud/L": 1, "Aud/R": 2, "Vis/L": 3, "Vis/R": 4}

tmin = -0.2

tmax = 0.5

# Setup for reading the raw data

raw = mne.io.read_raw_fif(raw_fname, preload=True)

raw.filter(1, 25)

events = mne.read_events(event_fname)

Opening raw data file /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis_filt-0-40_raw.fif...

Read a total of 4 projection items:

PCA-v1 (1 x 102) idle

PCA-v2 (1 x 102) idle

PCA-v3 (1 x 102) idle

Average EEG reference (1 x 60) idle

Range : 6450 ... 48149 = 42.956 ... 320.665 secs

Ready.

Reading 0 ... 41699 = 0.000 ... 277.709 secs...

Filtering raw data in 1 contiguous segment

Setting up band-pass filter from 1 - 25 Hz

FIR filter parameters

---------------------

Designing a one-pass, zero-phase, non-causal bandpass filter:

- Windowed time-domain design (firwin) method

- Hamming window with 0.0194 passband ripple and 53 dB stopband attenuation

- Lower passband edge: 1.00

- Lower transition bandwidth: 1.00 Hz (-6 dB cutoff frequency: 0.50 Hz)

- Upper passband edge: 25.00 Hz

- Upper transition bandwidth: 6.25 Hz (-6 dB cutoff frequency: 28.12 Hz)

- Filter length: 497 samples (3.310 s)

Read epochs for the channel of interest

picks = mne.pick_types(raw.info, meg="mag", eog=True)

reject = dict(mag=4e-12, eog=150e-6)

epochs = mne.Epochs(
    raw,
    events,
    event_id,
    tmin,
    tmax,
    picks=picks,
    decim=2,  # just for speed!
    baseline=None,
    reject=reject,
    preload=True,
)

epochs.drop_channels(["EOG 061"])

epochs.equalize_event_counts(event_id)

# Obtain the data as a 3D matrix and transpose it such that

# the dimensions are as expected for the cluster permutation test:

# n_epochs × n_times × n_channels

X = [epochs[event_name].get_data(copy=False) for event_name in event_id]

X = [np.transpose(x, (0, 2, 1)) for x in X]

Not setting metadata

288 matching events found

No baseline correction applied

Created an SSP operator (subspace dimension = 3)

3 projection items activated

Using data from preloaded Raw for 288 events and 106 original time points (prior to decimation) ...

Rejecting epoch based on EOG : ['EOG 061']

Rejecting epoch based on MAG : ['MEG 1711']

48 bad epochs dropped

Dropped 16 epochs: 50, 51, 84, 93, 95, 96, 129, 146, 149, 154, 158, 195, 201, 203, 211, 212

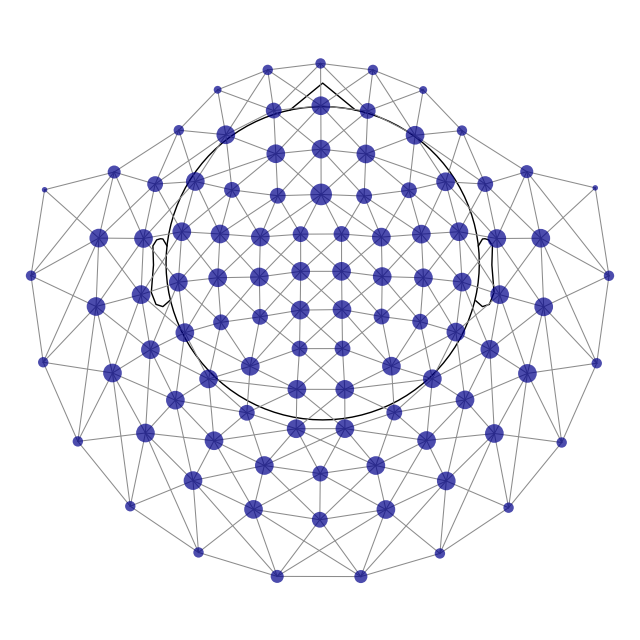

Find the FieldTrip neighbor definition to set up sensor adjacency

adjacency, ch_names = find_ch_adjacency(epochs.info, ch_type="mag")

print(type(adjacency)) # it's a sparse matrix!

mne.viz.plot_ch_adjacency(epochs.info, adjacency, ch_names)

Reading adjacency matrix for neuromag306mag.

<class 'scipy.sparse._csr.csr_array'>
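As a hedged illustration of what a sparse adjacency matrix encodes, the sketch below builds a toy adjacency for four hypothetical sensors arranged in a line and lists each sensor's neighbors. It does not use the FieldTrip template, and the names `toy_adjacency` and `neighbors` are assumptions made for this example.

```python
import numpy as np
from scipy import sparse

ch_names = ["S1", "S2", "S3", "S4"]  # hypothetical sensor names

# Symmetric neighbor relation: S1-S2, S2-S3, S3-S4
rows = np.array([0, 1, 1, 2, 2, 3])
cols = np.array([1, 0, 2, 1, 3, 2])
toy_adjacency = sparse.csr_matrix(
    (np.ones(len(rows), dtype=int), (rows, cols)), shape=(4, 4)
)

# The nonzero column indices in row i are the neighbors of sensor i
neighbors = {
    ch_names[i]: [ch_names[j] for j in sorted(toy_adjacency[i].indices.tolist())]
    for i in range(len(ch_names))
}
print(neighbors)
```

Only the nonzero entries are stored, which is why a sparse format is a natural fit for sensor layouts where each sensor has just a handful of neighbors.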

Compute permutation statistic

How does it work? We use clustering to “bind” together features which are similar. Our features are the magnetic fields measured over our sensor array at different times. Clustering reduces the multiple comparisons problem because inference is made on clusters rather than on individual sensor-time points. To compute the actual test statistic, we sum the F-values within each cluster, so we end up with one statistic for each cluster. Then we generate a null distribution from the data by shuffling our conditions between our samples and recomputing the clusters and their test statistics. We test for the significance of a given cluster by computing the probability of observing a cluster statistic at least that large under this null distribution [1][2].
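The procedure can be sketched on a toy one-dimensional problem (time only, two simulated conditions). This is not MNE-Python's implementation: the helper `max_cluster_mass`, the simulated data, and the choice of keeping the largest cluster statistic per permutation are assumptions made for illustration.

```python
import numpy as np
from scipy import ndimage, stats

rng = np.random.default_rng(42)
n_obs, n_times = 30, 50  # hypothetical sizes

# Two conditions; condition B carries an effect at time points 20-29
cond_a = rng.standard_normal((n_obs, n_times))
cond_b = rng.standard_normal((n_obs, n_times))
cond_b[:, 20:30] += 1.0


def max_cluster_mass(a, b, threshold):
    """Return the largest summed F-value over supra-threshold clusters."""
    f_vals = stats.f_oneway(a, b).statistic
    # Neighboring supra-threshold time points are bound into one cluster
    labels, n_clusters = ndimage.label(f_vals > threshold)
    if n_clusters == 0:
        return 0.0
    masses = ndimage.sum(f_vals, labels, index=np.arange(1, n_clusters + 1))
    return float(np.max(masses))


threshold = stats.f.ppf(1 - 0.01, dfn=1, dfd=2 * n_obs - 2)
observed = max_cluster_mass(cond_a, cond_b, threshold)

# Null distribution: shuffle condition labels and recompute the statistic
pooled = np.concatenate([cond_a, cond_b])
n_permutations = 500
null = np.empty(n_permutations)
for i in range(n_permutations):
    perm = rng.permutation(len(pooled))
    null[i] = max_cluster_mass(pooled[perm[:n_obs]], pooled[perm[n_obs:]], threshold)

# Count the observed labeling as one of the permutations
p_value = (np.sum(null >= observed) + 1) / (n_permutations + 1)
print(f"observed cluster mass = {observed:.1f}, p = {p_value:.4f}")
```

Because the statistic is computed per cluster, only one comparison is made against the null distribution for each cluster, rather than one per time point.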

# We are running an F test, so we look at the upper tail

# see also: https://stats.stackexchange.com/a/73993

tail = 1

# We want to set a critical test statistic (here: F), to determine when

# clusters are being formed. Using Scipy's percent point function of the F

# distribution, we can conveniently select a threshold that corresponds to

# some alpha level that we arbitrarily pick.

alpha_cluster_forming = 0.001

# For an F test we need the degrees of freedom for the numerator

# (number of conditions - 1) and the denominator (number of observations

# - number of conditions):

n_conditions = len(event_id)

n_observations = len(X[0])

dfn = n_conditions - 1

dfd = n_observations - n_conditions

# Note: we calculate 1 - alpha_cluster_forming to get the critical value

# on the right tail

f_thresh = scipy.stats.f.ppf(1 - alpha_cluster_forming, dfn=dfn, dfd=dfd)

# run the cluster based permutation analysis

cluster_stats = spatio_temporal_cluster_test(
    X,
    n_permutations=1000,
    threshold=f_thresh,
    tail=tail,
    n_jobs=None,
    buffer_size=None,
    adjacency=adjacency,
)

F_obs, clusters, p_values, _ = cluster_stats

stat_fun(H1): min=0.0033787374718191373 max=207.77535362731717

Running initial clustering …

Found 22 clusters

100%|██████████| Permuting : 999/999 [00:03<00:00, 273.06it/s]
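The relation between the cluster-forming alpha level and the F threshold can be checked in isolation. The sketch below uses hypothetical degrees of freedom (`dfn = 3`, `dfd = 50`), chosen only for illustration and not taken from the data above.

```python
import scipy.stats

alpha_cluster_forming = 0.001
dfn, dfd = 3, 50  # hypothetical degrees of freedom, for illustration only

# ppf is the inverse CDF: the value below which a fraction of 1 - alpha
# of the F distribution's mass lies
f_thresh = scipy.stats.f.ppf(1 - alpha_cluster_forming, dfn=dfn, dfd=dfd)

# The survival function (1 - CDF) at the threshold recovers alpha
upper_tail = scipy.stats.f.sf(f_thresh, dfn=dfn, dfd=dfd)
print(f"F threshold = {f_thresh:.3f}, upper-tail probability = {upper_tail:.4f}")
```

A smaller alpha gives a larger threshold, so fewer and more compact clusters are formed.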

Note

Note how we only specified an adjacency for sensors! However, because we used mne.stats.spatio_temporal_cluster_test(), an adjacency for time points was automatically taken into account. That is, at time point N, the time points N - 1 and N + 1 were considered as adjacent (this is also called “lattice adjacency”). This is only possible because we ran the analysis on 2D data (times × channels) per observation. For 3D data per observation (e.g., frequencies × times × channels), we will need to use mne.stats.combine_adjacency(), as shown further below.

Note also that the same functions work with source estimates. The only differences are the origin of the data, the size, and the adjacency definition. They can be used for single trials or for groups of subjects.
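The lattice adjacency described in the note above can be sketched with plain SciPy. This is a generic illustration of the idea and not MNE-Python's internal implementation. The three-sensor layout and the node ordering (time-major, so node index = time index × number of sensors + sensor index) are assumptions of this example.

```python
import numpy as np
from scipy import sparse

n_times, n_sensors = 4, 3

# Lattice adjacency in time: sample n is adjacent to n - 1 and n + 1
time_adjacency = sparse.diags([1, 1], offsets=[-1, 1], shape=(n_times, n_times))

# Toy sensor adjacency for three hypothetical sensors in a line
sensor_adjacency = sparse.csr_matrix(np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]]))

# Two (time, sensor) nodes are adjacent if they share the sensor and are
# adjacent in time, or share the time point and are adjacent in space
combined = sparse.csr_matrix(
    sparse.kron(time_adjacency, sparse.identity(n_sensors))
    + sparse.kron(sparse.identity(n_times), sensor_adjacency)
)

print(combined.shape)
# Neighbors of node 0, i.e. (time 0, sensor 0)
row0_neighbors = np.flatnonzero(combined.toarray()[0]).tolist()
print(row0_neighbors)
```

Node 0 is linked to node 1 (same time, neighboring sensor) and node 3 (same sensor, next time point), which is exactly the spatio-temporal neighborhood the note describes.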

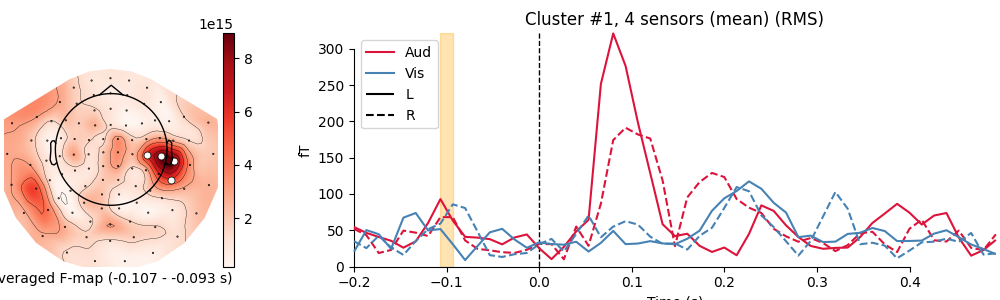

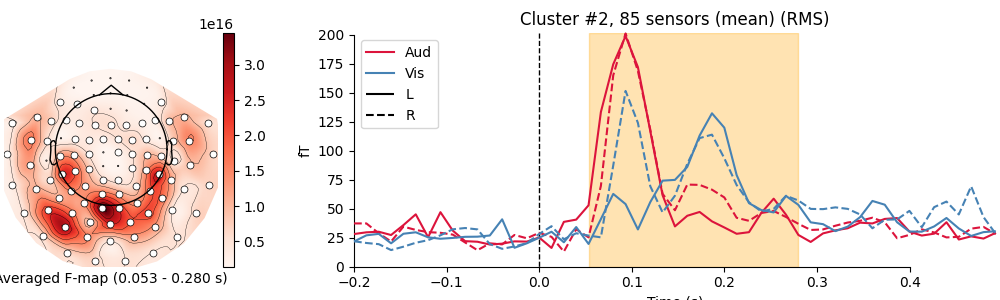

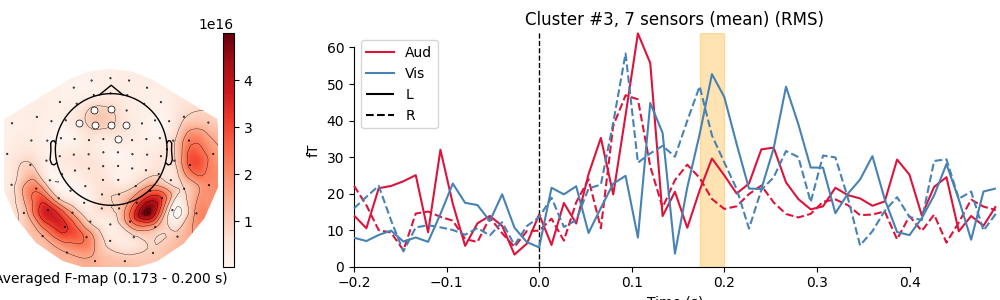

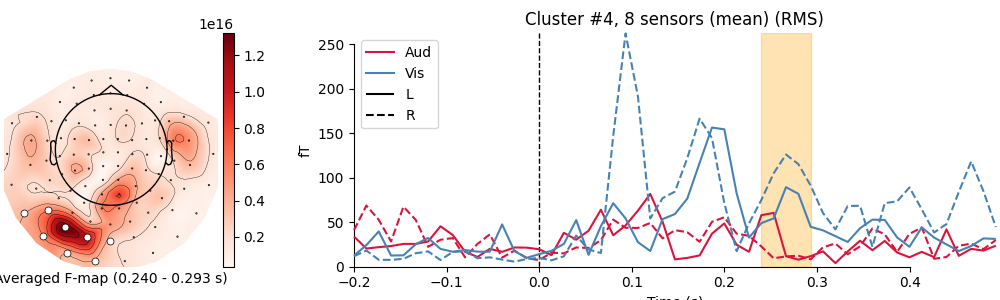

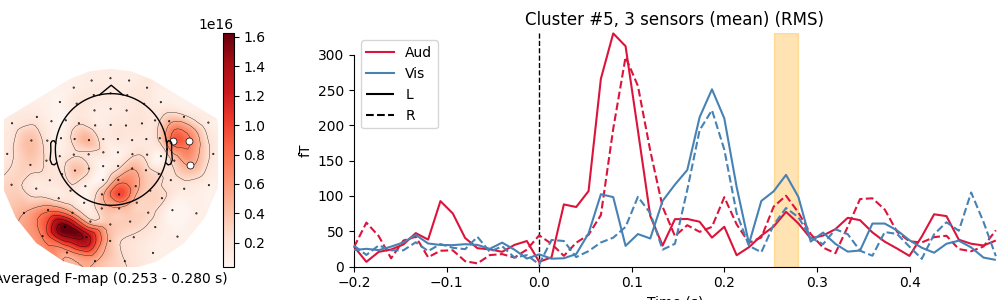

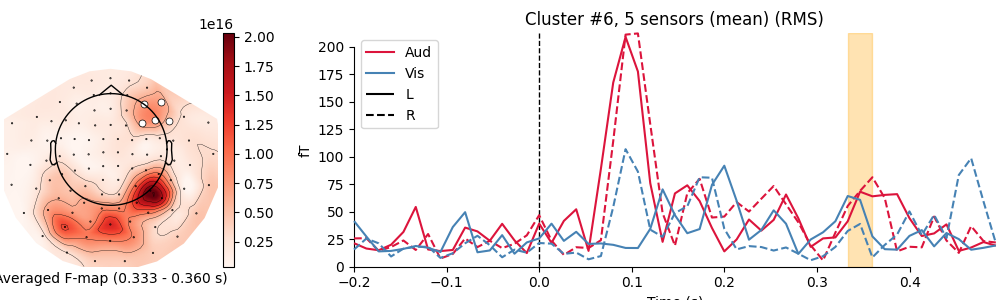

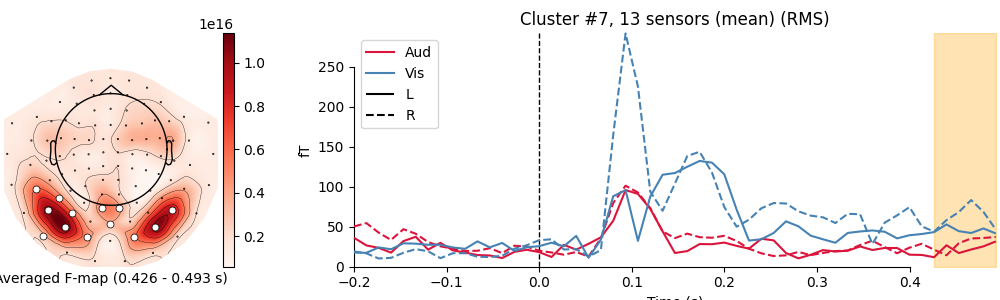

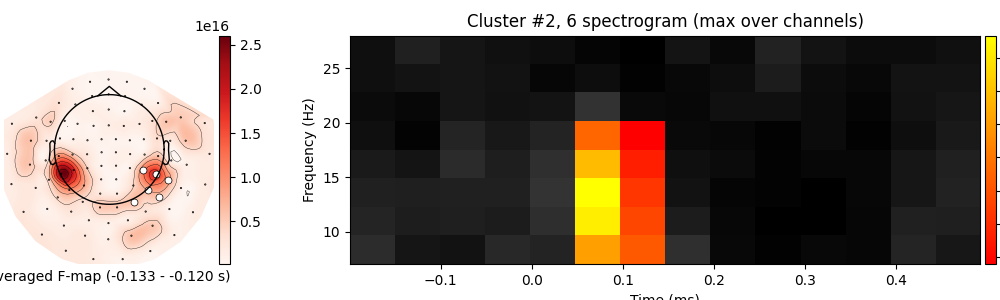

Visualize clusters

# We subselect clusters that we consider significant at an arbitrarily

# picked alpha level: "p_accept".

# NOTE: remember the caveats with respect to "significant" clusters that

# we mentioned in the introduction of this tutorial!

p_accept = 0.01

good_cluster_inds = np.where(p_values < p_accept)[0]

# configure variables for visualization

colors = {"Aud": "crimson", "Vis": "steelblue"}

linestyles = {"L": "-", "R": "--"}

# organize data for plotting

evokeds = {cond: epochs[cond].average() for cond in event_id}

# loop over clusters

for i_clu, clu_idx in enumerate(good_cluster_inds):
    # unpack cluster information, get unique indices
    time_inds, space_inds = np.squeeze(clusters[clu_idx])
    ch_inds = np.unique(space_inds)
    time_inds = np.unique(time_inds)

    # get topography for F stat
    f_map = F_obs[time_inds, ...].mean(axis=0)

    # get signals at the sensors contributing to the cluster
    sig_times = epochs.times[time_inds]

    # create spatial mask
    mask = np.zeros((f_map.shape[0], 1), dtype=bool)
    mask[ch_inds, :] = True

    # initialize figure
    fig, ax_topo = plt.subplots(1, 1, figsize=(10, 3), layout="constrained")

    # plot average test statistic and mark significant sensors
    f_evoked = mne.EvokedArray(f_map[:, np.newaxis], epochs.info, tmin=0)
    f_evoked.plot_topomap(
        times=0,
        mask=mask,
        axes=ax_topo,
        cmap="Reds",
        vlim=(np.min, np.max),
        show=False,
        colorbar=False,
        mask_params=dict(markersize=10),
    )
    image = ax_topo.images[0]

    # remove the title that would otherwise say "0.000 s"
    ax_topo.set_title("")

    # create additional axes (for ERF and colorbar)
    divider = make_axes_locatable(ax_topo)

    # add axes for colorbar
    ax_colorbar = divider.append_axes("right", size="5%", pad=0.05)
    plt.colorbar(image, cax=ax_colorbar)
    ax_topo.set_xlabel(
        "Averaged F-map ({:0.3f} - {:0.3f} s)".format(*sig_times[[0, -1]])
    )

    # add new axis for time courses and plot time courses
    ax_signals = divider.append_axes("right", size="300%", pad=1.2)
    title = f"Cluster #{i_clu + 1}, {len(ch_inds)} sensor"
    if len(ch_inds) > 1:
        title += "s (mean)"
    plot_compare_evokeds(
        evokeds,
        title=title,
        picks=ch_inds,
        axes=ax_signals,
        colors=colors,
        linestyles=linestyles,
        show=False,
        split_legend=True,
        truncate_yaxis="auto",
    )

    # plot temporal cluster extent
    ymin, ymax = ax_signals.get_ylim()
    ax_signals.fill_betweenx(
        (ymin, ymax), sig_times[0], sig_times[-1], color="orange", alpha=0.3
    )

plt.show()

combining channels using RMS (mag channels)
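The index handling at the start of the plotting loop can be tried on a small hand-made cluster. The cluster below and the channel count are hypothetical. The sketch only shows how paired time and sensor indices are reduced to unique values and turned into a spatial mask.

```python
import numpy as np

# A hypothetical cluster: one (time index, sensor index) pair per member point
cluster = (np.array([10, 10, 11, 11, 11, 12]), np.array([3, 4, 3, 4, 5, 4]))

time_inds, space_inds = cluster
ch_inds = np.unique(space_inds)  # sensors that take part at any time
time_inds = np.unique(time_inds)  # time samples covered by the cluster

# Spatial mask over all sensors, in the (n_channels, 1) shape used when
# plotting a single topography
n_channels = 8  # hypothetical
mask = np.zeros((n_channels, 1), dtype=bool)
mask[ch_inds, :] = True
print(ch_inds, time_inds, mask.ravel().astype(int))
```

Note that the mask marks every sensor that belongs to the cluster at some time point, so it shows the spatial extent of the cluster rather than its shape at any single instant.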

Permutation statistic for time-frequencies

Let’s do the same thing with the time-frequency decomposition of the data (see Frequency and time-frequency sensor analysis for a tutorial and Time-frequency on simulated data (Multitaper vs. Morlet vs. Stockwell vs. Hilbert) for a comparison of time-frequency methods) to show how cluster permutations can be done on higher-dimensional data.

decim = 4

freqs = np.arange(7, 30, 3) # define frequencies of interest

n_cycles = freqs / freqs[0]

epochs_power = list()

for condition in [epochs[k] for k in ("Aud/L", "Vis/L")]:
    this_tfr = condition.compute_tfr(
        method="morlet",
        freqs=freqs,
        n_cycles=n_cycles,
        decim=decim,
        average=False,
        return_itc=False,
    )
    this_tfr.apply_baseline(mode="ratio", baseline=(None, 0))
    epochs_power.append(this_tfr.data)

# transpose again to (epochs, frequencies, times, channels)

X = [np.transpose(x, (0, 2, 3, 1)) for x in epochs_power]

Applying baseline correction (mode: ratio)

Applying baseline correction (mode: ratio)

Remember the note on the adjacency matrix from above: for 3D data, as here, we must use mne.stats.combine_adjacency() to extend the sensor-based adjacency to incorporate the time-frequency plane as well. Here, the integer inputs are converted into a lattice and combined with the sensor adjacency matrix so that data at similar times and with similar frequencies and at close sensor locations are clustered together.

# our data at each observation is of shape frequencies × times × channels

tfr_adjacency = combine_adjacency(len(freqs), len(this_tfr.times), adjacency)

Now we can run the cluster permutation test, but first we have to set a threshold. This example decimates in time and uses few frequencies, so we need to increase the threshold from the default value in order to have differentiated clusters (i.e., so that our algorithm doesn’t just find one large cluster). For a more principled method of setting this parameter, threshold-free cluster enhancement may be used. See Statistical inference for a discussion.
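Why a low threshold can merge everything into one large cluster is easy to see on a toy statistic map. The map below is hypothetical (a frequencies × times grid with two peaks joined by a weaker ridge), and `scipy.ndimage.label` stands in for the clustering step. It is not the routine MNE-Python uses.

```python
import numpy as np
from scipy import ndimage

# Hypothetical statistic map on a (frequencies x times) grid
stat_map = np.zeros((5, 12))
stat_map[2, 1:11] = 6.0  # weak ridge connecting the two peaks
stat_map[1:4, 1:4] = 20.0  # first peak
stat_map[1:4, 8:11] = 20.0  # second peak

counts = {}
for threshold in (5.0, 15.0):
    # The default structure of ndimage.label links only direct (lattice)
    # neighbors, i.e. no diagonal connections
    _, n_clusters = ndimage.label(stat_map > threshold)
    counts[threshold] = n_clusters
    print(f"threshold={threshold}: {n_clusters} cluster(s)")
```

At the lower threshold the ridge survives and joins both peaks into a single cluster. At the higher threshold only the peaks remain and they are found as two separate clusters.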

# This time we don't calculate a threshold based on the F distribution.

# We might as well select an arbitrary threshold for cluster forming

tfr_threshold = 15.0

# run cluster based permutation analysis

cluster_stats = spatio_temporal_cluster_test(
    X,
    n_permutations=1000,
    threshold=tfr_threshold,
    tail=1,
    n_jobs=None,
    buffer_size=None,
    adjacency=tfr_adjacency,
)

stat_fun(H1): min=6.752417666025854e-07 max=38.408365559505015

Running initial clustering …

Found 3 clusters


96%|█████████▋| Permuting : 962/999 [00:09<00:00, 127.75it/s]

97%|█████████▋| Permuting : 968/999 [00:09<00:00, 129.74it/s]

97%|█████████▋| Permuting : 974/999 [00:09<00:00, 131.67it/s]

98%|█████████▊| Permuting : 979/999 [00:09<00:00, 131.55it/s]

98%|█████████▊| Permuting : 984/999 [00:09<00:00, 131.46it/s]

99%|█████████▉| Permuting : 990/999 [00:09<00:00, 133.36it/s]

100%|█████████▉| Permuting : 996/999 [00:09<00:00, 135.21it/s]

100%|██████████| Permuting : 999/999 [00:09<00:00, 134.99it/s]

100%|██████████| Permuting : 999/999 [00:09<00:00, 106.92it/s]

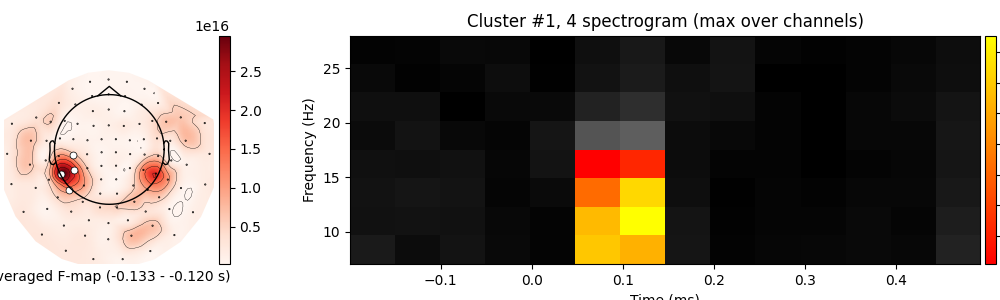

Finally, we can plot our results. Clusters in time-frequency-sensor space are difficult to visualize: spectrograms show the time-frequency plane and topomaps show sensor space, but the two views are hard to combine. We therefore plot a topomap with the cluster's sensors marked in white next to a spectrogram. This is a dimensionally limited view, however. Each sensor has its own significant time-frequency bins, but to display a single spectrogram, every time-frequency bin that is significant for any sensor in the cluster is plotted as significant. This difficulty is inherent to visualizing high-dimensional data and should be kept in mind when interpreting the results.

F_obs, clusters, p_values, _ = cluster_stats

good_cluster_inds = np.where(p_values < p_accept)[0]

for i_clu, clu_idx in enumerate(good_cluster_inds):

    # unpack cluster information, get unique indices

    freq_inds, time_inds, space_inds = clusters[clu_idx]

    ch_inds = np.unique(space_inds)

    time_inds = np.unique(time_inds)

    freq_inds = np.unique(freq_inds)

    # get topography for F stat (average over cluster frequencies, then times)

    f_map = F_obs[freq_inds].mean(axis=0)

    f_map = f_map[time_inds].mean(axis=0)

    # get the time points contributing to the cluster

    sig_times = epochs.times[time_inds]

    # initialize figure

    fig, ax_topo = plt.subplots(1, 1, figsize=(10, 3), layout="constrained")

    # create spatial mask

    mask = np.zeros((f_map.shape[0], 1), dtype=bool)

    mask[ch_inds, :] = True

    # plot average test statistic and mark significant sensors

    f_evoked = mne.EvokedArray(f_map[:, np.newaxis], epochs.info, tmin=0)

    f_evoked.plot_topomap(

        times=0,

        mask=mask,

        axes=ax_topo,

        cmap="Reds",

        vlim=(np.min, np.max),

        show=False,

        colorbar=False,

        mask_params=dict(markersize=10),

    )

    image = ax_topo.images[0]

    # create additional axes (for spectrogram and colorbars)

    divider = make_axes_locatable(ax_topo)

    # add axes for colorbar

    ax_colorbar = divider.append_axes("right", size="5%", pad=0.05)

    plt.colorbar(image, cax=ax_colorbar)

    ax_topo.set_xlabel(

        "Averaged F-map ({:0.3f} - {:0.3f} s)".format(*sig_times[[0, -1]])

    )

    # remove the title that would otherwise say "0.000 s"

    ax_topo.set_title("")

    # add new axis for spectrogram

    ax_spec = divider.append_axes("right", size="300%", pad=1.2)

    title = f"Cluster #{i_clu + 1}, {len(ch_inds)}-channel spectrogram"

    if len(ch_inds) > 1:

        title += " (max over channels)"

    # maximum F value over the cluster's channels, shape (n_freqs, n_times)

    F_obs_plot = F_obs[..., ch_inds].max(axis=-1)

    # copy of the spectrogram that is NaN outside the cluster's time-frequency bins

    F_obs_plot_sig = np.full(F_obs_plot.shape, np.nan)

    F_obs_plot_sig[tuple(np.meshgrid(freq_inds, time_inds))] = F_obs_plot[

        tuple(np.meshgrid(freq_inds, time_inds))

    ]

    # draw the full spectrogram in gray, then overlay the cluster bins in color

    for f_image, cmap in zip([F_obs_plot, F_obs_plot_sig], ["gray", "autumn"]):

        c = ax_spec.imshow(

            f_image,

            cmap=cmap,

            aspect="auto",

            origin="lower",

            extent=[epochs.times[0], epochs.times[-1], freqs[0], freqs[-1]],

        )

    # epochs.times is in seconds, so label the axis accordingly

    ax_spec.set_xlabel("Time (s)")

    ax_spec.set_ylabel("Frequency (Hz)")

    ax_spec.set_title(title)

    # add another colorbar

    ax_colorbar2 = divider.append_axes("right", size="5%", pad=0.05)

    plt.colorbar(c, cax=ax_colorbar2)

    ax_colorbar2.set_ylabel("F-stat")

    # show the figure for this cluster

    plt.show()
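The collapse over sensors described above can be illustrated on a toy array. The sketch below is not part of the original example: the array shape, the two marked bins, and the names `cluster_mask`, `any_sensor`, and `outer` are all made up for illustration. It compares the exact "significant for any sensor" projection with the grid formed from the unique frequency and time indices, which is how the plotting loop appears to build its highlighted region.

```python
import numpy as np

# Hypothetical toy cluster in (freq, time, sensor) space; values are made up.
n_freqs, n_times, n_sensors = 3, 4, 2
cluster_mask = np.zeros((n_freqs, n_times, n_sensors), dtype=bool)
cluster_mask[0, 1, 0] = True  # sensor 0 is significant at freq 0, time 1
cluster_mask[2, 3, 1] = True  # sensor 1 is significant at freq 2, time 3

# Exact projection: a time-frequency bin is marked if any sensor has it.
any_sensor = cluster_mask.any(axis=-1)

# Grid built from the unique frequency and time indices of the cluster,
# mirroring the np.unique + np.meshgrid indexing in the plotting loop.
freq_inds, time_inds, _ = np.nonzero(cluster_mask)
outer = np.zeros((n_freqs, n_times), dtype=bool)
outer[np.ix_(np.unique(freq_inds), np.unique(time_inds))] = True

print("bins marked by any-sensor projection:", int(any_sensor.sum()))
print("bins marked by index grid:", int(outer.sum()))
```

In this toy case the index grid marks every bin of the exact projection plus two extra corner bins, so the highlighted region of a spectrogram built this way is best read as an upper bound on the cluster's time-frequency extent.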

Exercises#

References#

Total running time of the script: (0 minutes 21.212 seconds)