Note

Click here to download the full example code

Morph volumetric source estimate#

This example demonstrates how to morph an individual subject’s

mne.VolSourceEstimate to a common reference space. We achieve this

using mne.SourceMorph. Data will be morphed based on

an affine transformation and a nonlinear registration method

known as Symmetric Diffeomorphic Registration (SDR) by

1.

Transformation is estimated from the subject’s anatomical T1 weighted MRI (brain) to FreeSurfer’s ‘fsaverage’ T1 weighted MRI (brain).

Afterwards the transformation will be applied to the volumetric source estimate. The result will be plotted, showing the fsaverage T1 weighted anatomical MRI, overlaid with the morphed volumetric source estimate.

# Author: Tommy Clausner <tommy.clausner@gmail.com>

#

# License: BSD-3-Clause

import os

import nibabel as nib

import mne

from mne.datasets import sample, fetch_fsaverage

from mne.minimum_norm import apply_inverse, read_inverse_operator

from nilearn.plotting import plot_glass_brain

print(__doc__)

Setup paths

sample_dir_raw = sample.data_path()

sample_dir = os.path.join(sample_dir_raw, 'MEG', 'sample')

subjects_dir = os.path.join(sample_dir_raw, 'subjects')

fname_evoked = os.path.join(sample_dir, 'sample_audvis-ave.fif')

fname_inv = os.path.join(sample_dir, 'sample_audvis-meg-vol-7-meg-inv.fif')

fname_t1_fsaverage = os.path.join(subjects_dir, 'fsaverage', 'mri',

'brain.mgz')

fetch_fsaverage(subjects_dir) # ensure fsaverage src exists

fname_src_fsaverage = subjects_dir + '/fsaverage/bem/fsaverage-vol-5-src.fif'

0 files missing from root.txt in /home/circleci/mne_data/MNE-sample-data/subjects

0 files missing from bem.txt in /home/circleci/mne_data/MNE-sample-data/subjects/fsaverage

Compute example data. For reference see Compute MNE-dSPM inverse solution on evoked data in volume source space.

Load data:

evoked = mne.read_evokeds(fname_evoked, condition=0, baseline=(None, 0))

inverse_operator = read_inverse_operator(fname_inv)

# Apply inverse operator

stc = apply_inverse(evoked, inverse_operator, 1.0 / 3.0 ** 2, "dSPM")

# To save time

stc.crop(0.09, 0.09)

Reading /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis-ave.fif ...

Read a total of 4 projection items:

PCA-v1 (1 x 102) active

PCA-v2 (1 x 102) active

PCA-v3 (1 x 102) active

Average EEG reference (1 x 60) active

Found the data of interest:

t = -199.80 ... 499.49 ms (Left Auditory)

0 CTF compensation matrices available

nave = 55 - aspect type = 100

Projections have already been applied. Setting proj attribute to True.

Applying baseline correction (mode: mean)

Reading inverse operator decomposition from /home/circleci/mne_data/MNE-sample-data/MEG/sample/sample_audvis-meg-vol-7-meg-inv.fif...

Reading inverse operator info...

[done]

Reading inverse operator decomposition...

[done]

305 x 305 full covariance (kind = 1) found.

Read a total of 4 projection items:

PCA-v1 (1 x 102) active

PCA-v2 (1 x 102) active

PCA-v3 (1 x 102) active

Average EEG reference (1 x 60) active

Noise covariance matrix read.

11271 x 11271 diagonal covariance (kind = 2) found.

Source covariance matrix read.

Did not find the desired covariance matrix (kind = 6)

11271 x 11271 diagonal covariance (kind = 5) found.

Depth priors read.

Did not find the desired covariance matrix (kind = 3)

Reading a source space...

[done]

1 source spaces read

Read a total of 4 projection items:

PCA-v1 (1 x 102) active

PCA-v2 (1 x 102) active

PCA-v3 (1 x 102) active

Average EEG reference (1 x 60) active

Source spaces transformed to the inverse solution coordinate frame

Preparing the inverse operator for use...

Scaled noise and source covariance from nave = 1 to nave = 55

Created the regularized inverter

Created an SSP operator (subspace dimension = 3)

Created the whitener using a noise covariance matrix with rank 302 (3 small eigenvalues omitted)

Computing noise-normalization factors (dSPM)...

[done]

Applying inverse operator to "Left Auditory"...

Picked 305 channels from the data

Computing inverse...

Eigenleads need to be weighted ...

Computing residual...

Explained 59.7% variance

Combining the current components...

dSPM...

[done]

Get a SourceMorph object for VolSourceEstimate#

subject_from can typically be inferred from

src,

and subject_to is set to ‘fsaverage’ by default. subjects_dir can be

None when set in the environment. In that case SourceMorph can be initialized

taking src as only argument. See mne.SourceMorph for more

details.

The default parameter setting for zooms will cause the reference volumes to be resliced before computing the transform. A value of ‘5’ would cause the function to reslice to an isotropic voxel size of 5 mm. The higher this value the less accurate but faster the computation will be.

The recommended way to use this is to morph to a specific destination source

space so that different subject_from morphs will go to the same space.`

A standard usage for volumetric data reads:

src_fs = mne.read_source_spaces(fname_src_fsaverage)

morph = mne.compute_source_morph(

inverse_operator['src'], subject_from='sample', subjects_dir=subjects_dir,

niter_affine=[10, 10, 5], niter_sdr=[10, 10, 5], # just for speed

src_to=src_fs, verbose=True)

Reading a source space...

[done]

1 source spaces read

Volume source space(s) present...

Loading /home/circleci/mne_data/MNE-sample-data/subjects/sample/mri/brain.mgz as "from" volume

Loading /home/circleci/mne_data/MNE-sample-data/subjects/fsaverage/mri/brain.mgz as "to" volume

Computing registration...

Reslicing to zooms=(4.999999888241291, 4.999999888241291, 4.999999888241291) for translation ...

Optimizing translation:

Optimizing level 2 [max iter: 10]

Optimizing level 1 [max iter: 10]

Optimizing level 0 [max iter: 5]

Translation: 22.6 mm

R²: 92.5%

Optimizing rigid:

Optimizing level 2 [max iter: 10]

Optimizing level 1 [max iter: 10]

Optimizing level 0 [max iter: 5]

Translation: 22.6 mm

Rotation: 21.0°

R²: 96.5%

Optimizing affine:

Optimizing level 2 [max iter: 10]

Optimizing level 1 [max iter: 10]

Optimizing level 0 [max iter: 5]

R²: 97.0%

Optimizing sdr:

R²: 98.9%

[done]

Apply morph to VolSourceEstimate#

The morph can be applied to the source estimate data, by giving it as the

first argument to the morph.apply() method.

Note

Volumetric morphing is much slower than surface morphing because the

volume for each time point is individually resampled and SDR morphed.

The mne.SourceMorph.compute_vol_morph_mat() method can be used

to compute an equivalent sparse matrix representation by computing the

transformation for each source point individually. This generally takes

a few minutes to compute, but can be

saved to disk and be reused. The

resulting sparse matrix operation is very fast (about 400× faster) to

apply. This approach is more efficient

when the number of time points to be morphed exceeds the number of

source space points, which is generally in the thousands. This can

easily occur when morphing many time points and multiple conditions.

0%| | Time : 0/1 [00:00<?, ?it/s]

100%|##########| Time : 1/1 [00:00<00:00, 2.06it/s]

100%|##########| Time : 1/1 [00:00<00:00, 2.05it/s]

Convert morphed VolSourceEstimate into NIfTI#

We can convert our morphed source estimate into a NIfTI volume using

morph.apply(..., output='nifti1').

# Create mri-resolution volume of results

img_fsaverage = morph.apply(stc, mri_resolution=2, output='nifti1')

0%| | Time : 0/1 [00:00<?, ?it/s]

100%|##########| Time : 1/1 [00:00<00:00, 2.50it/s]

100%|##########| Time : 1/1 [00:00<00:00, 2.50it/s]

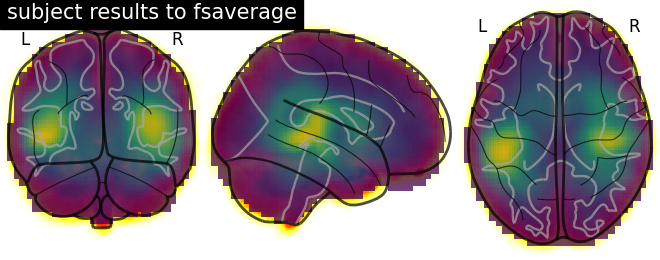

Plot results#

# Load fsaverage anatomical image

t1_fsaverage = nib.load(fname_t1_fsaverage)

# Plot glass brain (change to plot_anat to display an overlaid anatomical T1)

display = plot_glass_brain(t1_fsaverage,

title='subject results to fsaverage',

draw_cross=False,

annotate=True)

# Add functional data as overlay

display.add_overlay(img_fsaverage, alpha=0.75)

Reading and writing SourceMorph from and to disk#

An instance of SourceMorph can be saved, by calling

morph.save.

This methods allows for specification of a filename under which the morph

will be save in “.h5” format. If no file extension is provided, “-morph.h5”

will be appended to the respective defined filename:

>>> morph.save('my-file-name')

Reading a saved source morph can be achieved by using

mne.read_source_morph():

>>> morph = mne.read_source_morph('my-file-name-morph.h5')

Once the environment is set up correctly, no information such as

subject_from or subjects_dir must be provided, since it can be

inferred from the data and used morph to ‘fsaverage’ by default, e.g.:

>>> morph.apply(stc)

References#

- 1

Brian B. Avants, Charles L. Epstein, Murray C. Grossman, and James C. Gee. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Medical Image Analysis, 12(1):26–41, 2008. doi:10.1016/j.media.2007.06.004.

Total running time of the script: ( 0 minutes 27.565 seconds)

Estimated memory usage: 828 MB