Compute and visualize ERDS maps

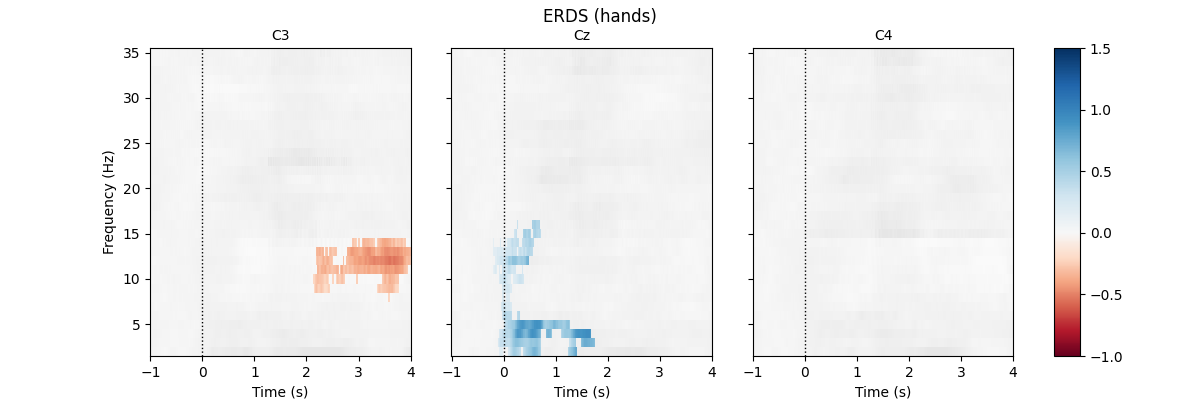

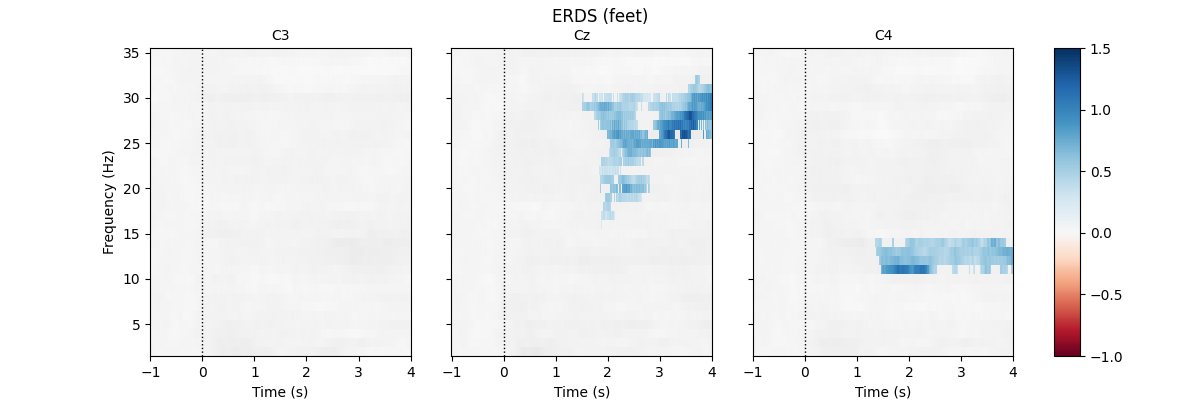

This example calculates and displays ERDS maps of event-related EEG data. ERDS (sometimes also written as ERD/ERS) is short for event-related desynchronization (ERD) and event-related synchronization (ERS) [1]. Conceptually, ERD corresponds to a decrease in power in a specific frequency band relative to a baseline. Similarly, ERS corresponds to an increase in power. An ERDS map is a time/frequency representation of ERD/ERS over a range of frequencies [2]. ERDS maps are also known as ERSP (event-related spectral perturbation) [3].
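To make the definition concrete, here is a minimal sketch in plain Python that expresses power as a relative change from the mean baseline power. The power values and the helper name `erds_percent` are invented for illustration; the formula corresponds to the `mode="percent"` baseline correction used later in this example.

```python
from statistics import mean


def erds_percent(power, baseline_power):
    """Relative power change with respect to the mean baseline power."""
    ref = mean(baseline_power)
    return [(p - ref) / ref for p in power]


baseline_power = [4.0, 4.0, 4.0, 4.0]  # hypothetical band power before the event
task_power = [4.0, 2.0, 1.0, 6.0]      # hypothetical band power after the event

erds = erds_percent(task_power, baseline_power)
print(erds)  # negative values indicate ERD, positive values indicate ERS
```

A value of -0.5 means that power dropped to half of its baseline level (ERD), whereas 0.5 means that it rose by 50% (ERS).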

In this example, we use an EEG BCI data set containing two different motor imagery tasks (imagined hand and feet movement). Our goal is to generate ERDS maps for each of the two tasks.

First, we load the data and create epochs of 5 s length. The data set contains multiple channels, but we will only consider C3, Cz, and C4. We compute maps containing frequencies ranging from 2 to 35 Hz. We map ERD to red and ERS to blue, which is customary in many ERDS publications. Finally, we perform cluster-based permutation tests to identify significant ERDS values (corrected for multiple comparisons within channels).

# Authors: Clemens Brunner <clemens.brunner@gmail.com>
#          Felix Klotzsche <klotzsche@cbs.mpg.de>
#
# License: BSD-3-Clause
# Copyright the MNE-Python contributors.

As usual, we import everything we need.

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
from matplotlib.colors import TwoSlopeNorm

import mne
from mne.datasets import eegbci
from mne.io import concatenate_raws, read_raw_edf
from mne.stats import permutation_cluster_1samp_test as pcluster_test

First, we load and preprocess the data. We use runs 6, 10, and 14 from subject 1 (these runs contain hand and feet motor imagery).

fnames = eegbci.load_data(subjects=1, runs=(6, 10, 14))
raw = concatenate_raws([read_raw_edf(f, preload=True) for f in fnames])
raw.rename_channels(lambda x: x.strip("."))  # remove dots from channel names
# rename descriptions to be more easily interpretable
raw.annotations.rename(dict(T1="hands", T2="feet"))

Extracting EDF parameters from /home/circleci/mne_data/MNE-eegbci-data/files/eegmmidb/1.0.0/S001/S001R06.edf...

Setting channel info structure...

Creating raw.info structure...

Reading 0 ... 19999 = 0.000 ... 124.994 secs...

Extracting EDF parameters from /home/circleci/mne_data/MNE-eegbci-data/files/eegmmidb/1.0.0/S001/S001R10.edf...

Setting channel info structure...

Creating raw.info structure...

Reading 0 ... 19999 = 0.000 ... 124.994 secs...

Extracting EDF parameters from /home/circleci/mne_data/MNE-eegbci-data/files/eegmmidb/1.0.0/S001/S001R14.edf...

Setting channel info structure...

Creating raw.info structure...

Reading 0 ... 19999 = 0.000 ... 124.994 secs...

Now we can create 5-second epochs around events of interest.

Used Annotations descriptions: [np.str_('T0'), np.str_('feet'), np.str_('hands')]

Ignoring annotation durations and creating fixed-duration epochs around annotation onsets.

Not setting metadata

45 matching events found

No baseline correction applied

0 projection items activated

Using data from preloaded Raw for 45 events and 961 original time points ...

0 bad epochs dropped
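The code that creates the epochs is not shown above, although later steps rely on the names `tmin`, `tmax`, `event_ids`, and `epochs`. The following is a sketch of what this step could look like, not necessarily the original code. The window of -1 to 4 s, the 0.5 s of padding on each side, and the numeric values in `event_ids` are assumptions; they are chosen so that the epoch length matches the 961 time points reported in the log at a sampling rate of 160 Hz (20000 samples in 125 s). The helper `make_epochs` is hypothetical and assumes a recent MNE version in which `mne.Epochs` can derive events from annotations.

```python
tmin, tmax = -1, 4  # assumed analysis window in seconds
pad = 0.5           # assumed padding on each side of the window
event_ids = dict(hands=2, feet=3)  # assumed mapping; the loop below only uses the keys
sfreq = 160.0       # sampling rate implied by the loading log


def make_epochs(raw):
    """Cut padded epochs for C3, Cz, and C4 around the hand and feet events."""
    import mne  # imported lazily so that the arithmetic below runs without MNE

    return mne.Epochs(
        raw,
        event_id=["hands", "feet"],
        tmin=tmin - pad,
        tmax=tmax + pad,
        picks=("C3", "Cz", "C4"),
        baseline=None,
        preload=True,
    )


# number of samples in one padded epoch (both end points included)
n_times = round((tmax + pad - (tmin - pad)) * sfreq) + 1
print(n_times)
```

With these assumptions, `epochs = make_epochs(raw)` would provide the `epochs` object used below, and cropping the time-frequency data to `tmin` and `tmax` afterwards would remove the padded edges.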

Here we set suitable values for computing ERDS maps. Note especially the cnorm variable, which sets up an asymmetric colormap where the middle color is mapped to zero, even though zero is not the middle value of the colormap range. This does two things: it ensures that zero values will be plotted in white (given that below we select the RdBu colormap), and it makes synchronization and desynchronization look equally prominent in the plots, even though their extreme values are of different magnitudes.

freqs = np.arange(2, 36)  # frequencies from 2-35Hz
vmin, vmax = -1, 1.5  # set min and max ERDS values in plot
baseline = (-1, 0)  # baseline interval (in s)
cnorm = TwoSlopeNorm(vmin=vmin, vcenter=0, vmax=vmax)  # min, center & max ERDS
kwargs = dict(
    n_permutations=100, step_down_p=0.05, seed=1, buffer_size=None, out_type="mask"
)  # for cluster test
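To illustrate what the asymmetric normalization does, the sketch below reimplements the idea in plain Python: values below the center and values above it are scaled linearly with different slopes, so that the center always lands in the middle of the colormap. The function `two_slope` is a hypothetical stand-in written for this illustration, not matplotlib's implementation, and it does not clip values outside the range.

```python
def two_slope(value, vmin=-1.0, vcenter=0.0, vmax=1.5):
    """Map value to [0, 1] piecewise linearly, with vcenter pinned at 0.5."""
    if value <= vcenter:
        return 0.5 * (value - vmin) / (vcenter - vmin)
    return 0.5 + 0.5 * (value - vcenter) / (vmax - vcenter)


for v in (-1.0, -0.5, 0.0, 0.75, 1.5):
    print(v, two_slope(v))
```

An ERDS value of -0.5 and a value of 0.75 end up equally far from the middle color, which is why ERD and ERS look equally prominent even though their extremes differ.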

Finally, we perform time/frequency decomposition over all epochs.

tfr = epochs.compute_tfr(
    method="multitaper",
    freqs=freqs,
    n_cycles=freqs,
    use_fft=True,
    return_itc=False,
    average=False,
    decim=2,
)
tfr.crop(tmin, tmax).apply_baseline(baseline, mode="percent")
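Two of the settings above are worth unpacking. For the multitaper method, the length of the analysis window at each frequency is the number of cycles divided by the frequency, so `n_cycles=freqs` gives a constant window length. In addition, `decim=2` halves the temporal resolution of the result. The sketch below works this out in plain Python; the sampling rate of 160 Hz is an assumption inferred from the loading log (20000 samples in 125 s).

```python
freqs = list(range(2, 36))  # 2, 3, ..., 35 Hz, as in the example
n_cycles = freqs            # one cycle count per frequency

# window length in seconds for each frequency: n_cycles / frequency
window_s = [n / f for n, f in zip(n_cycles, freqs)]

sfreq = 160.0               # assumed sampling rate of the raw data
decim = 2
sfreq_tfr = sfreq / decim   # sampling rate of the decimated TFR

print(set(window_s), sfreq_tfr)
```

A fixed window length keeps the temporal smoothing the same across all frequencies.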

for event in event_ids:
    # select desired epochs for visualization
    tfr_ev = tfr[event]
    fig, axes = plt.subplots(
        1, 4, figsize=(12, 4), gridspec_kw={"width_ratios": [10, 10, 10, 1]}
    )
    for ch, ax in enumerate(axes[:-1]):  # for each channel
        # positive clusters
        _, c1, p1, _ = pcluster_test(tfr_ev.data[:, ch], tail=1, **kwargs)
        # negative clusters
        _, c2, p2, _ = pcluster_test(tfr_ev.data[:, ch], tail=-1, **kwargs)
        # note that we keep clusters with p <= 0.05 from the combined clusters
        # of two independent tests; in this example, we do not correct for
        # these two comparisons
        c = np.stack(c1 + c2, axis=2)  # combined clusters
        p = np.concatenate((p1, p2))  # combined p-values
        mask = c[..., p <= 0.05].any(axis=-1)
        # plot TFR (ERDS map with masking)
        tfr_ev.average().plot(
            [ch],
            cmap="RdBu",
            cnorm=cnorm,
            axes=ax,
            colorbar=False,
            show=False,
            mask=mask,
            mask_style="mask",
        )
        ax.set_title(epochs.ch_names[ch], fontsize=10)
        ax.axvline(0, linewidth=1, color="black", linestyle=":")  # event
        if ch != 0:
            ax.set_ylabel("")
            ax.set_yticklabels("")
    fig.colorbar(axes[0].images[-1], cax=axes[-1]).ax.set_yscale("linear")
    fig.suptitle(f"ERDS ({event})")
    plt.show()
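The mask construction in the loop can be hard to read at first. With `out_type="mask"`, each cluster is a boolean array over frequencies and times; the loop concatenates the clusters from both tests and marks every point that belongs to at least one cluster with p <= 0.05. The sketch below reproduces this logic with nested lists on a tiny 2 x 3 grid. The clusters, the p-values, and the helper `combine` are made up for illustration.

```python
# two hypothetical clusters on a 2 x 3 (frequency x time) grid, as boolean masks
c1 = [[[True, False, False], [False, False, False]]]  # from the positive test
c2 = [[[False, False, False], [False, True, True]]]   # from the negative test
p1, p2 = [0.20], [0.01]                               # hypothetical cluster p-values

clusters = c1 + c2  # list concatenation, like `c1 + c2` in the loop
p_values = p1 + p2


def combine(clusters, p_values, alpha=0.05):
    """Mark each point that lies in at least one cluster with p <= alpha."""
    n_rows, n_cols = len(clusters[0]), len(clusters[0][0])
    keep = [c for c, p in zip(clusters, p_values) if p <= alpha]
    return [[any(c[i][j] for c in keep) for j in range(n_cols)] for i in range(n_rows)]


mask = combine(clusters, p_values)
print(mask)
```

Only the second cluster survives the threshold here, so the resulting mask shows just its two points.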

Applying baseline correction (mode: percent)

Using a threshold of 1.724718

stat_fun(H1): min=-8.559572924066778 max=3.1802691358477366

Running initial clustering …

Found 78 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Using a threshold of -1.724718

stat_fun(H1): min=-8.559572924066778 max=3.1802691358477366

Running initial clustering …

Found 71 clusters

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

No baseline correction applied

Using a threshold of 1.724718

stat_fun(H1): min=-4.519473510988147 max=3.6995798232707275

Running initial clustering …

Found 83 clusters

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Using a threshold of -1.724718

stat_fun(H1): min=-4.519473510988147 max=3.6995798232707275

Running initial clustering …

Found 56 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

No baseline correction applied

Using a threshold of 1.724718

stat_fun(H1): min=-6.4592553718811 max=3.334719139698971

Running initial clustering …

Found 61 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Using a threshold of -1.724718

stat_fun(H1): min=-6.4592553718811 max=3.334719139698971

Running initial clustering …

Found 64 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

No baseline correction applied

Using a threshold of 1.713872

stat_fun(H1): min=-3.6760366758717375 max=3.3443493979849728

Running initial clustering …

Found 70 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

Using a threshold of -1.713872

stat_fun(H1): min=-3.6760366758717375 max=3.3443493979849728

Running initial clustering …

Found 77 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

No baseline correction applied

Using a threshold of 1.713872

stat_fun(H1): min=-4.956834187075391 max=5.467989936693932

Running initial clustering …

Found 100 clusters

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Using a threshold of -1.713872

stat_fun(H1): min=-4.956834187075391 max=5.467989936693932

Running initial clustering …

Found 66 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

No baseline correction applied

Using a threshold of 1.713872

stat_fun(H1): min=-5.954856152984689 max=4.091828652270905

Running initial clustering …

Found 93 clusters

Step-down-in-jumps iteration #1 found 1 cluster to exclude from subsequent iterations

Step-down-in-jumps iteration #2 found 0 additional clusters to exclude from subsequent iterations

Using a threshold of -1.713872

stat_fun(H1): min=-5.954856152984689 max=4.091828652270905

Running initial clustering …

Found 53 clusters

Step-down-in-jumps iteration #1 found 0 clusters to exclude from subsequent iterations

No baseline correction applied

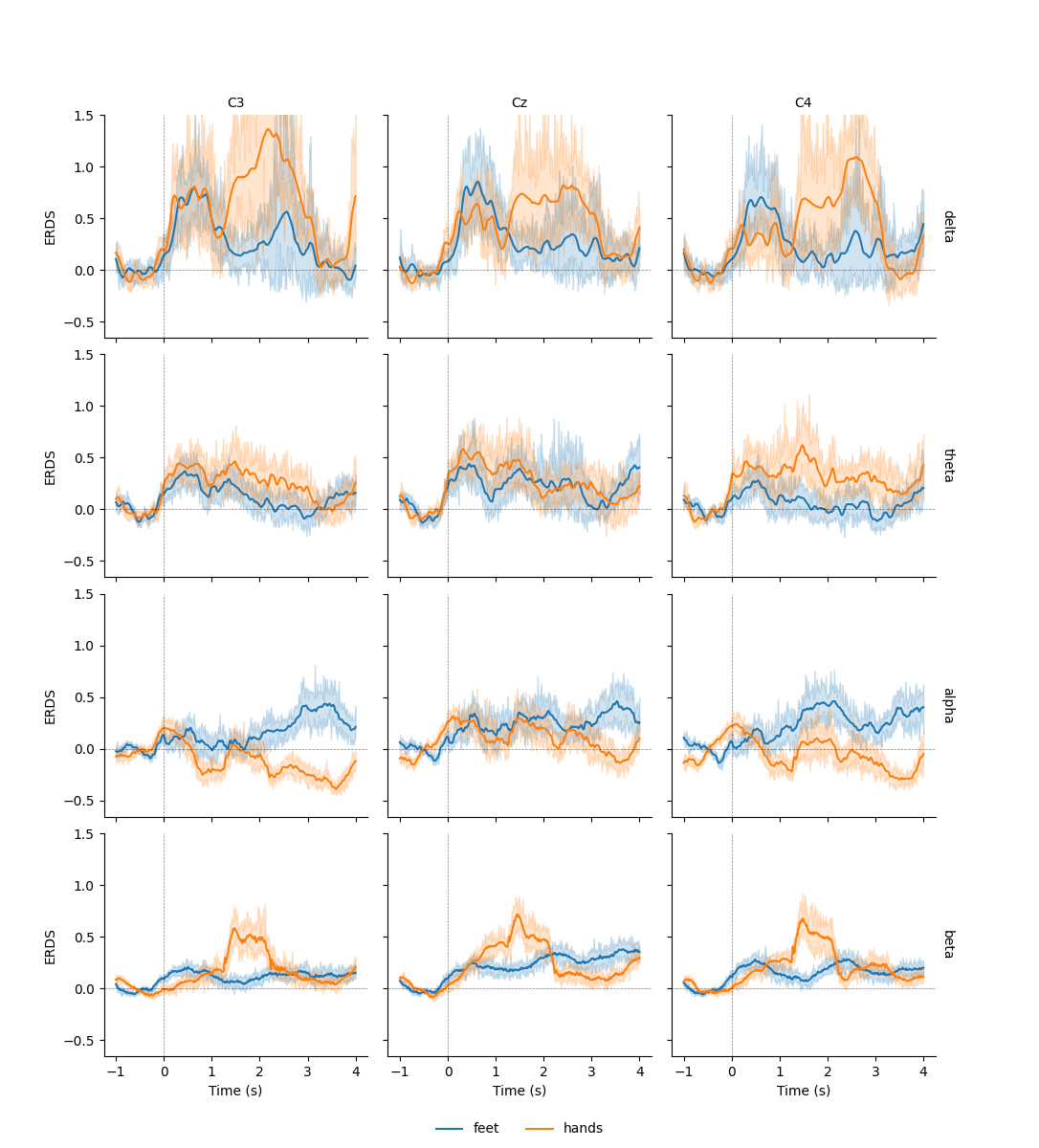
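The one-sample permutation tests used above build their null distribution by randomly flipping the signs of the observations. The sketch below shows this idea for a single value per epoch in plain Python, leaving out the clustering step entirely. The data and the helpers `t_stat` and `sign_flip_p` are hypothetical and only meant to show the principle, not MNE's implementation.

```python
import random
from statistics import mean, stdev


def t_stat(x):
    """One-sample t statistic against zero."""
    return mean(x) / (stdev(x) / len(x) ** 0.5)


def sign_flip_p(x, n_permutations=100, seed=1):
    """Upper-tail p-value; the observed data counts as one permutation."""
    rng = random.Random(seed)
    observed = t_stat(x)
    count = 1  # the observed statistic itself
    for _ in range(n_permutations - 1):
        flipped = [v * rng.choice((-1, 1)) for v in x]
        if t_stat(flipped) >= observed:
            count += 1
    return count / n_permutations


values = [0.5 + 0.05 * i for i in range(20)]  # hypothetical, clearly positive
p = sign_flip_p(values)
print(p)
```

Because the observed data counts as one of the permutations, 100 permutations cannot produce a p-value smaller than 1/100. This matches the progress output of the tests, which run 99 random permutations each.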

Similar to Epochs objects, we can also export data from EpochsTFR and AverageTFR objects to a Pandas DataFrame. By default, the time column of the exported data frame is in milliseconds. Here, to be consistent with the time-frequency plots, we want to keep it in seconds, which we can achieve by setting time_format=None:

df = tfr.to_data_frame(time_format=None)

df.head()

This allows us to use additional plotting functions like seaborn.lineplot() to plot confidence bands:

df = tfr.to_data_frame(time_format=None, long_format=True)
# Map to frequency bands:
freq_bounds = {"_": 0, "delta": 3, "theta": 7, "alpha": 13, "beta": 35, "gamma": 140}
df["band"] = pd.cut(
    df["freq"], list(freq_bounds.values()), labels=list(freq_bounds)[1:]
)
# Filter to retain only relevant frequency bands:
freq_bands_of_interest = ["delta", "theta", "alpha", "beta"]
df = df[df.band.isin(freq_bands_of_interest)]
df["band"] = df["band"].cat.remove_unused_categories()
# Order channels for plotting:
df["channel"] = df["channel"].cat.reorder_categories(("C3", "Cz", "C4"), ordered=True)
g = sns.FacetGrid(df, row="band", col="channel", margin_titles=True)
g.map(sns.lineplot, "time", "value", "condition", n_boot=10)
axline_kw = dict(color="black", linestyle="dashed", linewidth=0.5, alpha=0.5)
g.map(plt.axhline, y=0, **axline_kw)
g.map(plt.axvline, x=0, **axline_kw)
g.set(ylim=(None, 1.5))
g.set_axis_labels("Time (s)", "ERDS")
g.set_titles(col_template="{col_name}", row_template="{row_name}")
g.add_legend(ncol=2, loc="lower center")
g.fig.subplots_adjust(left=0.1, right=0.9, top=0.9, bottom=0.08)

Converting "condition" to "category"...

Converting "epoch" to "category"...

Converting "channel" to "category"...

Converting "ch_type" to "category"...

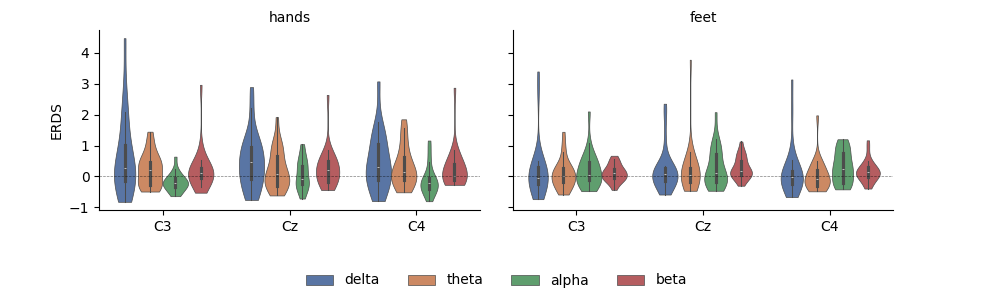
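The band assignment above relies on the default behavior of `pd.cut`, which treats each bin as open on the left and closed on the right, so a frequency of exactly 7 Hz counts as theta and 8 Hz as alpha. The sketch below mimics this binning with the standard library to make the boundaries explicit; the helper `band_of` is a hypothetical stand-in for illustration.

```python
from bisect import bisect_left

freq_bounds = {"_": 0, "delta": 3, "theta": 7, "alpha": 13, "beta": 35, "gamma": 140}
edges = list(freq_bounds.values())
labels = list(freq_bounds)[1:]


def band_of(freq):
    """Return the band whose right-closed interval (low, high] contains freq."""
    idx = bisect_left(edges, freq) - 1
    if 0 <= idx < len(labels):
        return labels[idx]
    return None  # outside all bins


for f in (2, 3, 4, 7, 8, 13, 14, 35):
    print(f, band_of(f))
```

Since the analysis only covers 2 to 35 Hz, no frequency falls into the gamma bin, which is why unused categories are removed afterwards.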

Having the data as a DataFrame also facilitates subsetting, grouping, and other transforms. Here, we use seaborn to plot the average ERDS in the motor imagery interval as a function of frequency band and imagery condition:

df_mean = (
    df.query("time > 1")
    .groupby(["condition", "epoch", "band", "channel"], observed=False)[["value"]]
    .mean()
    .reset_index()
)
g = sns.FacetGrid(
    df_mean, col="condition", col_order=["hands", "feet"], margin_titles=True
)
g = g.map(
    sns.violinplot,
    "channel",
    "value",
    "band",
    cut=0,
    palette="deep",
    order=["C3", "Cz", "C4"],
    hue_order=freq_bands_of_interest,
    linewidth=0.5,
).add_legend(ncol=4, loc="lower center")
g.map(plt.axhline, **axline_kw)
g.set_axis_labels("", "ERDS")
g.set_titles(col_template="{col_name}", row_template="{row_name}")
g.fig.subplots_adjust(left=0.1, right=0.9, top=0.9, bottom=0.3)
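The aggregation step above first keeps only samples later than 1 s and then averages the remaining values within each group. The sketch below shows the same filter-then-average pattern in plain Python on a handful of invented records. For brevity it groups by condition, band, and channel only, whereas the example also groups by epoch.

```python
from collections import defaultdict
from statistics import mean

rows = [  # hypothetical records: (condition, band, channel, time, value)
    ("hands", "alpha", "C3", 0.5, -0.1),
    ("hands", "alpha", "C3", 1.5, -0.4),
    ("hands", "alpha", "C3", 2.5, -0.6),
    ("feet", "alpha", "C3", 1.5, 0.2),
    ("feet", "alpha", "C3", 2.5, 0.4),
]

groups = defaultdict(list)
for condition, band, channel, time, value in rows:
    if time > 1:  # keep only the motor imagery interval
        groups[(condition, band, channel)].append(value)

means = {key: mean(vals) for key, vals in groups.items()}
print(means)
```

The record at 0.5 s is dropped by the filter, so it does not contribute to the average of its group.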

References

Total running time of the script: (0 minutes 32.176 seconds)