Receptive Field Estimation and Prediction#

This example reproduces figures from Lalor et al.’s mTRF toolbox in MATLAB [1]. We will show how the mne.decoding.ReceptiveField class can perform a similar function along with scikit-learn. We will first fit a linear encoding model using the continuously varying speech envelope to predict the activity of a 128-channel EEG system. Then, we will take the reverse approach and try to predict the speech envelope from the EEG (known in the literature as a decoding model, or simply stimulus reconstruction).

# Authors: Chris Holdgraf <choldgraf@gmail.com>

# Eric Larson <larson.eric.d@gmail.com>

# Nicolas Barascud <nicolas.barascud@ens.fr>

#

# License: BSD-3-Clause

# Copyright the MNE-Python contributors.

from os.path import join

import matplotlib.pyplot as plt

import numpy as np

from scipy.io import loadmat

from sklearn.model_selection import KFold

from sklearn.preprocessing import scale

import mne

from mne.decoding import ReceptiveField

Load the data from the publication#

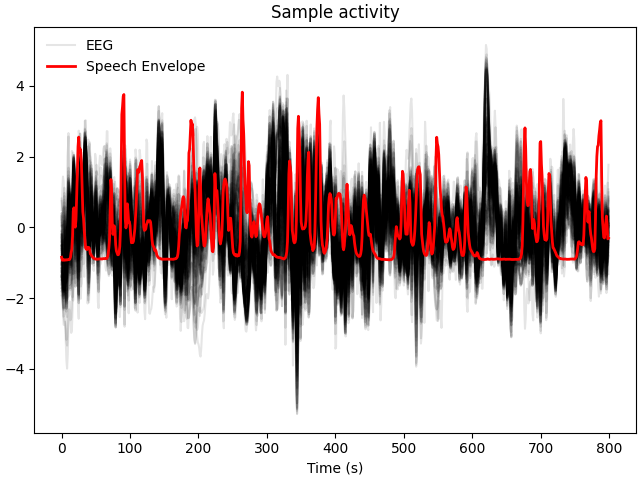

First we will load the data collected in [1]. In this experiment subjects listened to natural speech. Raw EEG and the speech stimulus are provided. We will load these below, downsampling the data in order to speed up computation since we know that our features are primarily low-frequency in nature. Then we’ll visualize both the EEG and speech envelope.

path = mne.datasets.mtrf.data_path()

decim = 2

data = loadmat(join(path, "speech_data.mat"))

raw = data["EEG"].T

speech = data["envelope"].T

sfreq = float(data["Fs"].item())

sfreq /= decim

speech = mne.filter.resample(speech, down=decim, method="polyphase")

raw = mne.filter.resample(raw, down=decim, method="polyphase")

# Read in channel positions and create our MNE objects from the raw data

montage = mne.channels.make_standard_montage("biosemi128")

info = mne.create_info(montage.ch_names, sfreq, "eeg").set_montage(montage)

raw = mne.io.RawArray(raw, info)

n_channels = len(raw.ch_names)

# Plot a sample of brain and stimulus activity

fig, ax = plt.subplots(layout="constrained")

lns = ax.plot(scale(raw[:, :800][0].T), color="k", alpha=0.1)

ln1 = ax.plot(scale(speech[0, :800]), color="r", lw=2)

ax.legend([lns[0], ln1[0]], ["EEG", "Speech Envelope"], frameon=False)

ax.set(title="Sample activity", xlabel="Time (samples)")

Polyphase resampling neighborhood: ±2 input samples

Polyphase resampling neighborhood: ±2 input samples

Creating RawArray with float64 data, n_channels=128, n_times=7677

Range : 0 ... 7676 = 0.000 ... 119.938 secs

Ready.

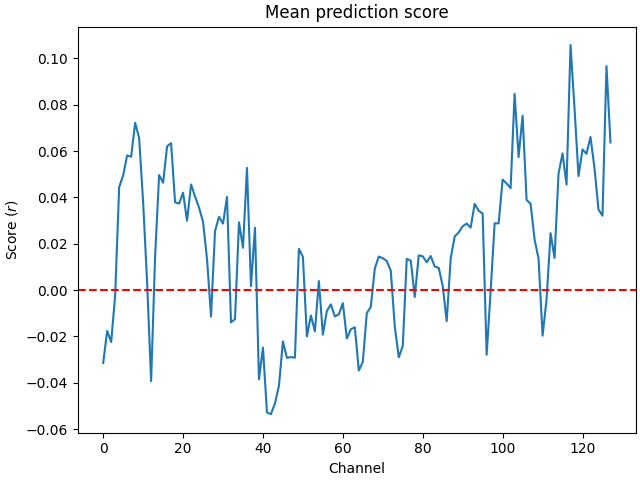
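The downsampling step above can be illustrated in isolation. The following is a minimal sketch, not the code MNE runs internally: it assumes an original sampling rate of 128 Hz (inferred from the 64 Hz rate implied by the log output after decimating by 2) and applies `scipy.signal.resample_poly` to a synthetic 3 Hz sine that stands in for a slowly varying envelope. The names `fs_orig`, `slow`, and `slow_ds` are illustrative only.

```python
import numpy as np
from scipy.signal import resample_poly

fs_orig = 128.0  # assumed original sampling rate (Hz)
decim = 2
t = np.arange(1000) / fs_orig
slow = np.sin(2 * np.pi * 3.0 * t)  # 3 Hz stand-in for a slow envelope

# Polyphase resampling low-pass filters, then keeps every `decim`-th sample
slow_ds = resample_poly(slow, up=1, down=decim)
fs_new = fs_orig / decim

# Compare against plain subsampling, ignoring samples near the edges
interior = slice(25, -25)
r = np.corrcoef(slow_ds[interior], slow[::decim][interior])[0, 1]
print(f"{len(slow)} samples at {fs_orig} Hz -> {len(slow_ds)} samples at {fs_new} Hz")
print(f"correlation with the original waveform: {r:.3f}")
```

Because the signal sits far below the new Nyquist frequency, halving the sampling rate leaves its shape essentially unchanged while halving the number of samples the model has to process.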

Create and fit a receptive field model#

We will construct an encoding model to find the linear relationship between a time-delayed version of the speech envelope and the EEG signal. This allows us to make predictions about the response to new stimuli.

# Define the delays that we will use in the receptive field

tmin, tmax = -0.2, 0.4

# Initialize the model

rf = ReceptiveField(

tmin, tmax, sfreq, feature_names=["envelope"], estimator=1.0, scoring="corrcoef"

)

# We'll have (tmax - tmin) * sfreq delays

# and an extra 2 delays since we are inclusive on the beginning / end index

n_delays = int((tmax - tmin) * sfreq) + 2

n_splits = 3

cv = KFold(n_splits)

# Prepare model data (make time the first dimension)

speech = speech.T

Y, _ = raw[:] # Outputs for the model

Y = Y.T

# Iterate through splits, fit the model, and predict/test on held-out data

coefs = np.zeros((n_splits, n_channels, n_delays))

scores = np.zeros((n_splits, n_channels))

for ii, (train, test) in enumerate(cv.split(speech)):

    print(f"split {ii + 1} / {n_splits}")

    rf.fit(speech[train], Y[train])

    scores[ii] = rf.score(speech[test], Y[test])

    # coef_ is shape (n_outputs, n_features, n_delays). We only have 1 feature

    coefs[ii] = rf.coef_[:, 0, :]

times = rf.delays_ / float(rf.sfreq)

# Average scores and coefficients across CV splits

mean_coefs = coefs.mean(axis=0)

mean_scores = scores.mean(axis=0)

# Plot mean prediction scores across all channels

fig, ax = plt.subplots(layout="constrained")

ix_chs = np.arange(n_channels)

ax.plot(ix_chs, mean_scores)

ax.axhline(0, ls="--", color="r")

ax.set(title="Mean prediction score", xlabel="Channel", ylabel="Score ($r$)")

split 1 / 3

Fitting 1 epochs, 1 channels

split 2 / 3

Fitting 1 epochs, 1 channels

split 3 / 3

Fitting 1 epochs, 1 channels

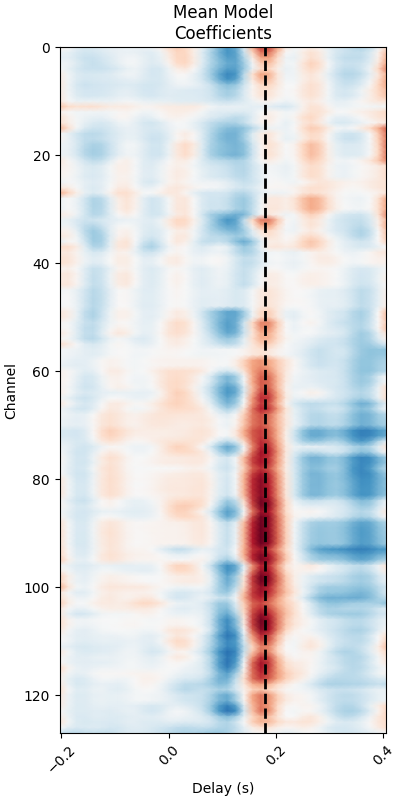

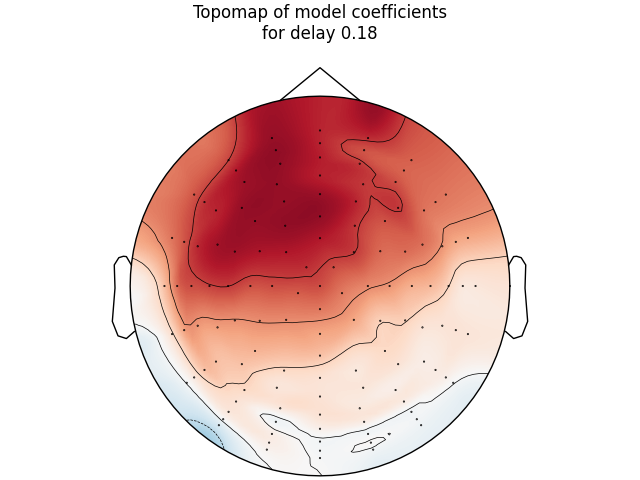
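To make the idea of a time-delayed linear model concrete, here is a minimal NumPy sketch of an encoding model on synthetic data. It is not how ReceptiveField is implemented; the helpers `lag_matrix` and `ridge_fit`, the 64 Hz rate, and the simulated 5-sample response delay are all assumptions made for illustration.

```python
import numpy as np


def lag_matrix(x, delays):
    """Stack time-shifted copies of a 1-D signal as columns.

    Column j holds x delayed by delays[j] samples; samples shifted in from
    outside the signal are set to zero.
    """
    n_times = len(x)
    X = np.zeros((n_times, len(delays)))
    for j, d in enumerate(delays):
        if d > 0:
            X[d:, j] = x[: n_times - d]
        elif d < 0:
            X[:d, j] = x[-d:]
        else:
            X[:, j] = x
    return X


def ridge_fit(X, y, alpha):
    """Closed-form ridge solution: (X'X + alpha * I)^-1 X'y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


rng = np.random.default_rng(0)
sfreq_demo = 64.0  # assumed sampling rate (Hz)
delays = np.arange(int(round(-0.2 * sfreq_demo)), int(round(0.4 * sfreq_demo)) + 1)
stim = rng.standard_normal(2000)

# Simulate a response that is the stimulus delayed by 5 samples plus noise
true_delay = 5
resp = np.zeros_like(stim)
resp[true_delay:] = stim[:-true_delay]
resp += 0.1 * rng.standard_normal(len(stim))

X_lagged = lag_matrix(stim, delays)
weights = ridge_fit(X_lagged, resp, alpha=1.0)
best_delay = delays[np.argmax(weights)]
print(f"{len(delays)} delays, largest weight at delay {best_delay} samples")
```

The fitted weights form a one-channel receptive field: they are close to zero everywhere except at the delay used to simulate the response.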

Investigate model coefficients#

Next, we will look at how the linear coefficients (sometimes referred to as beta values) are distributed across time delays as well as across the scalp. We will recreate Figures 1 and 2 from [1].

# Plot mean coefficients across all time delays / channels (see Fig 1)

time_plot = 0.180 # For highlighting a specific time.

fig, ax = plt.subplots(figsize=(4, 8), layout="constrained")

max_coef = mean_coefs.max()

ax.pcolormesh(

times,

ix_chs,

mean_coefs,

cmap="RdBu_r",

vmin=-max_coef,

vmax=max_coef,

shading="gouraud",

)

ax.axvline(time_plot, ls="--", color="k", lw=2)

ax.set(

xlabel="Delay (s)",

ylabel="Channel",

title="Mean Model\nCoefficients",

xlim=times[[0, -1]],

ylim=[len(ix_chs) - 1, 0],

xticks=np.arange(tmin, tmax + 0.2, 0.2),

)

plt.setp(ax.get_xticklabels(), rotation=45)

# Make a topographic map of coefficients for a given delay (see Fig 2C)

ix_plot = np.argmin(np.abs(time_plot - times))

fig, ax = plt.subplots(layout="constrained")

mne.viz.plot_topomap(

mean_coefs[:, ix_plot], pos=info, axes=ax, show=False, vlim=(-max_coef, max_coef)

)

ax.set(title=f"Topomap of model coefficients\nfor delay {time_plot}")

Create and fit a stimulus reconstruction model#

We will now demonstrate another use case for the mne.decoding.ReceptiveField class as we try to predict the stimulus activity from the EEG data. This is known in the literature as a decoding, or stimulus reconstruction, model [1].

A decoding model aims to find the relationship between the speech signal and a time-delayed version of the EEG. This can be useful as we exploit all of the available neural data in a multivariate context, compared to the encoding case, which treats each M/EEG channel as an independent feature. Therefore, decoding models might provide a better quality of fit (at the expense of not controlling for stimulus covariance), especially for low-SNR stimuli such as speech.

# We use the same lags as in [1]. Negative lags now

# index the relationship

# between the neural response and the speech envelope earlier in time, whereas

# positive lags would index how a unit change in the amplitude of the EEG would

# affect later stimulus activity (obviously this should have an amplitude of

# zero).

tmin, tmax = -0.2, 0.0

# Initialize the model. Here the features are the EEG data. We also specify

# ``patterns=True`` to compute inverse-transformed coefficients during model

# fitting (cf. next section and [2]).

# We'll use a ridge regression estimator with an alpha value similar to

# Crosse et al.

sr = ReceptiveField(

tmin,

tmax,

sfreq,

feature_names=raw.ch_names,

estimator=1e4,

scoring="corrcoef",

patterns=True,

)

# We'll have (tmax - tmin) * sfreq delays

# and an extra 2 delays since we are inclusive on the beginning / end index

n_delays = int((tmax - tmin) * sfreq) + 2

n_splits = 3

cv = KFold(n_splits)

# Iterate through splits, fit the model, and predict/test on held-out data

coefs = np.zeros((n_splits, n_channels, n_delays))

patterns = coefs.copy()

scores = np.zeros((n_splits,))

for ii, (train, test) in enumerate(cv.split(speech)):

    print(f"split {ii + 1} / {n_splits}")

    sr.fit(Y[train], speech[train])

    scores[ii] = sr.score(Y[test], speech[test])[0]

    # coef_ is shape (n_outputs, n_features, n_delays). We have 128 features

    coefs[ii] = sr.coef_[0, :, :]

    patterns[ii] = sr.patterns_[0, :, :]

times = sr.delays_ / float(sr.sfreq)

# Average scores and coefficients across CV splits

mean_coefs = coefs.mean(axis=0)

mean_patterns = patterns.mean(axis=0)

mean_scores = scores.mean(axis=0)

max_coef = np.abs(mean_coefs).max()

max_patterns = np.abs(mean_patterns).max()

split 1 / 3

Fitting 1 epochs, 128 channels

split 2 / 3

Fitting 1 epochs, 128 channels

split 3 / 3

Fitting 1 epochs, 128 channels

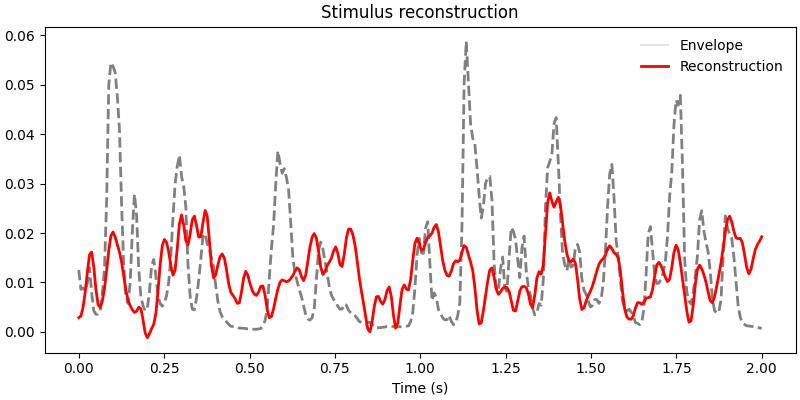
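The claim that a decoder benefits from pooling channels can be checked on synthetic data. The sketch below is an illustration under stated assumptions, not the ReceptiveField implementation: the four-channel "EEG" is simulated as the stimulus delayed by 3 samples plus independent noise, and `lagged` and `ridge_predict` are hypothetical helpers. Negative delays mean that the EEG at later times is used to reconstruct the stimulus at earlier times.

```python
import numpy as np


def lagged(data, delays):
    """Return a matrix whose block for delay d holds data[t - d] at row t."""
    n_times, n_ch = data.shape
    out = np.zeros((n_times, n_ch * len(delays)))
    for j, d in enumerate(delays):
        block = out[:, j * n_ch : (j + 1) * n_ch]  # view into `out`
        if d < 0:
            block[:d] = data[-d:]
        elif d > 0:
            block[d:] = data[:-d]
        else:
            block[:] = data
    return out


def ridge_predict(X_train, y_train, X_test, alpha=1.0):
    """Fit closed-form ridge on the training data and predict the test data."""
    gram = X_train.T @ X_train + alpha * np.eye(X_train.shape[1])
    weights = np.linalg.solve(gram, X_train.T @ y_train)
    return X_test @ weights


rng = np.random.default_rng(0)
n_times, n_ch, lag = 4000, 4, 3
stim_demo = rng.standard_normal(n_times)

# Simulated EEG: every channel sees the delayed stimulus plus its own noise
eeg_demo = np.zeros((n_times, n_ch))
eeg_demo[lag:] = stim_demo[:-lag, np.newaxis]
eeg_demo += 2.0 * rng.standard_normal(eeg_demo.shape)

delays = np.arange(-6, 1)  # EEG from 0 to 6 samples after the stimulus
half = n_times // 2
X_all = lagged(eeg_demo, delays)
X_one = lagged(eeg_demo[:, :1], delays)

pred_all = ridge_predict(X_all[:half], stim_demo[:half], X_all[half:])
pred_one = ridge_predict(X_one[:half], stim_demo[:half], X_one[half:])
r_all = np.corrcoef(pred_all, stim_demo[half:])[0, 1]
r_one = np.corrcoef(pred_one, stim_demo[half:])[0, 1]
print(f"held-out r, all channels: {r_all:.2f}; single channel: {r_one:.2f}")
```

Because the noise is independent across the simulated channels, combining them averages it out, so the multichannel reconstruction correlates more strongly with the held-out stimulus than the single-channel one.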

Visualize stimulus reconstruction#

To get a sense of our model performance, we can plot the actual and predicted stimulus envelopes side by side.

y_pred = sr.predict(Y[test])

time = np.arange(5 * int(sfreq)) / sfreq  # first 5 seconds, in seconds

fig, ax = plt.subplots(figsize=(8, 4), layout="constrained")

ln_env = ax.plot(

time, speech[test][sr.valid_samples_][: int(5 * sfreq)], color="grey", lw=2, ls="--"

)

ln_rec = ax.plot(time, y_pred[sr.valid_samples_][: int(5 * sfreq)], color="r", lw=2)

# Use the handles of the lines drawn on this axis for the legend

ax.legend([ln_env[0], ln_rec[0]], ["Envelope", "Reconstruction"], frameon=False)

ax.set(title="Stimulus reconstruction")

ax.set_xlabel("Time (s)")

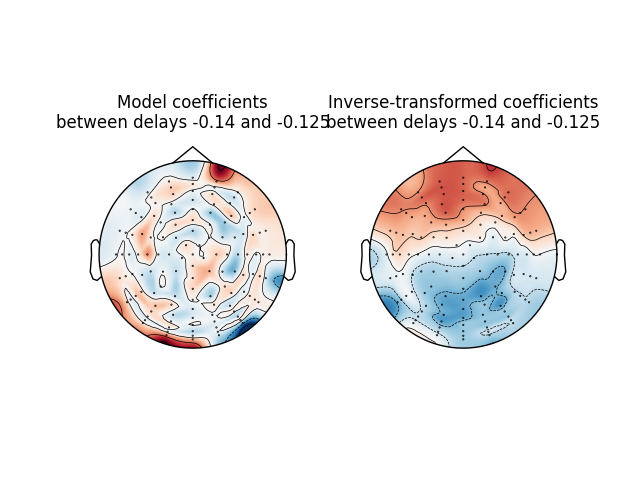

Investigate model coefficients#

Finally, we will look at how the decoding model coefficients are distributed across the scalp. We will attempt to recreate figure 5 from [1]. The decoding model weights reflect the channels that contribute most toward reconstructing the stimulus signal, but are not directly interpretable in a neurophysiological sense. Here we also look at the coefficients obtained via an inversion procedure [2], which have a more straightforward interpretation as their value (and sign) directly relates to the stimulus signal’s strength (and effect direction).

time_plot = (-0.140, -0.125) # To average between two timepoints.

ix_plot = np.arange(

np.argmin(np.abs(time_plot[0] - times)), np.argmin(np.abs(time_plot[1] - times))

)

fig, ax = plt.subplots(1, 2)

mne.viz.plot_topomap(

np.mean(mean_coefs[:, ix_plot], axis=1),

pos=info,

axes=ax[0],

show=False,

vlim=(-max_coef, max_coef),

)

ax[0].set(title=f"Model coefficients\nbetween delays {time_plot[0]} and {time_plot[1]}")

mne.viz.plot_topomap(

np.mean(mean_patterns[:, ix_plot], axis=1),

pos=info,

axes=ax[1],

show=False,

vlim=(-max_patterns, max_patterns),

)

ax[1].set(

title=(

f"Inverse-transformed coefficients\nbetween delays {time_plot[0]} and "

f"{time_plot[1]}"

)

)
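The difference between decoder weights and inverse-transformed coefficients can be seen in a two-channel toy problem. This is a minimal sketch assuming the single-output form of the transformation described in [2], where the pattern is the data covariance times the weights, divided by the variance of the reconstruction. It ignores time lags and is not necessarily how `patterns_` is computed internally; all variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n_times = 5000
source = rng.standard_normal(n_times)    # signal we want to reconstruct
artifact = rng.standard_normal(n_times)  # unrelated noise source

# Channel 0 records source + artifact; channel 1 records only the artifact
X_demo = np.column_stack([source + artifact, artifact])

# Least-squares decoder: weights that reconstruct the source from X_demo
w, *_ = np.linalg.lstsq(X_demo, source, rcond=None)

# Inverse-transformed coefficients: cov(X) @ w / var(reconstruction)
s_hat = X_demo @ w
pattern = np.cov(X_demo, rowvar=False) @ w / np.var(s_hat, ddof=1)

print("weights:", np.round(w, 2))
print("pattern:", np.round(pattern, 2))
```

The decoder places a large negative weight on channel 1 only to cancel the artifact, even though that channel carries no signal. The pattern is close to zero for channel 1 and close to one for channel 0, reflecting where the source is actually expressed.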

References#

Total running time of the script: (0 minutes 14.471 seconds)